The Hitchhiker's Guide to DeFi Insurance

by Carolina Goldstein, Catarina Urgueira and Tomás Palmeirim • Monday, October 31, 2022 • Published at Three Sigma

Introduction

Since the early days, the DeFi market has been severely shaken by hacks, bugs, exploits, rug-pulls, flash loan attacks, and a long list of other attack vectors, eroding confidence in its core value proposition. Insurance solutions that can mitigate the high risk inherent in this industry's innovations are one of the most important prerequisites for the widespread adoption of DeFi.

Yield and risk are positively correlated, with higher yields indicating market participants' greater

exposure to risk. DeFi yields are significantly higher than the ones seen in traditional finance,

indicating a greater level of risk. This risk is mainly attributable to the complexity, novelty, and

immutability of DeFi, where bugs or smart contract errors can lead to exploits resulting in colossal

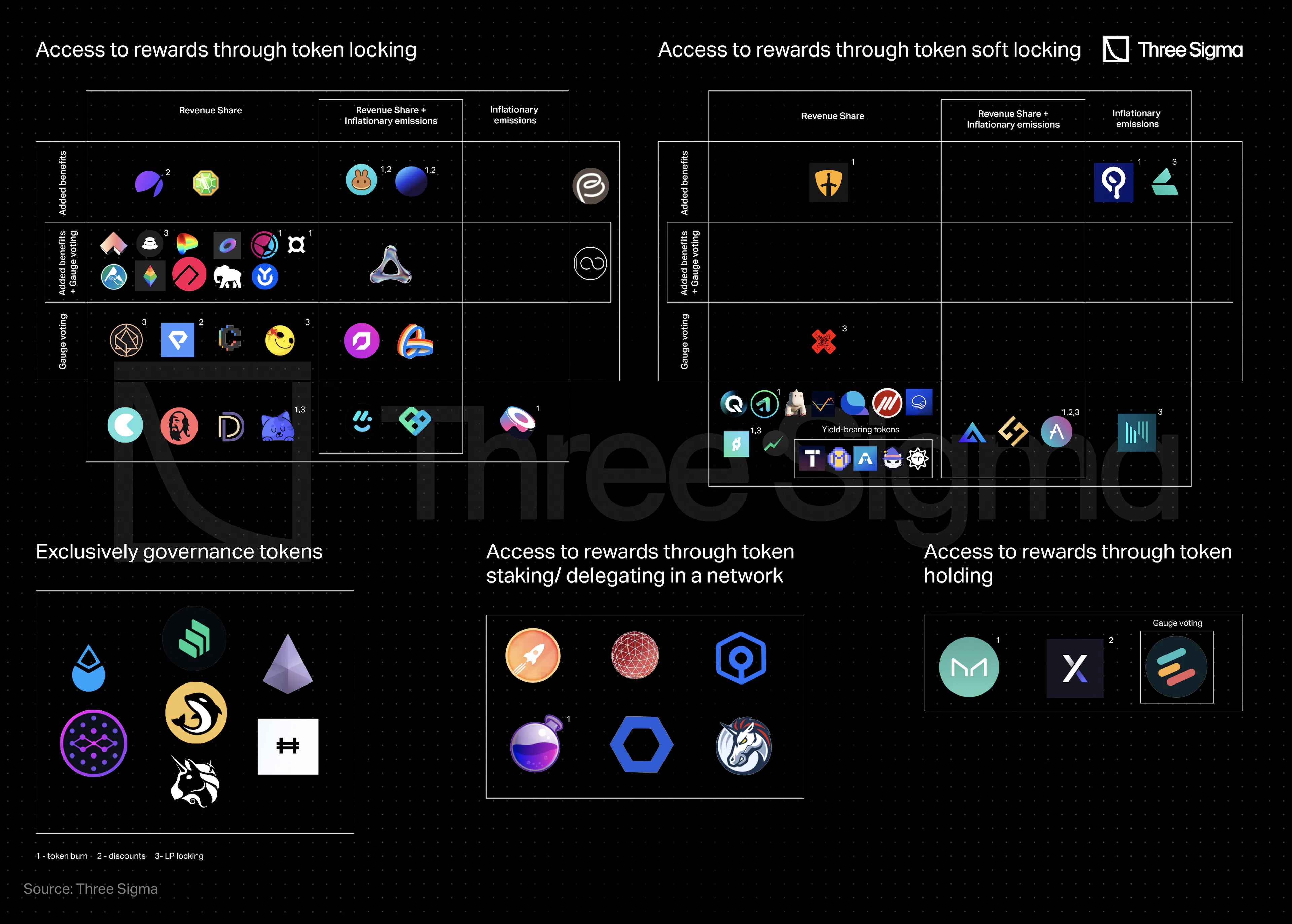

losses, emphasizing the need for insurance solutions in the industry.

Risk should ideally be measured automatically and in a decentralized manner, using only on-chain information. This makes developing insurance mechanisms for the DeFi sector extremely difficult, and the result does not entirely fit the models seen in traditional capital markets. Decentralizing the insurance market could make the claims process unbiased, trustless, transparent, and automated through smart contracts. It would also give coverage providers a return on their capital and give cover buyers guarantees about the safety of their assets.

The insured cover types, premium pricing, risk management, and claims process vary according to each insurance protocol's implementation and strategy. This paper examines the Ethereum DeFi insurance sector in depth, covering 12 different protocols, providing a historical review, and comparing their methodology, business models, and tokenomics.

Insurance Market Overview

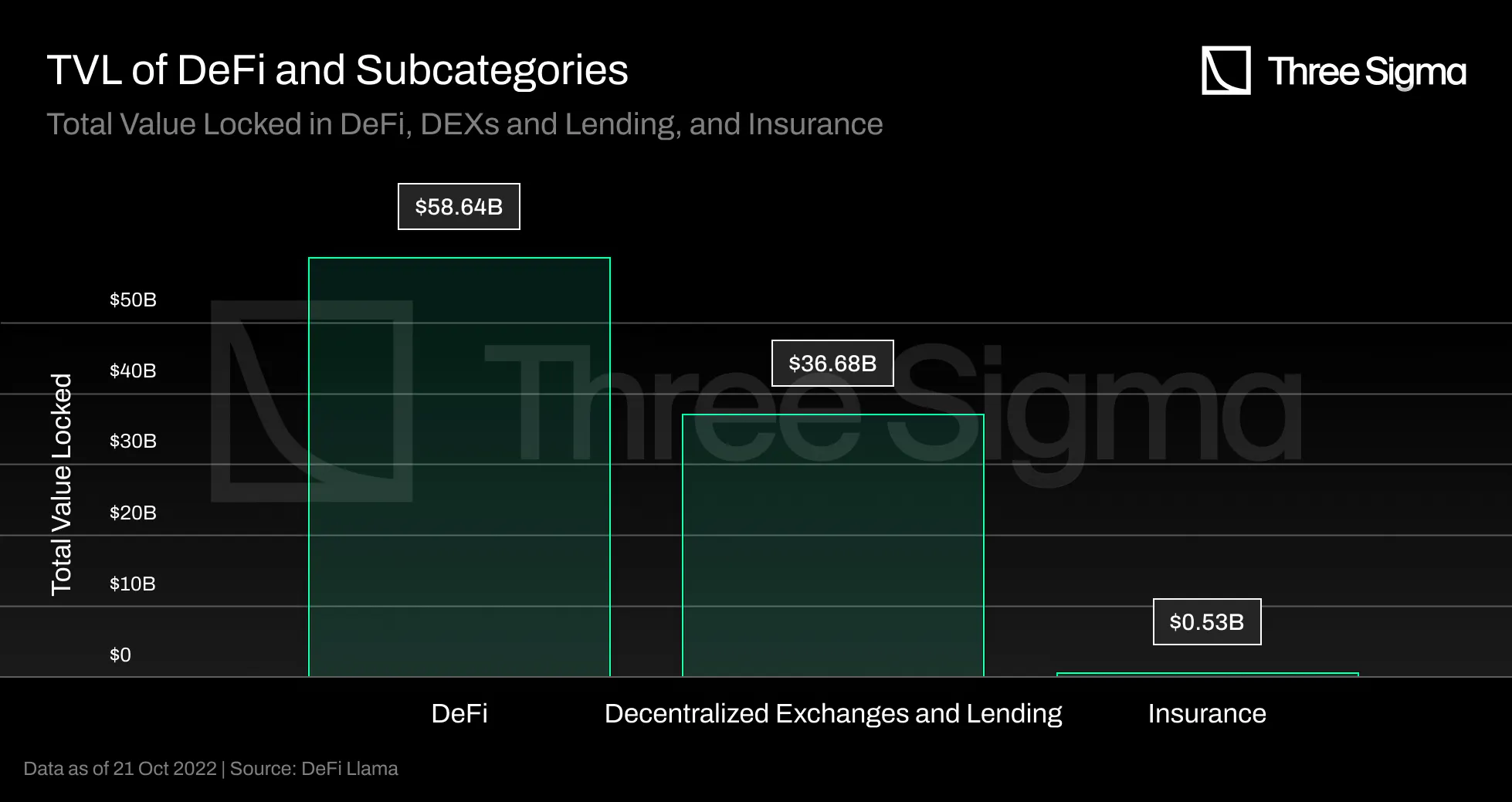

DeFi automates financial services via smart contracts and has \$53 billion in total value locked (TVL). Its all-time high (ATH) was \$170 billion in December 2021, so current TVL represents only 31% of the ATH (source: DeFi Llama). A rise in TVL is positive for the industry, but it also increases the potential damage if that value is lost to smart contract vulnerabilities.

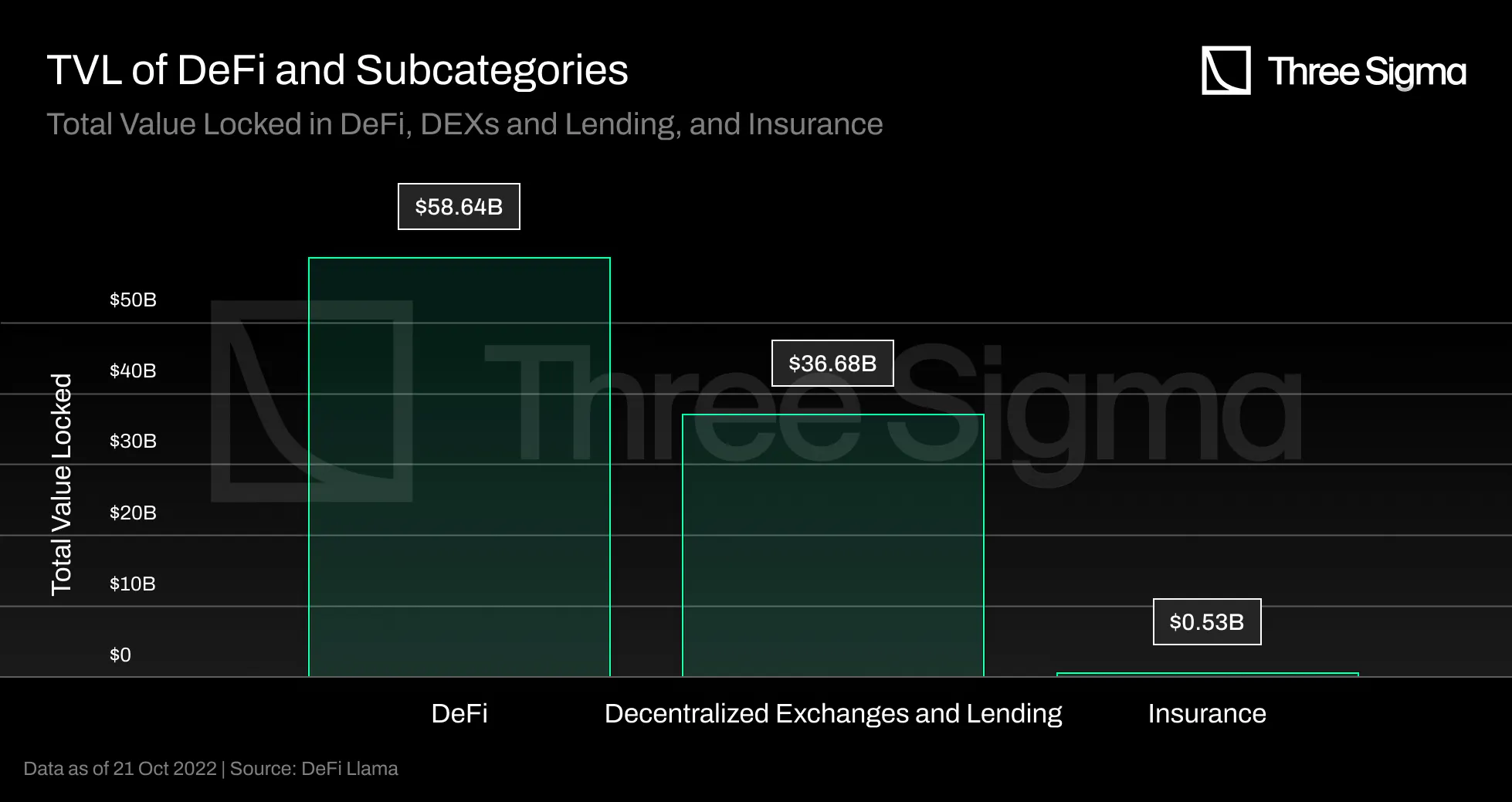

The first wave of innovation in DeFi focused mainly on two fundamental financial primitives: decentralized exchanges and lending. According to DeFi Llama, these two domains account for the vast majority of the value locked in DeFi protocols, totalling \$36.68 billion in TVL. In contrast, DeFi insurance accounts for only \$457 million in TVL despite significant advances in this segment, which is less than 1% of total DeFi TVL. Investors may want a sense of security before committing large sums of money to this market, yet the entire Web3 economy is currently underinsured.

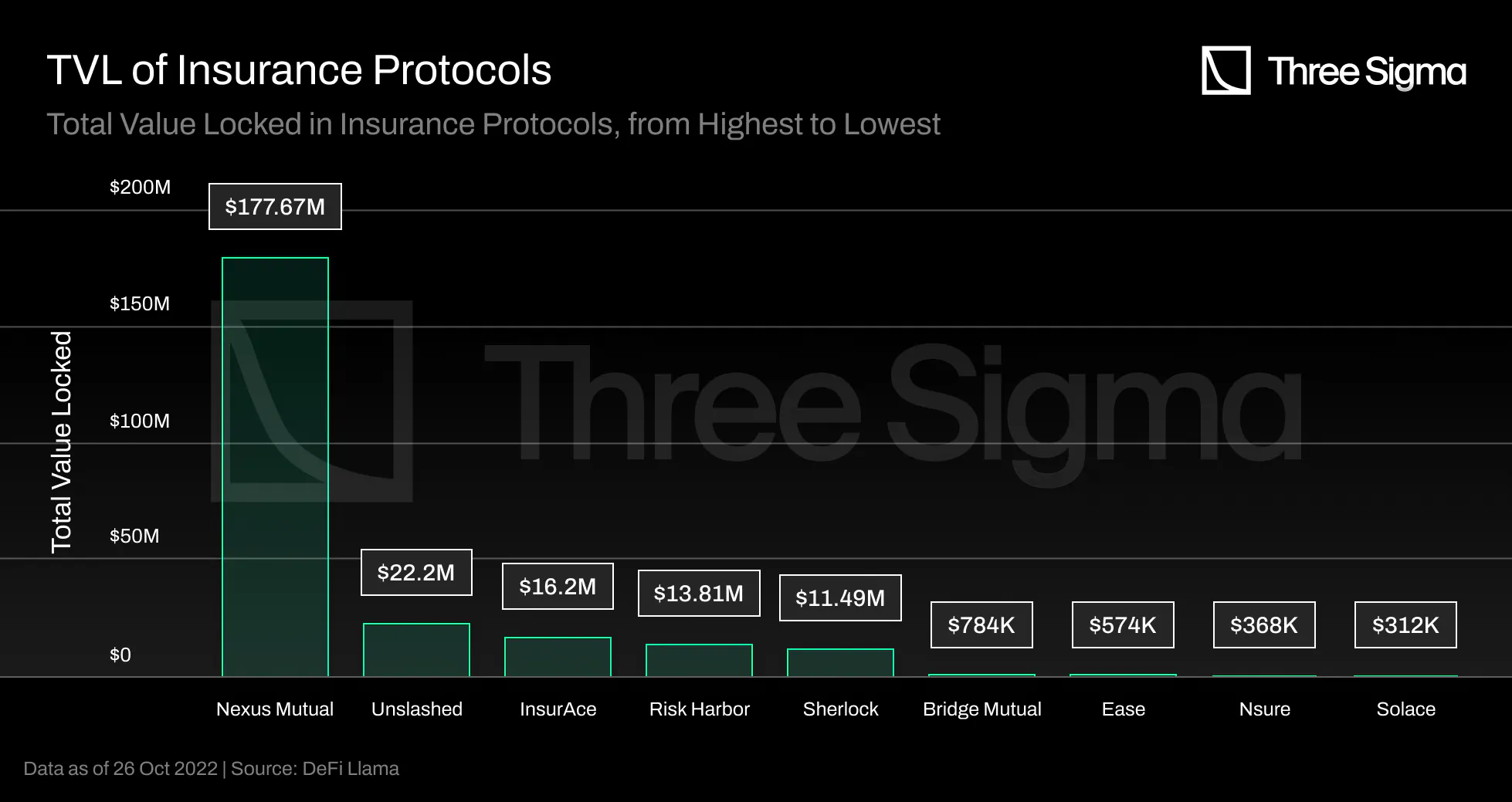

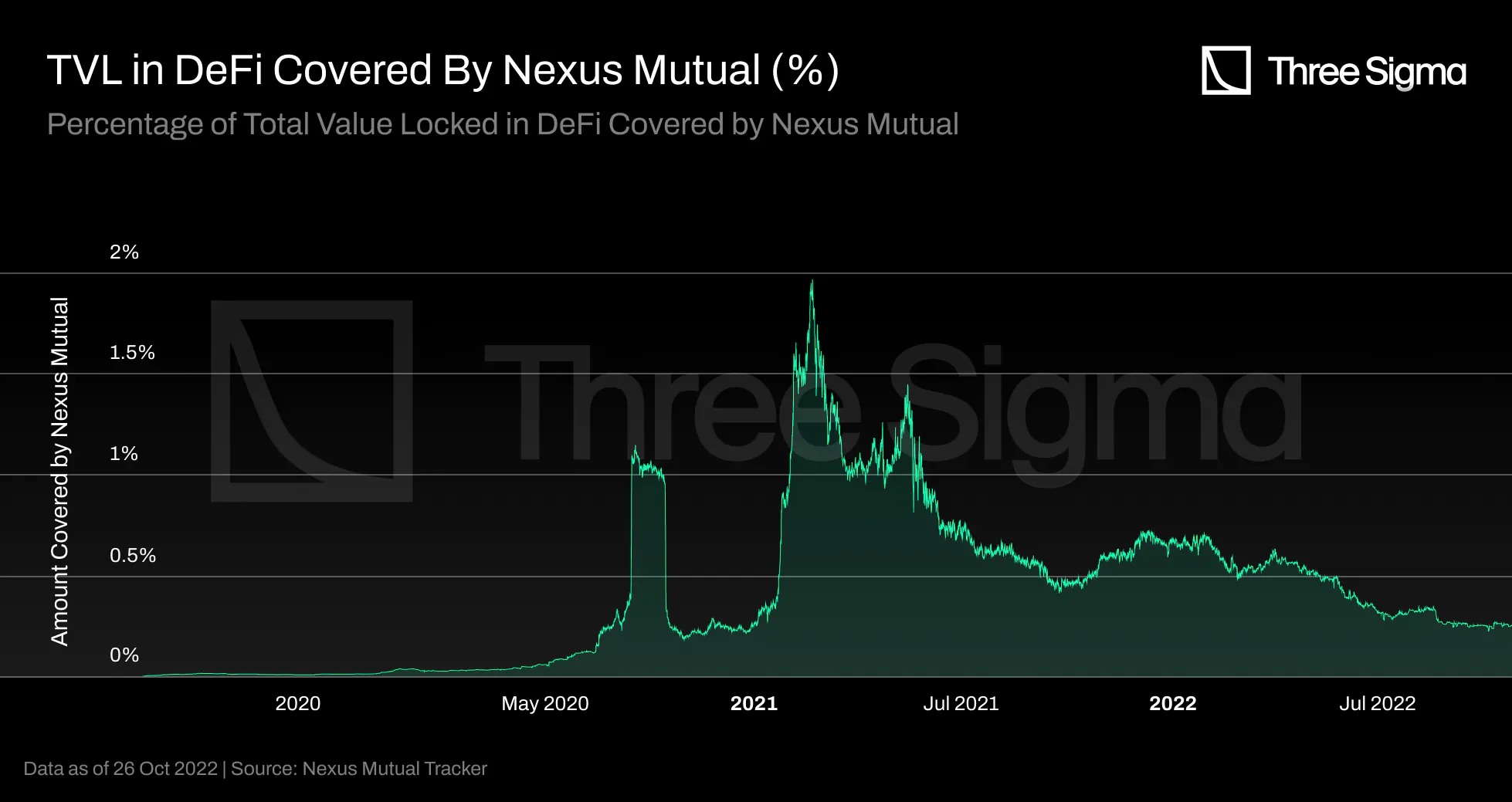

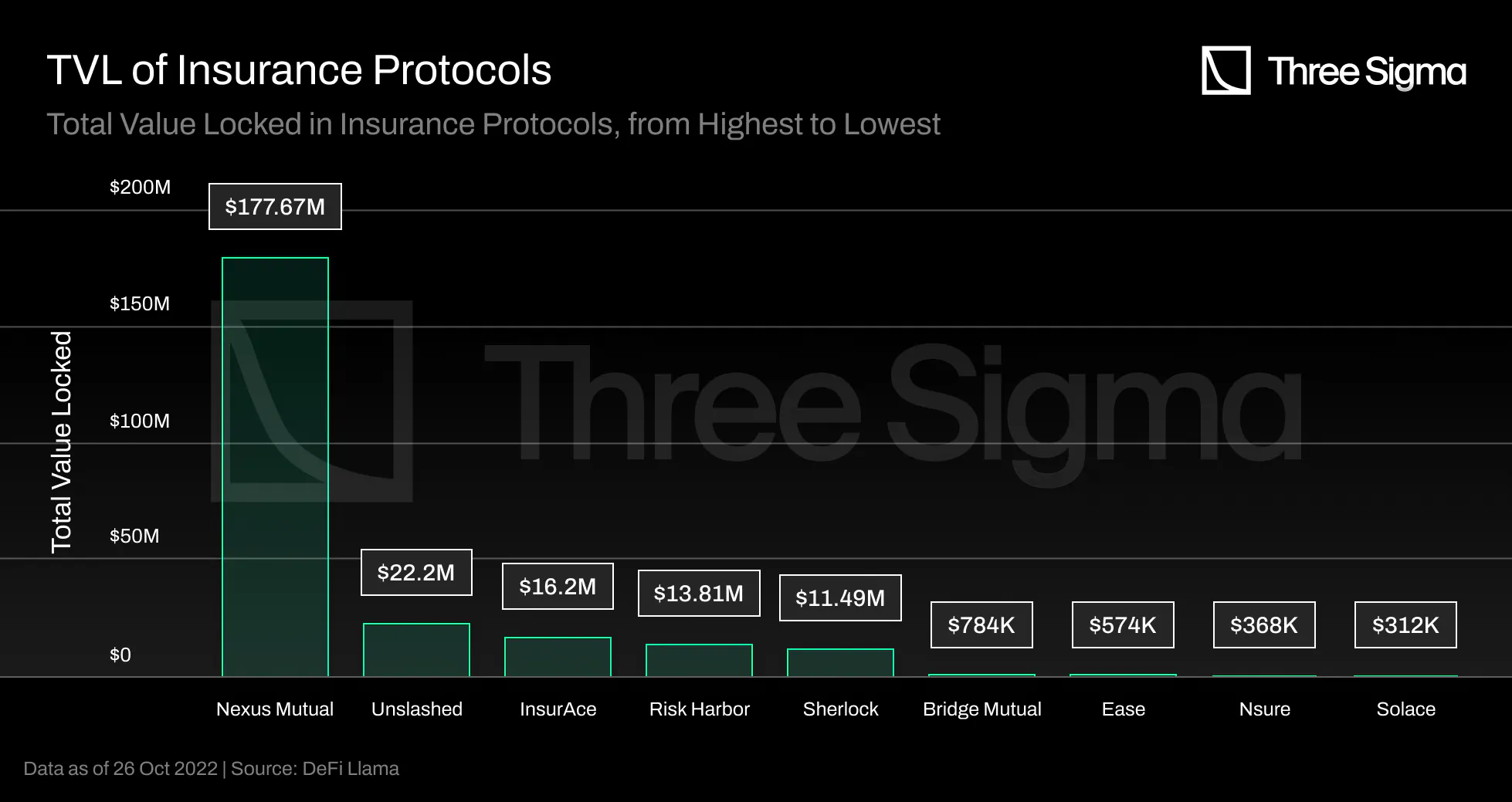

Nexus Mutual, the industry pioneer, has dominated the DeFi insurance market since its launch and accounts for over 68% of DeFi insurance TVL. Even so, it only covers 0.25% of the TVL in DeFi. The rest of the insurance market is still fragmented: the three protocols ranked after Nexus by TVL account for roughly 13% of the market.

What would happen if insurance coverage grew to 10% or 15% of DeFi TVL? If 10% of DeFi TVL were insured, the total assets covered would be roughly \$5 billion, while the current TVL in insurance is nowhere near one billion dollars. Covering even 10% of DeFi TVL would therefore require a significant increase in DeFi insurance TVL. Developing a decentralized insurance protocol is substantially complex, and existing solutions need further work to increase the covered value in DeFi.
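The scenario arithmetic can be reproduced in a few lines of Python. This is a rough sketch. The two constants are the DeFi Llama figures quoted above. Comparing required cover directly with insurance TVL is our simplifying assumption that each dollar of cover is backed by one dollar of capital.

```python
# Figures quoted in the text (DeFi Llama, October 2022), in US dollars.
DEFI_TVL = 53e9
INSURANCE_TVL = 457e6


def required_cover(share_of_tvl: float, defi_tvl: float = DEFI_TVL) -> float:
    """Dollar value that must be insured to cover `share_of_tvl` of DeFi TVL."""
    return share_of_tvl * defi_tvl


def growth_multiple(share_of_tvl: float) -> float:
    """How many times today's insurance TVL the target represents.

    Simplifying assumption: one dollar of capital backs one dollar of cover.
    """
    return required_cover(share_of_tvl) / INSURANCE_TVL


for share in (0.10, 0.15):
    print(f"{share:.0%} of DeFi TVL: ${required_cover(share) / 1e9:.2f}B "
          f"({growth_multiple(share):.1f}x current insurance TVL)")
```

Under this assumption, 10% and 15% coverage correspond to about \$5.3 billion and \$8 billion. That is roughly 12 and 17 times today's insurance TVL.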

How does DeFi Insurance work?

Insurance represents a contract or policy where an individual or entity receives financial protection or payment from an insurer in the event of a loss.

Insurance companies’ business strategies rely on diversifying risk, and these businesses usually generate revenue in two ways: by charging premiums and by investing them. Each policy carries a premium based on its risk. Once the policy is sold, the insurance firm traditionally invests the premium in safe, short-term, interest-bearing assets to avoid insolvency.

The global traditional insurance market was valued at more than \$5.3 trillion in 2021. It is expected to grow by approximately 10.4% to \$5.9 trillion in 2022, and to \$8.3 trillion in 2026 at a compound annual growth rate (CAGR) of 9.1% (source: PR Newswire). DeFi insurance therefore represents a significant growth opportunity in the blockchain industry: its ATH in November 2021 was \$1.82 billion, only 0.03% of the total traditional global market for 2021.

This global insurance market forecast can be used to estimate a reasonable coverable value in DeFi. If only 5% of the traditional global insurance market became coverable value in DeFi insurance, that would equal \$265 billion. Assuming 15% of the coverable value is insured, there would be \$39.75 billion in active cover. That is significantly more than the current TVL in DeFi insurance, and even more than the entire insured value in DeFi today.
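The market-sizing figures above follow from simple percentage and compound-growth arithmetic, and the sketch below recomputes them. The 5% and 15% shares are the scenario's assumptions, not forecasts. The constants are the figures quoted in the text.

```python
TRADITIONAL_MARKET_2021 = 5.3e12   # global insurance market, 2021 (PR Newswire figure)
TRADITIONAL_MARKET_2022 = 5.9e12   # expected 2022 value
DEFI_INSURANCE_ATH = 1.82e9        # DeFi insurance TVL at its November 2021 ATH


def compound(value: float, rate: float, years: int) -> float:
    """Grow `value` at a constant compound annual growth rate."""
    return value * (1 + rate) ** years


# DeFi insurance ATH as a share of the traditional market (about 0.034%).
defi_share = DEFI_INSURANCE_ATH / TRADITIONAL_MARKET_2021

# Scenario: 5% of the traditional market becomes coverable in DeFi...
coverable_value = 0.05 * TRADITIONAL_MARKET_2021   # $265 billion
# ...and 15% of that coverable value is actually insured.
active_cover = 0.15 * coverable_value              # $39.75 billion

# Four years at a 9.1% CAGR from 2022 gives roughly $8.36 trillion in 2026,
# consistent with the ~$8.3 trillion forecast.
market_2026 = compound(TRADITIONAL_MARKET_2022, 0.091, 4)

print(f"DeFi share: {defi_share:.3%}, coverable: ${coverable_value / 1e9:.2f}B, "
      f"active cover: ${active_cover / 1e9:.2f}B, 2026 market: ${market_2026 / 1e12:.2f}T")
```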

In the same way as traditional insurance companies, DeFi insurance protocols can also carefully invest their users' capital in other DeFi products to generate more revenue. Generally, if a company prices its risk efficiently, it should generate more income in premiums than it spends on conditional payouts.

Instead of purchasing coverage from a centralized entity, DeFi insurance protocols let users and companies purchase coverage from a decentralized pool of coverage providers. Anyone can become a coverage provider by locking capital in a capital pool and exposing themselves to risk, just as liquidity providers do in lending protocols. Cover providers choose pools whose returns they consider high relative to the covered protocol's risk. In effect, individuals trade the outcomes of events based on their own estimates of the probability of the underlying risk event. If a covered protocol suffers an adverse event such as a hack, the funds in the capital pool covering that protocol are used to compensate users who bought coverage against that specific event. Coverage providers are incentivized to provide liquidity and are rewarded for assuming risk with a return on their capital. That yield is a percentage of the premiums paid, which ties the premium to the risk of the protocol under consideration. However, DeFi insurers often fold their own liquidity mining incentives, used to bootstrap liquidity for the pools, into their yield calculation.
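The following Python sketch makes the mechanism concrete by modelling a single-protocol capital pool. It is a hypothetical illustration, not any protocol's contract. The class name, the 1:1 capacity rule (cover sold cannot exceed the capital staked) and the 50% premium share paid to providers are all assumptions chosen for readability.

```python
from dataclasses import dataclass, field


@dataclass
class CoveragePool:
    """Hypothetical capital pool backing cover on a single protocol."""
    premium_share: float = 0.5   # assumed share of each premium paid to providers
    stakes: dict[str, float] = field(default_factory=dict)
    rewards: dict[str, float] = field(default_factory=dict)
    active_cover: float = 0.0

    def total_stake(self) -> float:
        return sum(self.stakes.values())

    def deposit(self, provider: str, amount: float) -> None:
        """Lock capital in the pool, exposing it to this protocol's risk."""
        self.stakes[provider] = self.stakes.get(provider, 0.0) + amount
        self.rewards.setdefault(provider, 0.0)

    def buy_cover(self, amount: float, premium: float) -> None:
        """Sell cover if capacity allows and share the premium pro rata."""
        total = self.total_stake()
        # Assumed 1:1 capacity rule: active cover may not exceed capital at risk.
        if total <= 0 or self.active_cover + amount > total:
            raise ValueError("insufficient capacity")
        self.active_cover += amount
        for provider, stake in self.stakes.items():
            self.rewards[provider] += premium * self.premium_share * stake / total

    def pay_claim(self, amount: float) -> float:
        """Pay a valid claim, reducing every stake pro rata; return the payout."""
        total = self.total_stake()
        if total <= 0:
            return 0.0
        payout = min(amount, total)
        for provider, stake in self.stakes.items():
            self.stakes[provider] = stake - payout * stake / total
        self.active_cover = max(self.active_cover - amount, 0.0)
        return payout


if __name__ == "__main__":
    pool = CoveragePool()
    pool.deposit("alice", 60.0)
    pool.deposit("bob", 40.0)
    pool.buy_cover(amount=50.0, premium=10.0)   # providers share 5.0 in rewards
    print(pool.rewards, pool.pay_claim(50.0), pool.stakes)
```

Because losses and rewards are both split pro rata, a provider's share of the upside and of the downside is the same fraction of the pool.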

Our DeFi insurance thesis is that as the total value locked in DeFi grows, so does the need to secure that value. With TVL growing, users must have access to solutions that protect their capital. This is especially true as institutional players enter the market, since insurance is already a big part of traditional financial markets.

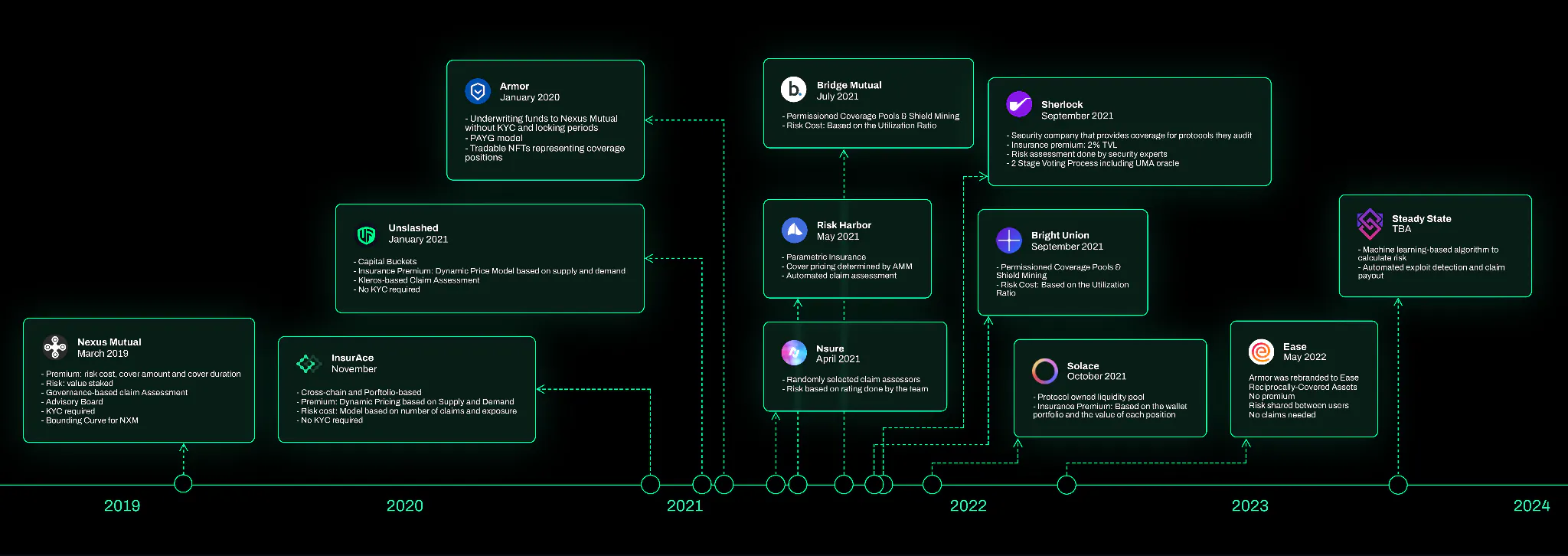

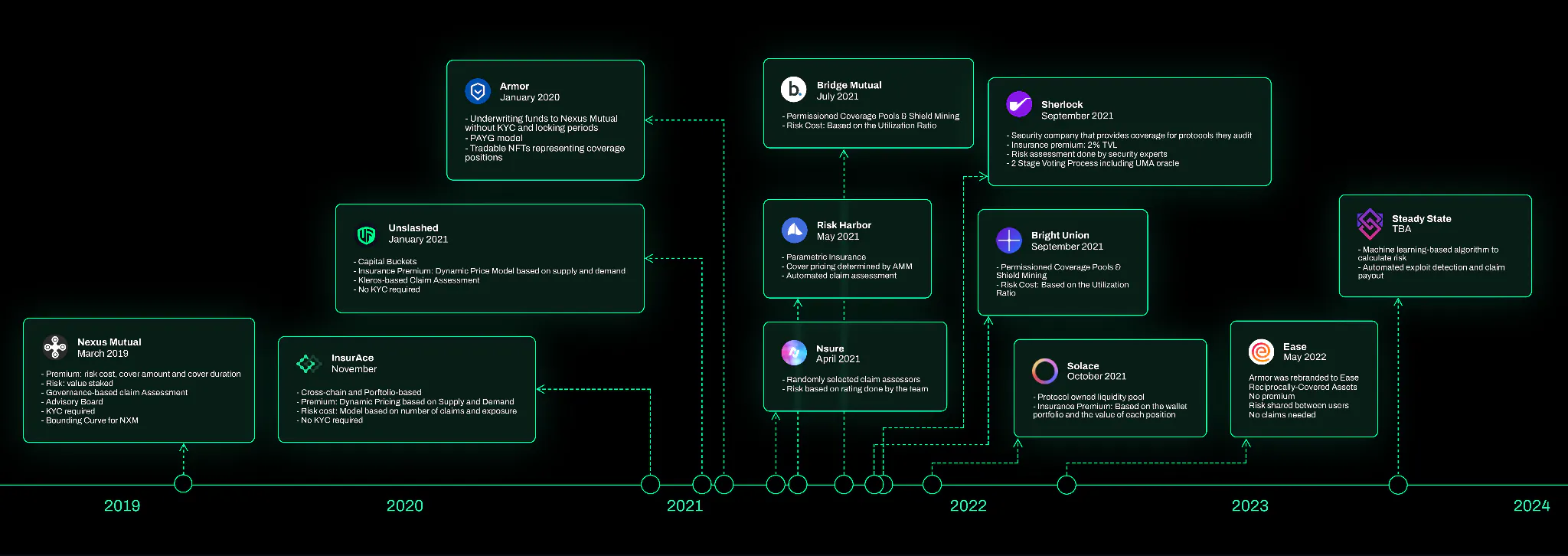

Nexus Mutual was the first insurance protocol in the DeFi industry, and many protocols launched after it to solve some of the ongoing challenges in this space. In the next sections, we describe how 12 protocols are tackling existing challenges in decentralized insurance and share our views on some of the approaches used.

Nexus Mutual

Nexus Mutual launched on Ethereum on May 30th, 2019. It combines smart contract code with a fully compliant UK-based legal entity operating under a discretionary mutual structure. This means all claims are paid depending on a decision made by the Board, which in this case is the Nexus Mutual membership.

A discretionary mutual is not an insurance provider but a legal structure that allows members to trade under the umbrella of a single legal personality. As a result, Nexus is not subject to the regulatory and legal requirements that apply to insurance companies. The discretionary mutual can trade legally in the UK, and coverage is available globally, although some countries are restricted due to local laws. Anyone who wants to join the mutual in any capacity must go through KYC to ensure compliance, and membership rights are represented by the native token NXM. This KYC procedure can give institutional users greater regulatory confidence.

Nexus Mutual's first product was Smart Contract Cover, the first insurance product that let users protect themselves from smart contract risks on major DeFi protocols.

In January 2021, Nexus Mutual expanded cover protection to other chains such as BNB, Polkadot, and Cosmos. It also added protection for centralized platforms such as Coinbase and Binance, and for lending services such as BlockFi and Hodlnaut.

In April 2021, given the ever-evolving scope of DeFi attacks, Nexus Mutual added Protocol Cover. This broad and versatile product protects members against smart contract hacks, oracle attacks, severe economic attacks, governance attacks, and failures of layer 2 components, for protocols on any chain.

In July 2021, Nexus Mutual added Yield Token Cover. It provides coverage against the full range of risks to which an LP position in a protocol, or combination of protocols, is exposed. This includes smart contract risk, oracle failure or manipulation, stablecoin de-pegs, governance attacks, and any other threat that leads to the protocol losing value, provided the protocol has an LP token representing consumer deposits.

The vast majority of Nexus covers protect users against protocol failures, accounting for more than 80% of total covers. They are followed by custodian cover (a little more than 10%) and yield token cover.

Nexus Mutual achieved product-market fit early, attracting a large amount of TVL in its first months. It is still the largest insurance protocol by TVL, but its written premium, denominated in US dollars, has declined since mid-2021. One possible reason is that newer insurance protocols such as Unslashed and InsurAce are taking market share. They can offer users more economic incentives by distributing governance tokens, and they do not require a KYC process. External macro conditions could also have influenced this outcome, which we analyze further as the other insurance protocols are presented.

Nexus Participants

Nexus Mutual members can buy insurance coverage using NXM, provide liquidity to the capital pool as Cover Providers, and/or vote in the claims process as Claim Assessors. A small membership fee of 0.002 ETH is charged to all members.

Cover Providers are Nexus Mutual members who stake NXM against protocols or centralized exchanges to underwrite insurance. In return they earn 50% of the insurance premiums, paid as newly minted NXM. Minting NXM requires adding ETH to the Capital Pool, which is currently funded by the premiums flowing into the pool. This mechanism exists because of the bonding curve, which was once the primary trading venue for NXM. As a result, the circulating supply of NXM increases, but so does the value of the Capital Pool. Cover Providers are, therefore, only exposed to protocol-specific risks. Rewards are proportional to the amount of capital the cover provider has locked into the pool. Staking does not generate rewards on its own: covers must be purchased for stakers to receive rewards (50% of the premiums) and for the protocol to generate revenue.
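The reward rule can be sketched as follows. The 50% staker share and the pro rata split come from the text. Converting the ETH premium into NXM at a single current price is a simplifying assumption, and the function name is hypothetical.

```python
def staker_rewards(premium_eth: float, nxm_price_eth: float,
                   stakes_nxm: dict[str, float],
                   staker_share: float = 0.5) -> dict[str, float]:
    """Newly minted NXM paid to each staker for one cover purchase.

    Assumes the staker share of the premium is minted at a single NXM price.
    """
    total_stake = sum(stakes_nxm.values())
    # Staking alone earns nothing: with no premium (or no stake) there are no rewards.
    if premium_eth <= 0 or total_stake <= 0:
        return {staker: 0.0 for staker in stakes_nxm}
    minted_nxm = premium_eth * staker_share / nxm_price_eth
    return {staker: minted_nxm * stake / total_stake
            for staker, stake in stakes_nxm.items()}
```

For example, a 2 ETH premium at an NXM price of 0.04 ETH mints about 25 NXM. Stakes of 300 and 100 NXM would then receive about 18.75 and 6.25 NXM respectively.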

On the other hand, Claim Assessors are members who stake NXM to evaluate claims submitted by other members and receive rewards for voting in line with the consensus.

Claim Assessment

Nexus Mutual implements a three-step, governance-based approach to claims assessment, in which token-holding claim assessors vote on claim decisions. To submit a claim, the member must stake 5% of the cover price in NXM tokens. This deposit is returned if the claim is approved; otherwise, the tokens are destroyed. After a claim is submitted, assessors vote to approve or deny it based on the submitted proof of cover. If the claim is approved, cover providers in that pool have their stakes reduced in proportion to the claim amount. If the stakes are insufficient to cover the claim amount, the mutual as a whole absorbs the remaining loss.

Claim assessors must lock their tokens for fourteen days before voting on any claim. This encourages a fair voting procedure, because members cannot vote on their own claim immediately after submitting it. For a claim to be approved, more than 70% of the votes cast must be in favour, and the total vote weight must exceed five times the cover amount.
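The approval rule and the stake reduction can be written as two small functions. This sketch rests on stated assumptions:

- The 70% threshold is read as a share of the vote weight in favour of the claim.
- Vote weight and cover amount are expressed in the same unit.
- Every outcome other than approval is collapsed into one label, without modelling any escalation step.

All names are hypothetical.

```python
from dataclasses import dataclass

APPROVAL_THRESHOLD = 0.70   # share of vote weight that must favour the claim (assumed reading)
QUORUM_MULTIPLE = 5         # total vote weight must exceed 5x the cover amount


@dataclass(frozen=True)
class Vote:
    weight: float   # NXM locked by the assessor
    accept: bool


def claim_outcome(votes: list[Vote], cover_amount: float) -> str:
    """Apply the quorum check first, then the approval threshold."""
    total = sum(v.weight for v in votes)
    if total <= QUORUM_MULTIPLE * cover_amount:
        return "insufficient vote weight"
    in_favour = sum(v.weight for v in votes if v.accept)
    return "approved" if in_favour / total > APPROVAL_THRESHOLD else "not approved"


def reduce_stakes(stakes: dict[str, float],
                  claim: float) -> tuple[dict[str, float], float]:
    """Burn stakes pro rata to pay `claim`.

    Returns the new stakes and the shortfall left for the mutual to absorb.
    """
    total = sum(stakes.values())
    if total <= 0:
        return dict(stakes), claim
    paid = min(claim, total)
    new_stakes = {name: s - paid * s / total for name, s in stakes.items()}
    return new_stakes, claim - paid
```

Keeping the quorum check separate from the approval ratio highlights the participation problem discussed below: a claim can have overwhelming support and still fail because too little NXM voted.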

All claims are accessible through the Nexus Mutual application and at the smart contract level. If the insurer denies valid claims, new members are unlikely to join, and existing customers will not purchase new coverage products.

This mechanism for evaluating claims has several disadvantages. The process requires manual voting. Members can vote to reject a claim to avoid losing their capital. They are also incentivized to vote with the majority rather than use their own judgment. As seen in the governance of other DeFi protocols, only some members want to take an active part in voting, so the 70% threshold and the required vote weight can be challenging to reach.

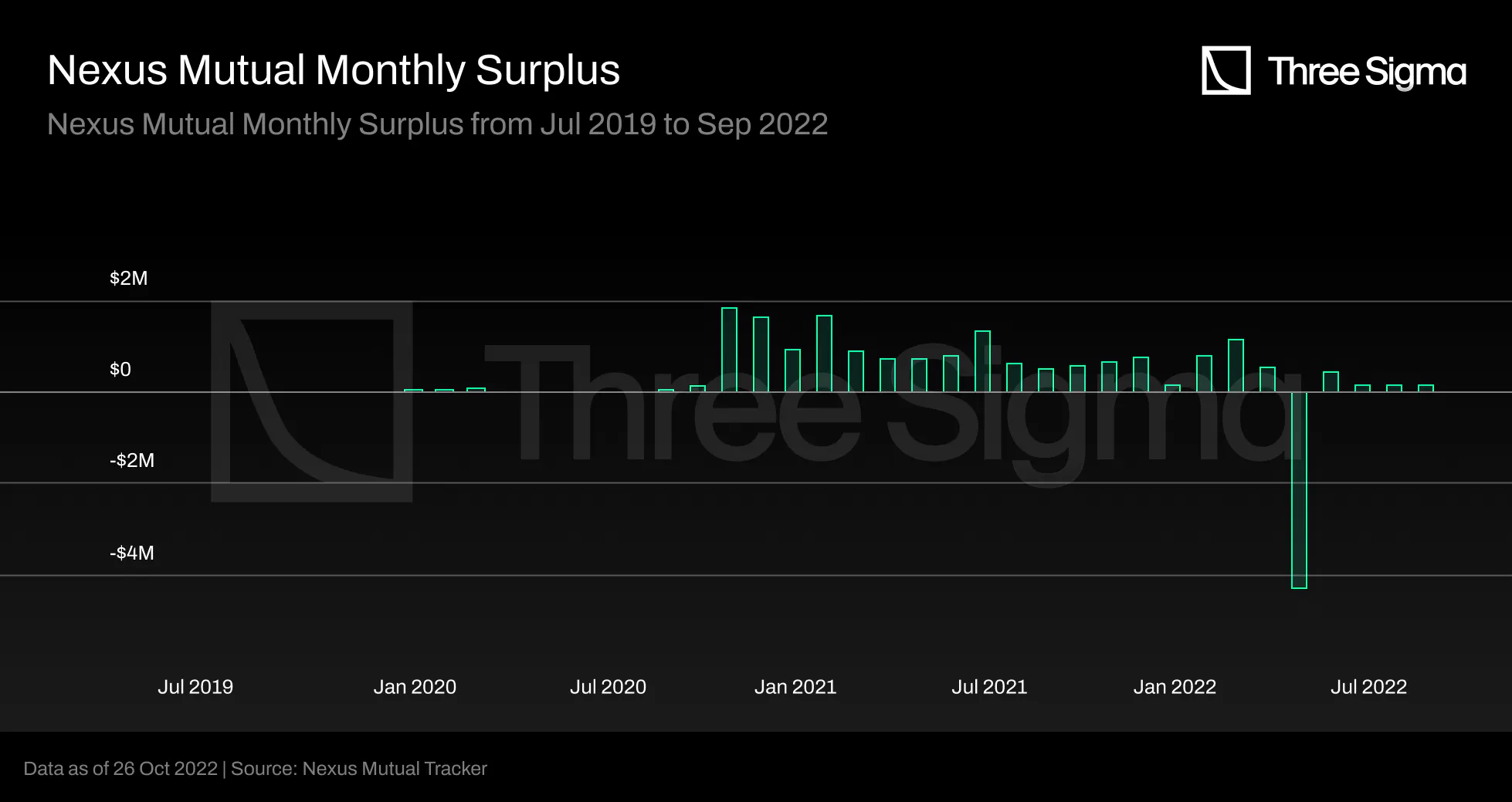

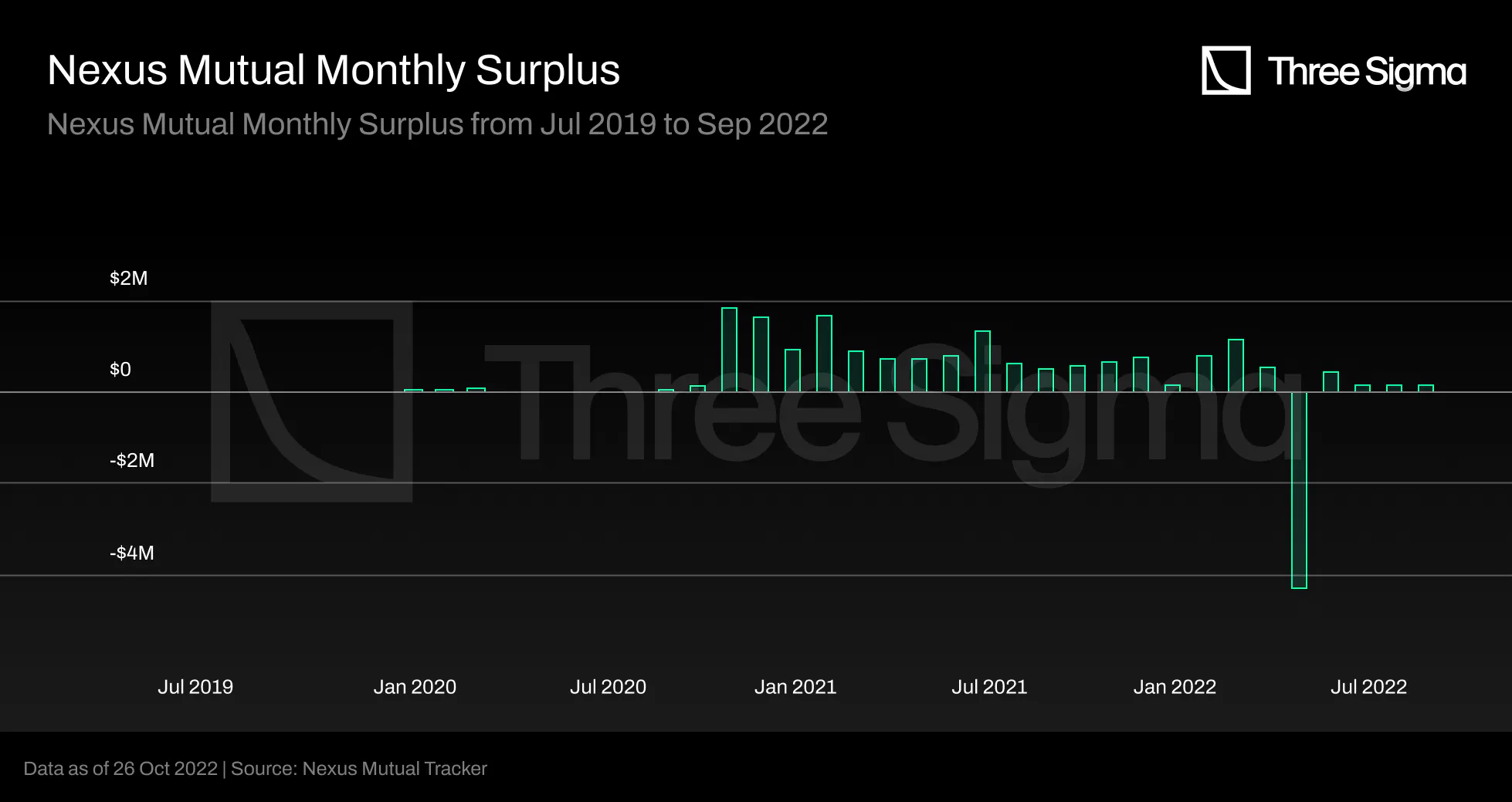

The claim payouts in 2022 were mainly caused by two events: the Rari Capital Fuse market exploit, which stemmed from a reentrancy vulnerability, and the Perpetual Protocol v1 economic design failure. As the graph shows, Nexus Mutual paid out 20 ETH and 5,008,000 DAI on Rari Capital claims in April, causing a massive decline in the monthly surplus.

Nexus Mutual did not pay a single claim related to the UST de-peg and Anchor Protocol, because the coverage provided was limited to smart contract issues and did not include the UST de-peg.

DeFi incidents require expertise and on-chain data analysis to determine whether the insurance policy covers the incident and whether the claimant's wallet was affected. It can be tough for regular users to vote wisely on this. The Advisory Board of Nexus Mutual is made up of insurance experts with the necessary expertise to conduct this investigation. Its findings are shared with the community before voting in the form of an investigation summary.

Premium Pricing

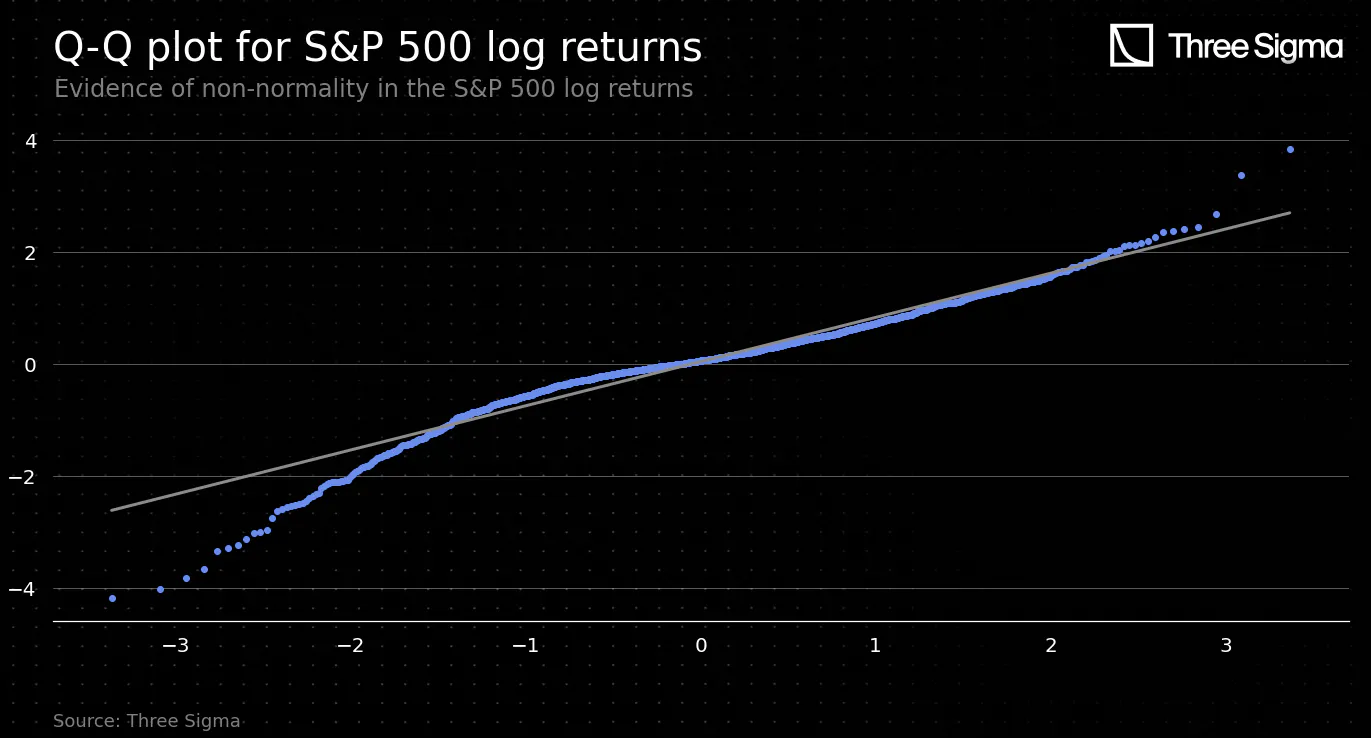

Nexus Mutual uses a market-based risk pricing mechanism. Risk is determined by combining a base risk calculation, computed using actuarial math, with the total value staked. Essentially, cover providers stake NXM against insurance taken out on a specific protocol to demonstrate their confidence in the protocol's safety. A larger amount of staked NXM indicates that cover providers, after assessing the risk, are comfortable depositing funds in that pool. This results in a lower risk cost and a lower premium for that pool.

In that sense, the premium is entirely driven by the amount of NXM staked by Risk Assessors against each protocol and custodian. More specifically, the price of each cover is calculated as follows:

$$\text{Cover price} = \text{risk cost} \times (1 + \text{surplus margin}) \times \frac{\text{cover period}}{365.25} \times \text{cover amount}$$

where the risk cost is calculated automatically from the value staked against the protocol or custodian, such that the more value is staked, the lower the annual cost of coverage. The surplus margin is a parameter introduced to cover costs (i.e. claim assessor and cover provider rewards) and to generate protocol revenue; it is currently set at 30%.

A strong assumption underlies the whole pricing system: cover providers stake more money in protocols they consider safer and believe they will not have to pay out on. It follows that pools with more value staked should charge a smaller premium. However, the incentive for capital providers to stake in a given pool is tightly tied to the APY they expect to receive, which could cloud their judgment of risk. This raises the question of whether the value staked against a protocol, taken as the sole metric, is sufficient for measuring risk.

The inputs for calculating the risk cost are:

- the net staked NXM, defined as the amount of NXM staked minus 50% of the pending staking withdrawals;
- a maximum risk cost, set at 100%;
- a minimum risk cost, set at 2%;
- the low risk cost limit, which is the amount of stake required to reach the minimum risk cost, set at 50,000 NXM.

Given these inputs, the risk cost is calculated as follows:

$$\text{Risk cost} = 1 - \left(\frac{\text{net staked NXM}}{\text{low risk cost limit}}\right)^{1/7}$$

subject to the risk cost being greater than or equal to the minimum risk cost (2%) and less than or equal to the maximum risk cost (100%).
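Both formulas translate directly into code. The Python sketch below uses the parameter values quoted above: a 30% surplus margin, 2% and 100% bounds, and a 50,000 NXM low risk cost limit. The function names are ours. The sketch ignores details such as rounding and unit conversion that an on-chain implementation would need.

```python
MIN_RISK_COST = 0.02          # 2% floor
MAX_RISK_COST = 1.00          # 100% cap
LOW_RISK_COST_LIMIT = 50_000  # NXM needed to reach the minimum risk cost
SURPLUS_MARGIN = 0.30         # current surplus margin


def net_staked_nxm(staked: float, pending_withdrawals: float) -> float:
    """Staked NXM minus 50% of pending staking withdrawals."""
    return staked - 0.5 * pending_withdrawals


def risk_cost(net_staked: float) -> float:
    """Annual risk cost from the net stake, clamped to [2%, 100%]."""
    ratio = max(net_staked, 0.0) / LOW_RISK_COST_LIMIT
    raw = 1 - ratio ** (1 / 7)
    return min(max(raw, MIN_RISK_COST), MAX_RISK_COST)


def cover_price(cover_amount: float, cover_period_days: float,
                net_staked: float) -> float:
    """Price of one cover, in the same unit as `cover_amount`."""
    return (risk_cost(net_staked) * (1 + SURPLUS_MARGIN)
            * cover_period_days / 365.25 * cover_amount)
```

With 10,000 NXM net staked, the risk cost is roughly 20.5%, so one year of cover on 100 ETH would cost about 26.7 ETH. A pool with 50,000 NXM or more staked hits the 2% floor, and the same cover costs about 2.6 ETH. The seventh root makes the risk cost fall steeply for the first few thousand NXM staked and flatten out as the stake approaches the limit.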

Note that there are capacity limits on the amount of cover offered for specific risks, which protect the protocol from becoming overexposed. There are two such limits. The Specific Risk Limit varies with the amount staked on a particular risk. The Global Capacity Limit is based on the total resources of the mutual.

The Specific Risk Limit is calculated as the capacity factor times the net staked NXM (defined above). Capacity factors can be updated by governance; at the time of writing, the capacity factors for all covered protocols are equal to 4. The Global Capacity Limit is calculated as 20% of the Minimum Capital Requirement (in ETH terms). We could not find a further explanation of how these values were derived.
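The two limits can be sketched as follows. The capacity factor of 4 and the 20% share come from the text. Two assumptions are ours and are labelled in the code: net staked NXM is converted to ETH at a single spot price, and the binding limit is the smaller of the two less any cover already sold. All function names are hypothetical.

```python
CAPACITY_FACTOR = 4            # current value for all covered protocols
GLOBAL_CAPACITY_SHARE = 0.20   # 20% of the MCR


def specific_risk_limit_eth(net_staked_nxm: float, nxm_price_eth: float,
                            capacity_factor: float = CAPACITY_FACTOR) -> float:
    """Capacity factor times net staked NXM, converted to ETH.

    Assumption: the conversion uses a single spot NXM price.
    """
    return capacity_factor * net_staked_nxm * nxm_price_eth


def global_capacity_limit_eth(mcr_eth: float) -> float:
    """20% of the Minimum Capital Requirement."""
    return GLOBAL_CAPACITY_SHARE * mcr_eth


def available_capacity_eth(net_staked_nxm: float, nxm_price_eth: float,
                           mcr_eth: float, active_cover_eth: float = 0.0) -> float:
    """Cover that can still be sold on one risk.

    Assumption: the binding limit is the smaller of the two, minus cover already sold.
    """
    limit = min(specific_risk_limit_eth(net_staked_nxm, nxm_price_eth),
                global_capacity_limit_eth(mcr_eth))
    return max(limit - active_cover_eth, 0.0)
```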

Minimum Capital Requirement

The Minimum Capital Requirement (MCR) is an important component of the Nexus Mutual system, as it is used directly in the NXM price formula. It represents the minimum amount of funds the mutual needs in order to be very confident that it can pay all claims, and is calculated as follows:

$$\text{MCR} = \max\left(\text{MCR Floor},\ f(\text{Cover Amount})\right)$$

The idea behind this formula is that f(Cover Amount) determines the MCR. However, especially in the beginning, the mutual sets an MCR Floor to ensure there is enough capital to enable cover growth.

The floor was set at 12,000 ETH at launch (May 2019), so the protocol had to gather this amount of ETH before cover purchases were enabled for the first time. One month later, the team lowered it to 7,000 ETH so that it could start selling cover earlier. A few months after that, governance voted to implement a dynamic MCR Floor to better meet concentrated demand on a smaller number of systems. The incremental rates were tweaked until October 2020, when it was decided to switch this increase off. The MCR Floor currently stands at 162,424.73 ETH.

In May 2021, the capital model floor value was decentralized, and MCR calculations are now fully on-chain. The team no longer updates the MCR manually. Instead, the protocol moves the existing MCR value towards the target each time someone buys or sells NXM or a claim succeeds. The actual MCR is smoothed to avoid large one-off shocks: it can move at most 1% in any one update and at most 5% per day. The capital model is currently implemented by assuming that a fixed gearing factor applies to the active cover in ETH terms:

$$f(\text{Cover Amount}) = \frac{\text{Active Cover in ETH}}{\text{Gearing Factor}}$$

If the full (off-chain) Capital Model produces very different results, the gearing factor is updated via governance. The Gearing Factor is currently set at 4.8.
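The smoothing rule can be sketched as a small state machine in Python. The 4.8 gearing factor and the 1% and 5% limits come from the text. The class name is hypothetical. Measuring the 5% daily limit against the MCR at the start of each calendar day is our assumption about how the daily window is defined.

```python
from dataclasses import dataclass

GEARING_FACTOR = 4.8
MAX_STEP = 0.01    # at most 1% movement per update
MAX_DAILY = 0.05   # at most 5% movement per day


def target_mcr(active_cover_eth: float, mcr_floor_eth: float) -> float:
    """MCR target = max(MCR Floor, Active Cover in ETH / Gearing Factor)."""
    return max(mcr_floor_eth, active_cover_eth / GEARING_FACTOR)


@dataclass
class SmoothedMcr:
    """Hypothetical tracker; the daily window is the calendar day index `day`."""
    mcr: float
    day: int = 0

    def __post_init__(self) -> None:
        self.day_start_mcr = self.mcr   # MCR at the start of the current day

    def update(self, active_cover_eth: float, mcr_floor_eth: float, day: int) -> float:
        """Move the MCR towards its target, respecting both smoothing limits."""
        if day != self.day:   # a new day resets the daily movement budget
            self.day, self.day_start_mcr = day, self.mcr
        target = target_mcr(active_cover_eth, mcr_floor_eth)
        lower = max(self.mcr * (1 - MAX_STEP), self.day_start_mcr * (1 - MAX_DAILY))
        upper = min(self.mcr * (1 + MAX_STEP), self.day_start_mcr * (1 + MAX_DAILY))
        self.mcr = min(max(target, lower), upper)
        return self.mcr
```

Starting from 160,000 ETH with a much higher target, the MCR climbs 1% per update and stops at 168,000 ETH (+5%) for the rest of that day. It resumes on the next day.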

The capital model determines the minimum amount of funds the mutual needs to hold. The MCR is set using a methodology developed by the European Insurance and Occupational Pensions Authority (EIOPA). Its two main components are the Best Estimate Liability (BEL) and a Buffer. The BEL represents the expected loss on each individual cover. The Buffer refers to the funds the pool would need to survive a black swan event. For a more prudent estimate, the BEL for each cover currently corresponds to the entire Risk Cost, but it should later take into account the remaining duration of the cover.

The Smart Contract Cover Module is a component of the Buffer based on Nexus Mutual's exposure to the covers it has written. It takes into account three inputs: the total cover amount for each individual protocol and custodian ($CA(i)$), the correlation between each pair of contracts ($Corr(i, j)$), and a scaling factor ($SC$) calibrated to make the capital result more comparable to a full Solvency II calculation. It is calculated as follows:

$$CR_{scc} = SC \cdot \sqrt{\sum_{i,j} Corr(i,j) \cdot CA(i) \cdot CA(j)}$$
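The module is a correlation-weighted aggregation of exposures, shown below as a direct Python transcription. The cover amounts, the correlation matrices and the scaling factor of 1.0 in the example are illustrative values, not Nexus Mutual's calibration.

```python
import math


def cr_scc(cover_amounts: list[float], corr: list[list[float]],
           scaling_factor: float) -> float:
    """SC * sqrt(sum over i, j of Corr(i, j) * CA(i) * CA(j))."""
    n = len(cover_amounts)
    if len(corr) != n or any(len(row) != n for row in corr):
        raise ValueError("corr must be an n x n matrix")
    total = sum(corr[i][j] * cover_amounts[i] * cover_amounts[j]
                for i in range(n) for j in range(n))
    return scaling_factor * math.sqrt(total)


# Two covered protocols with 3,000 and 4,000 ETH of cover (illustrative numbers).
independent = cr_scc([3_000, 4_000], [[1, 0], [0, 1]], scaling_factor=1.0)
fully_correlated = cr_scc([3_000, 4_000], [[1, 1], [1, 1]], scaling_factor=1.0)
```

The example shows why correlations matter. With independent risks the charge is 5,000 ETH, the square root of the sum of squares. With perfectly correlated risks it is the plain sum, 7,000 ETH.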

Nexus Mutual holds and invests a Capital Pool of assets in excess of the MCR to back its covers. The coverage ratio (abbreviated to MCR%) is the ratio between the Capital Pool and the MCR.

Like traditional insurance companies, Nexus Mutual can invest in DeFi protocols using a conservative strategy, such as staking ETH to earn PoS rewards or lending assets on decentralized collateralised protocols. Nexus Investment posts an investment strategy proposal on the forum, and after community discussion the proposal is put to a vote.

However, once the Capital Pool is at or below the Minimum Capital Requirement, capital providers cannot withdraw their liquidity. This is a drawback and a reason for them to be more wary of providing capital to the protocol.

NXM Pricing and Tokenomics

The NXM token can only be purchased on the Nexus Mutual app, as it isn’t listed on exchanges. It uses a bonding curve (or continuous token model), meaning that tokens can be purchased at any time at variable prices. The price correlates with two quantities: the capital available to the mutual, and the capital required to pay out all claims with a certain probability. The main driver of short-term price movements is the funding level, which encourages users to buy when funding levels are low. In the long term, the capital required to support covers will rise, reflecting the adoption of the platform. The price (in ETH) is calculated as follows:

$$TP = A + \frac{MCR}{C} \times MCR\%^{4}$$

where A and C are constants that were calibrated at launch (A = 0.01028, C = 5,800,000).
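The price formula can be sketched in Python with the launch constants quoted above. We follow the Nexus Mutual v1 form, as we understand it, in which the MCR% term is raised to the fourth power. The pool and MCR values in the example are illustrative only. The redemption helper simply applies the 2.5% spread mentioned below.

```python
A = 0.01028        # launch constant
C = 5_800_000      # launch constant


def mcr_ratio(capital_pool_eth: float, mcr_eth: float) -> float:
    """MCR%: the Capital Pool divided by the MCR (1.0 means 100%)."""
    return capital_pool_eth / mcr_eth


def nxm_price_eth(capital_pool_eth: float, mcr_eth: float) -> float:
    """Bonding-curve price TP = A + (MCR / C) * MCR%^4, in ETH."""
    return A + (mcr_eth / C) * mcr_ratio(capital_pool_eth, mcr_eth) ** 4


def redemption_price_eth(capital_pool_eth: float, mcr_eth: float) -> float:
    """Sell price, 2.5% below the buy price (redemptions need MCR% above 100%)."""
    return 0.975 * nxm_price_eth(capital_pool_eth, mcr_eth)


mcr = 160_000.0
before = nxm_price_eth(capital_pool_eth=150_000.0, mcr_eth=mcr)
after_claim = nxm_price_eth(capital_pool_eth=140_000.0, mcr_eth=mcr)   # pool shrinks
```

Because MCR% enters with a high power, a paid claim that shrinks the Capital Pool lowers the token price noticeably. This is the recapitalization incentive described next.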

These tokens can be used to purchase cover and to participate in claims assessment, risk assessment, and governance. The model encourages an inflow of funds when required, raising capital as necessary. MCR% is the ratio between the Capital Pool (the mutual’s current funding level) and the MCR. So when the Capital Pool decreases, e.g. because a claim was paid, the token price falls too, which encourages purchases that recapitalize the fund. In the long term, the price is linked to the adoption of the protocol and not only to speculation. In addition, NXM can only be redeemed at 2.5% below the purchase price.

When cover is purchased with NXM, 90% of the member's NXM tokens are burned and 10% are kept. The kept tokens serve as a deposit when submitting a claim, or are returned to the cover purchaser if no claim is made.

NXM represents ownership of Nexus Mutual’s Capital Pool. Only members of the mutual can buy and sell NXM on the bonding curve, and becoming a member requires completing a KYC process. More recently, a wrapped version of the token that does not require KYC, wNXM, was introduced. It can increase the total number of holders, but it can also decrease the number of members. Members remain the only participants who can maintain price parity by exploiting arbitrage opportunities. wNXM is backed 1:1 by NXM, but because it trades on exchanges, it is subject to market forces.

However, the MCR% has been below 100% since December 2020, so redemptions are impossible. While redemptions are unavailable, the only way to sell NXM is to wrap it into wNXM and sell it on the market. At the time of writing, wNXM trades at one-third of the price of NXM. Anyone who wants to participate in Nexus Mutual has to buy NXM. To avoid losing a lot of money when later selling it, the only rational option is to buy wNXM on the market and unwrap it on the platform. The bonding curve is therefore effectively not being used at all, which the team confirmed. wNXM would only be pegged to NXM if MCR% > 100%.

There are three sources of value accrual to NXM: cover premiums, redemption fees, and investment earnings. When someone buys cover, the premium is split three ways:

- 40% goes to the Capital Pool without minting new NXM. This benefits all NXM holders by raising the MCR%, which increases the NXM price if MCR% > 100%.
- 50% also goes to the Capital Pool, but the corresponding NXM is minted and distributed to stakers. Their stake is partially or totally burnt if there are valid claims on the contract they staked on.
- The remaining 10% is kept by the cover holder. The corresponding NXM is minted and locked, and half of it is burnt if they decide to submit a claim. If the claim is denied and they wish to re-submit it, the other half is burnt.

If users buy cover in NXM, 50% goes to stakers directly as NXM and the 40% accruing to the Capital Pool is burnt. This leaves less NXM in circulation, producing the same net effect.

When NXM is sold on the platform, a redemption fee of 2.5% goes into the Capital Pool in the form of ETH. However, as redemptions have been unavailable for a long period, this fee is currently irrelevant.

It would be in the protocol’s best interest to keep MCR% above 100%. However, this has not been achieved for most of the protocol's history, which raises the question of whether other incentives are needed to increase the amount deposited in the Capital Pool. Investment earnings also go directly into the Capital Pool, so there may be room for improvement there.

Nexus tokenomics create a positive loop. More insurance policies bought means more demand for NXM and more revenue for cover providers, which incentivizes more NXM staking. More mutual members means more demand for NXM. A more decentralized mutual leads to more value staked in NXM by claim assessors. The MCR determined by the Capital Model is calibrated to achieve a 99.5% probability of solvency over one year.

The Advisory Board is a central point of the Nexus Mutual protocol and comprises only five members. It has too much power. It can trigger an emergency pause that stops all transactions, burn claim assessors' staked NXM if it finds them fraudulent, and influence claim decisions.

Adoption and TVL

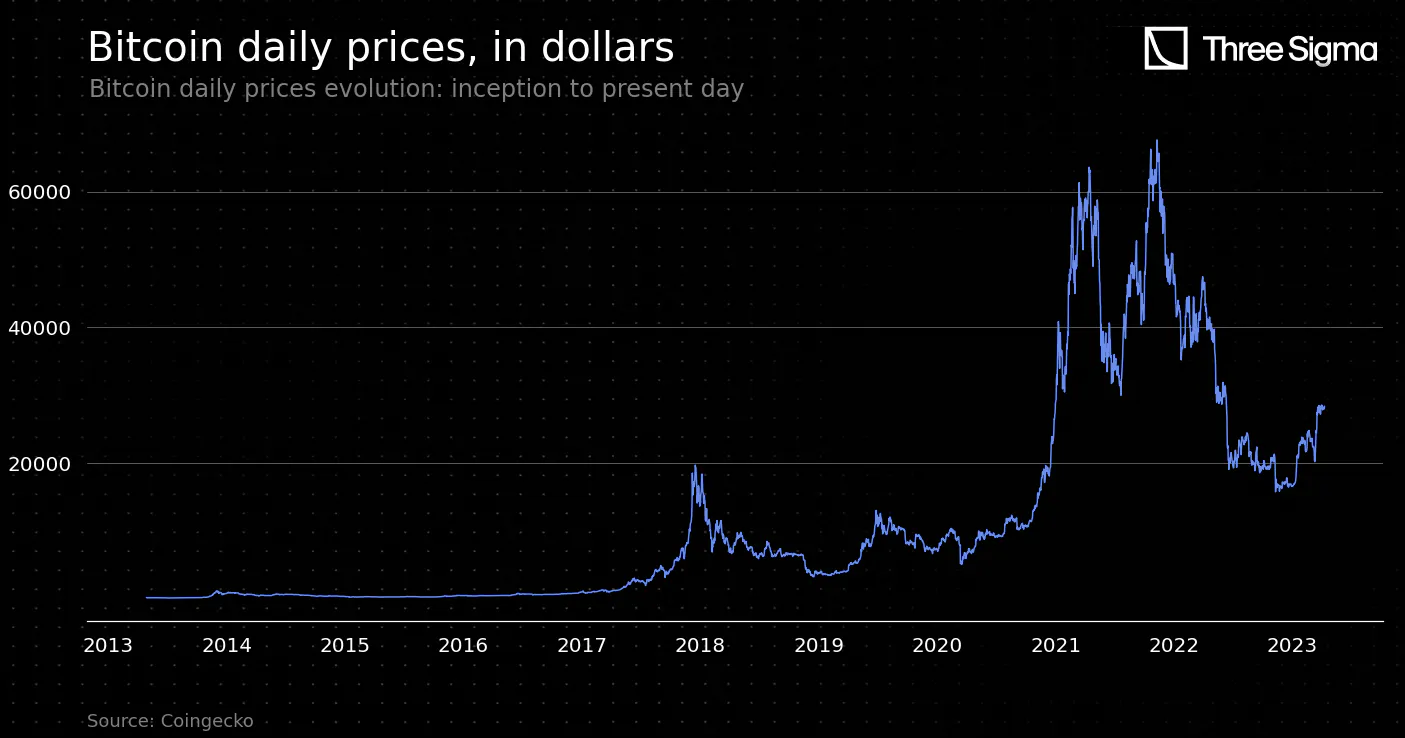

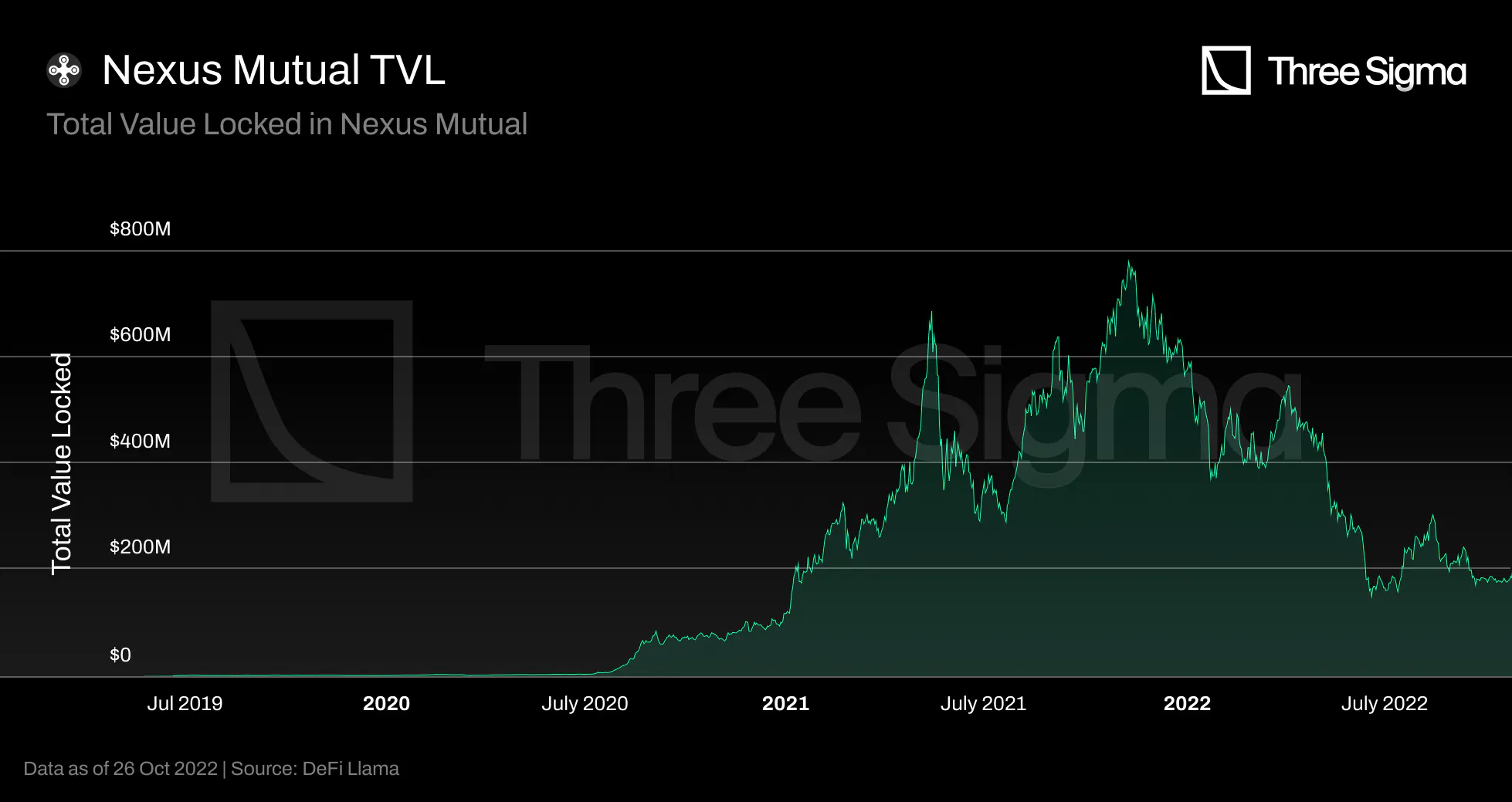

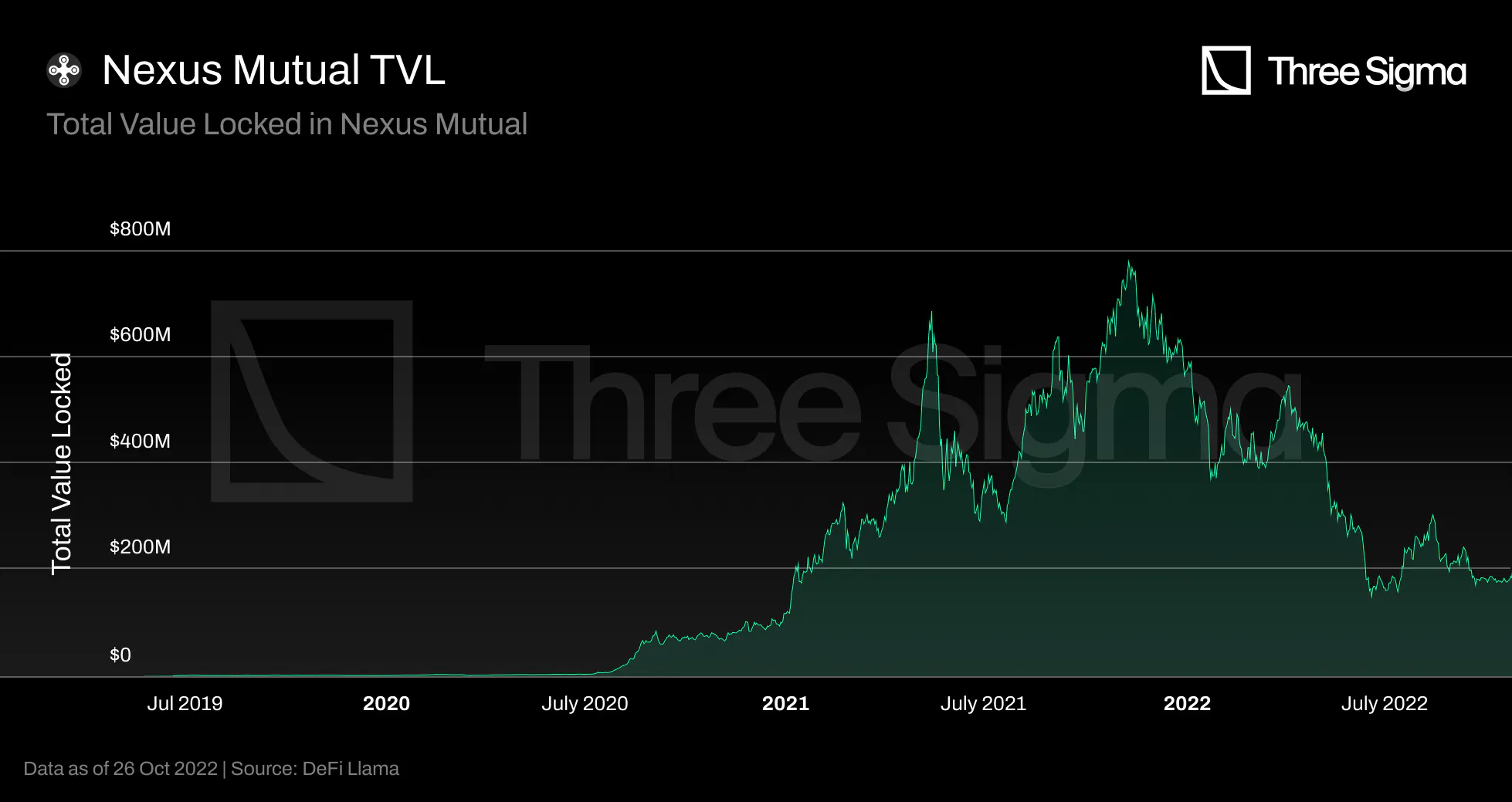

Nexus Mutual's capital pool (TVL) grows whenever a new insurance policy is purchased, the investment pools generate positive income, or NXM is purchased. Conversely, the pool shrinks whenever a payout is made, the Investment Fund incurs a loss, or NXM is burned. The TVL of Nexus Mutual grew from \$1.59 million at the start of 2020 to a peak of \$780 million on November 9, 2021, an increase of 490x. Since then, however, the broader crypto market has descended into a bear market. Nexus Mutual is no exception: its TVL has fallen by approximately 76.5% to \$183 million in October 2022. The value locked in Nexus Mutual is a negligible portion of the total value in the DeFi market, which leaves a massive amount of risky value unprotected.

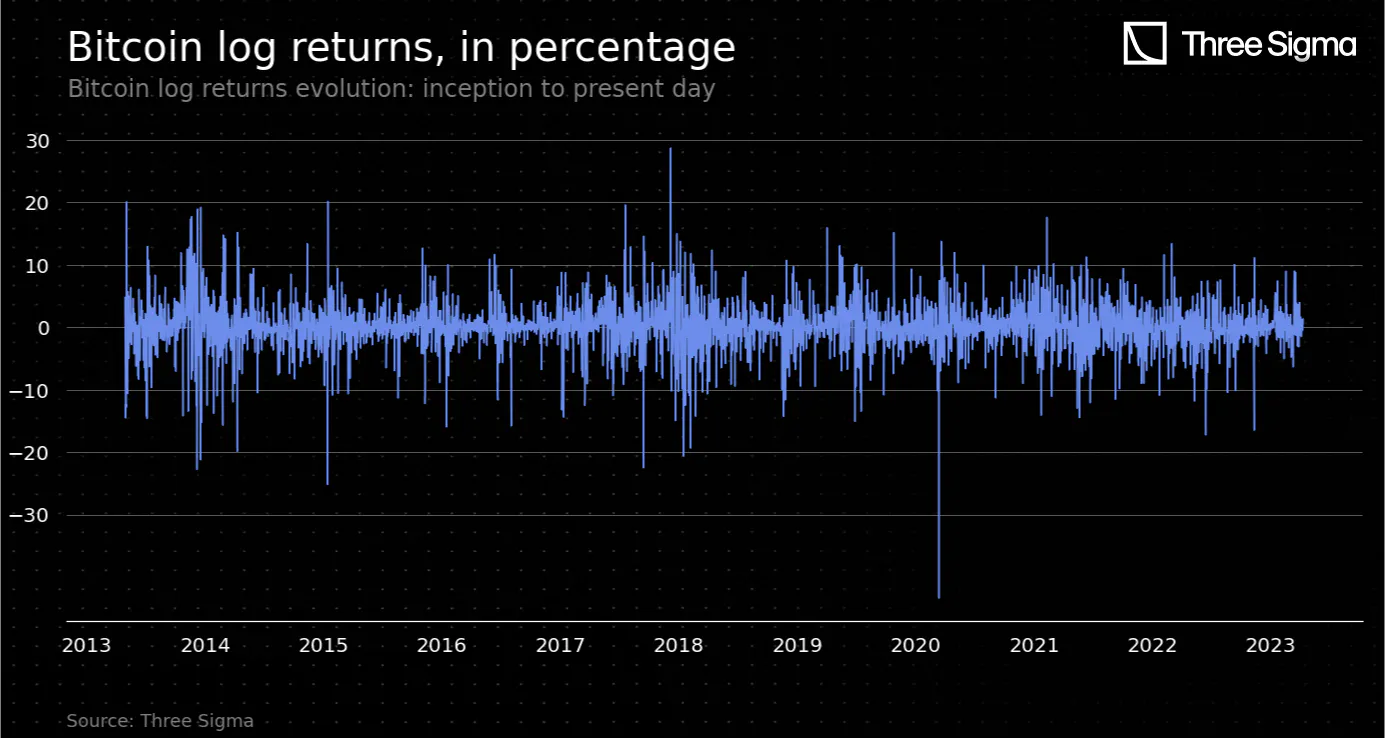

When the crypto market is up and near its ATH, DeFi protocols see significant daily volume and are exposed to more risk, so demand for protection may increase. Conversely, less demand for DeFi means less demand for insurance coverage and less revenue for insurance providers. Lower demand also affects TVLs, and the lower the TVL, the lower the capacity limit for covering policies. During bear markets, when capital pools generate less revenue, cover providers have fewer reasons to invest their funds.

TVC

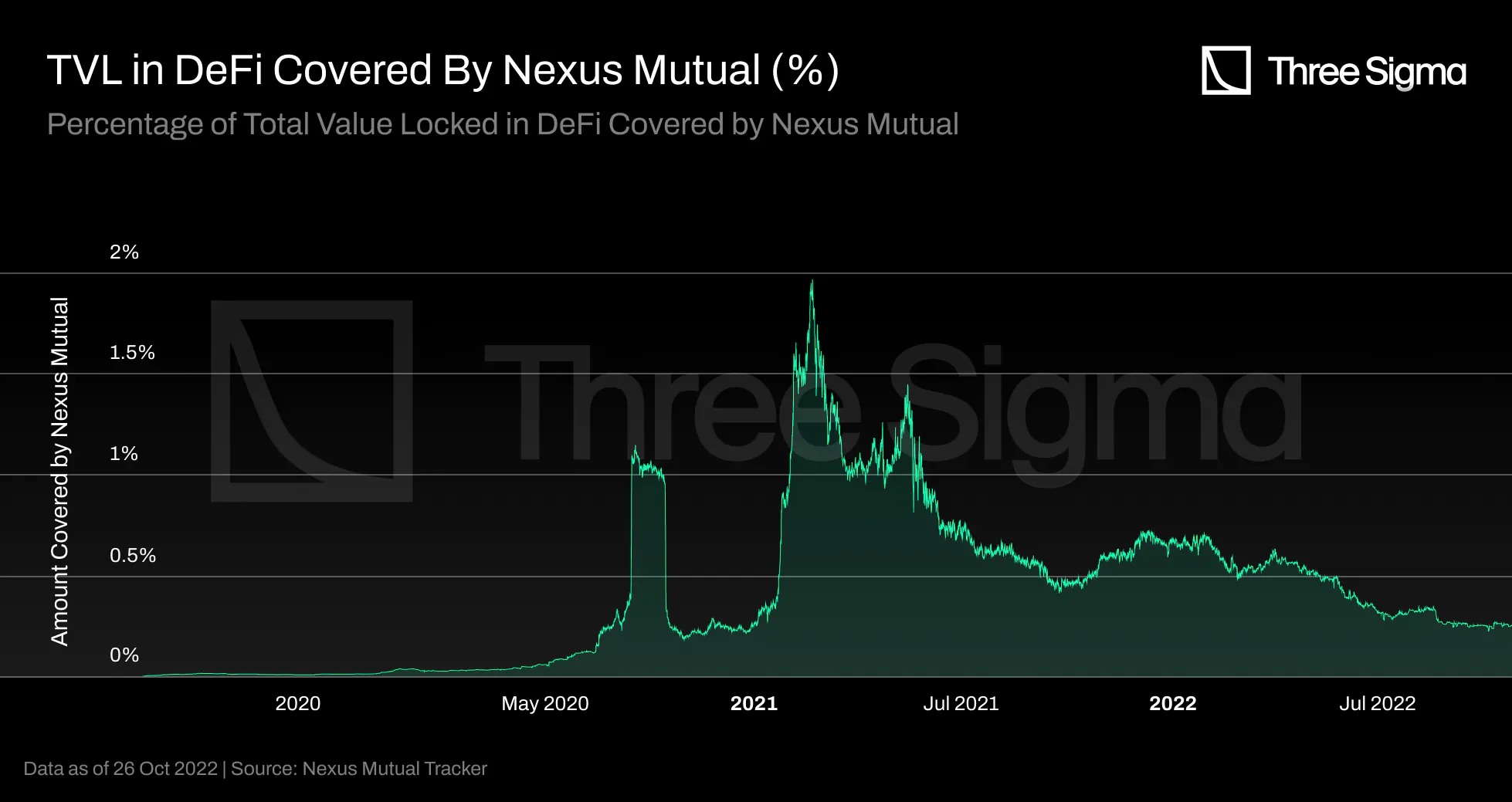

Nexus is the insurance protocol with the highest TVL, yet its Total Value Covered (TVC) accounts for only a small portion of DeFi's TVL. Even during a bull market with plenty of liquidity, Nexus' TVC ATH represented less than 2% of the total DeFi market. These figures indicate considerable growth potential for the decentralized insurance market.

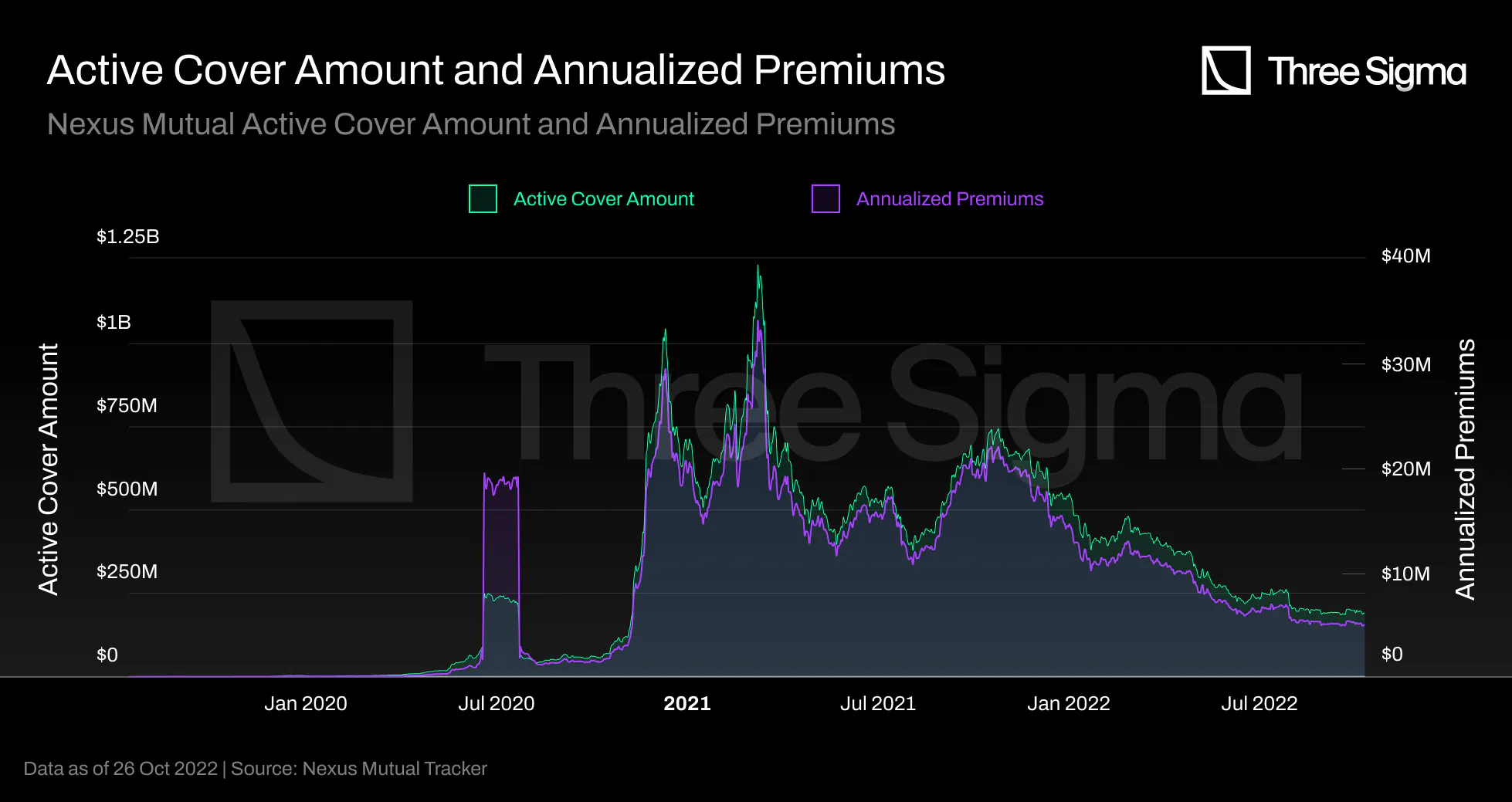

Revenue

Currently, the premium is paid in full when the policy is purchased, and it covers a fixed term that the cover buyer selects. When a user pays the cover cost, 50% goes to stakers, 10% is held as the buyer's cover deposit, and 40% is kept in the Capital Pool.
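The premium split can be expressed as a small function. This sketch uses the 50/10/40 shares quoted in this section. It models the claim deposit as half of the cover-holder portion, since half is burnt when a claim is first submitted and the other half only if a denied claim is re-submitted. Names are hypothetical, and amounts stay in a single unit rather than being converted to NXM.

```python
from dataclasses import dataclass

STAKER_SHARE = 0.50
COVER_NOTE_SHARE = 0.10   # held for the cover buyer's claim deposit
# The remaining 40% stays in the Capital Pool.


@dataclass(frozen=True)
class PremiumSplit:
    stakers: float
    cover_note: float
    capital_pool: float


def split_premium(premium: float) -> PremiumSplit:
    """Divide one premium between stakers, the cover note and the Capital Pool."""
    stakers = premium * STAKER_SHARE
    cover_note = premium * COVER_NOTE_SHARE
    # Taking the pool's share as the remainder keeps the three parts summing
    # to the premium (up to floating-point rounding).
    return PremiumSplit(stakers, cover_note, premium - stakers - cover_note)


def claim_deposit(split: PremiumSplit) -> float:
    """Half of the cover note is put at stake when a claim is first submitted."""
    return split.cover_note / 2
```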

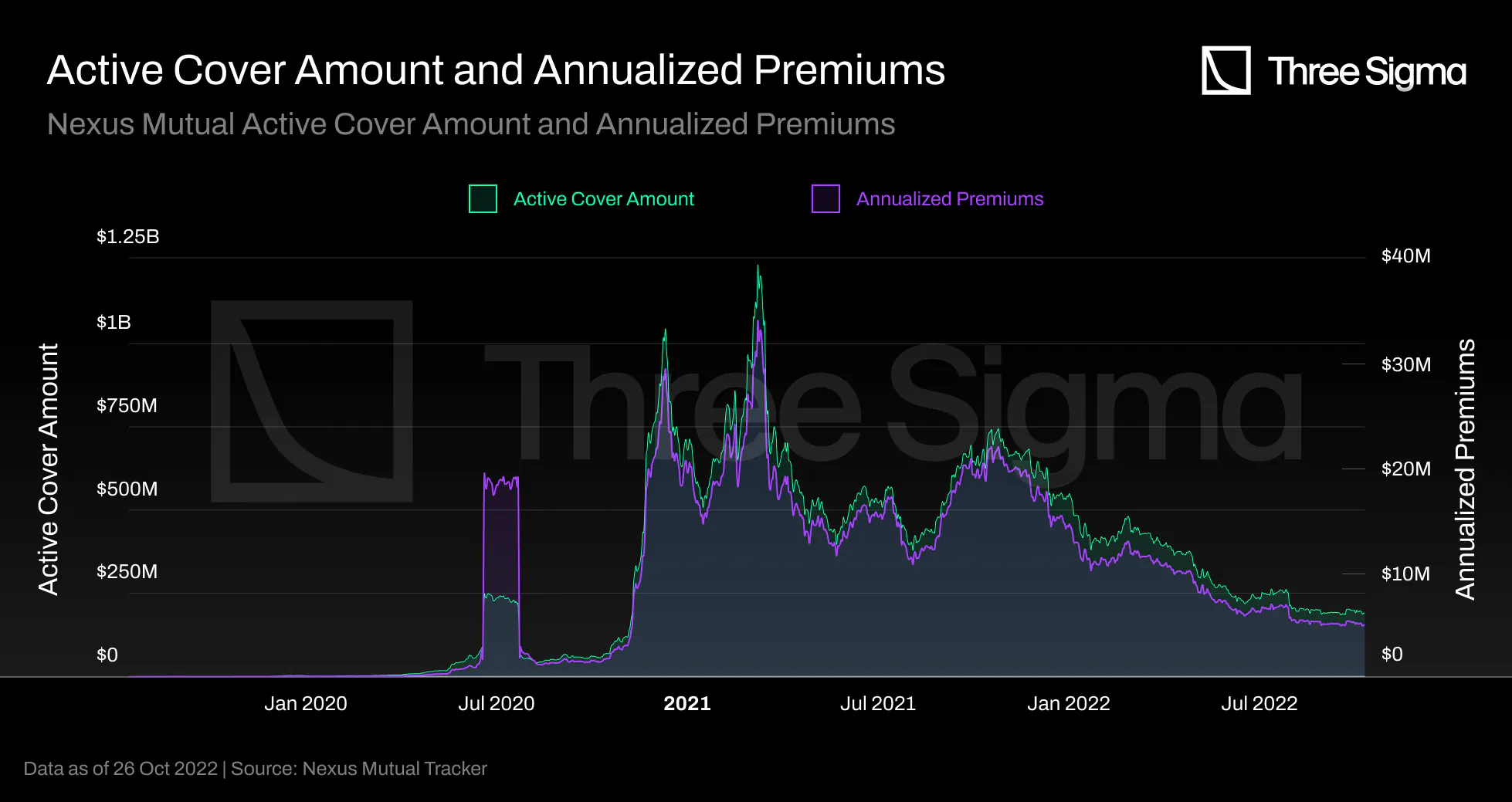

These graphs show similar behavior on quite different scales. Nexus' cover price formula is based on the cover amount, cover period, and risk cost, so there is a direct relationship between cover amount and cover pricing, which explains the similarity. As the Total Value Locked in DeFi Covered rises, so do the Annualized Premiums In-Force.

The Active Cover Amount is always more than an order of magnitude larger than the Annualized Premiums In-Force. This is natural, as users only pay a small percentage of the coverage requested.

A larger capital pool (TVL) allows more insurance policies to be sold and increases revenue for stakers and the Capital Pool. With V2, users can purchase a monthly policy and extend it as long as there is capacity.

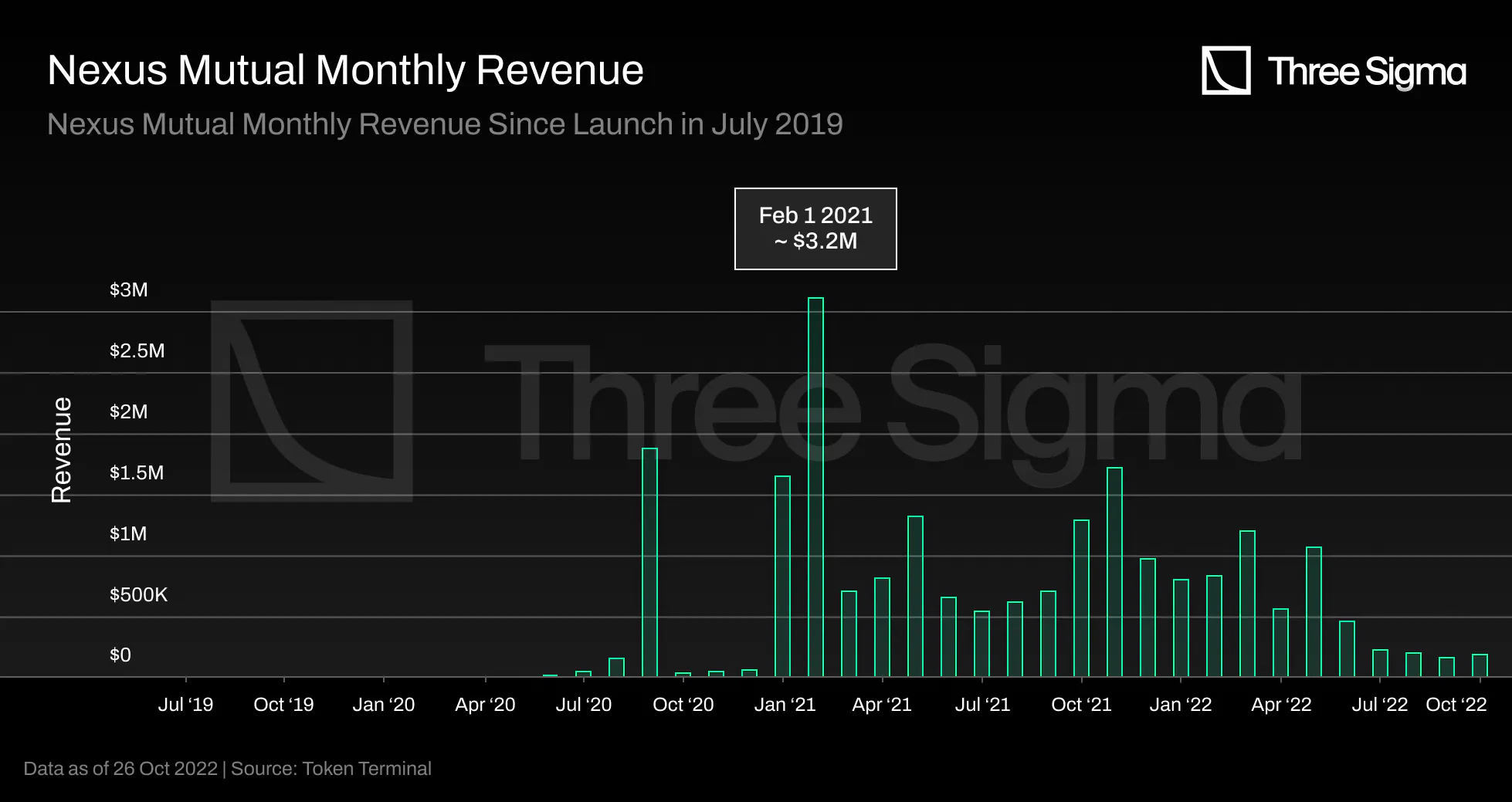

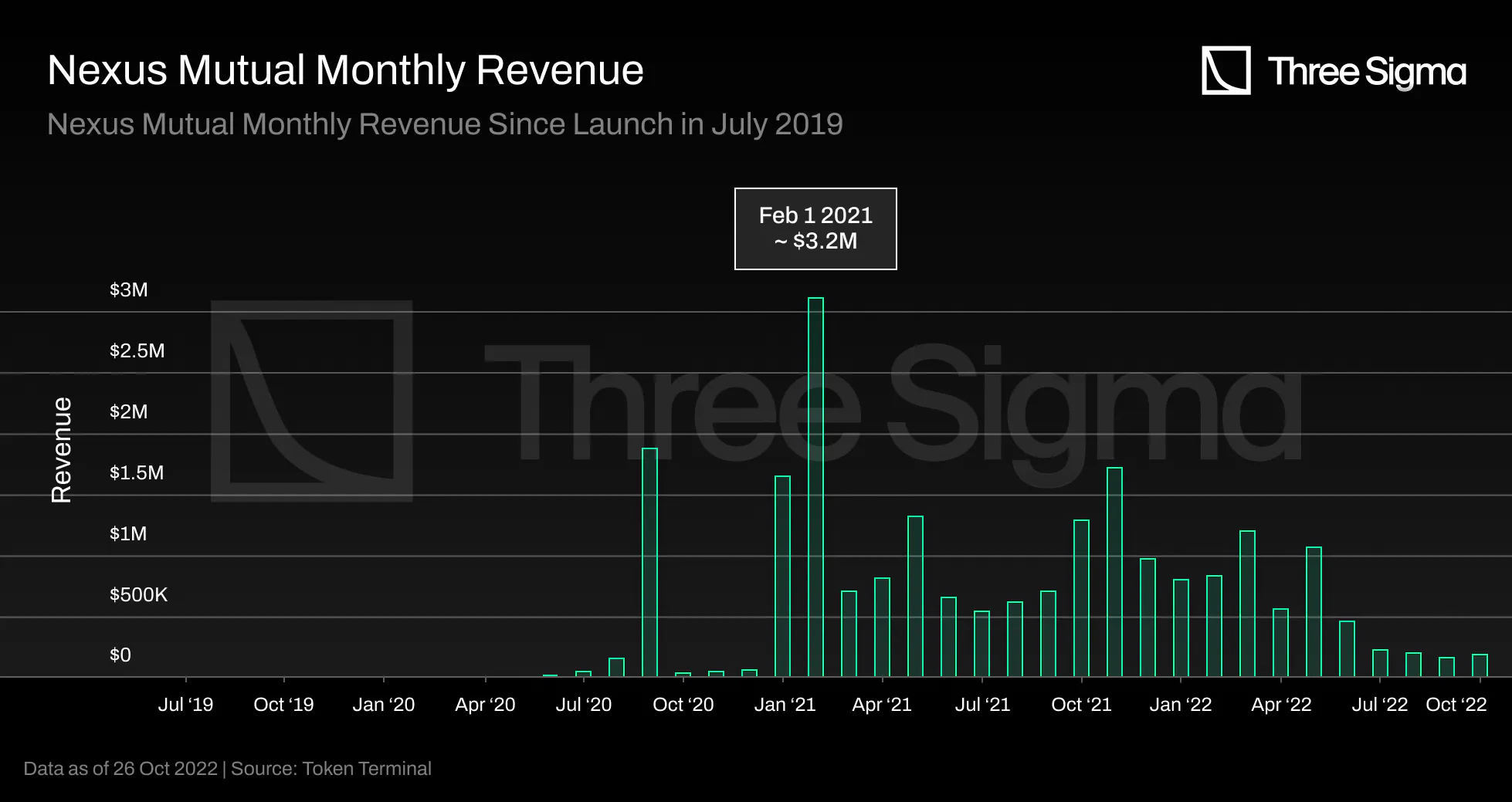

Nexus Mutual started earning revenue in May 2020, one year after its launch, with just over \$2,000 in monthly revenue. Monthly revenue peaked at \$3.16 million in February 2021, during the bull market, and averaged \$1.2 million per month during 2021. Over the past three months, however, protocol revenue has declined steeply because of market conditions, averaging just over \$210,000 per month.

This chart only considers the fees charged to Mutual members, not investment earnings, which we investigate later. Because these fees are paid in advance, the chart reflects Nexus’ monthly activity, such as the number of new members paying membership fees or the number of cover policies purchased.

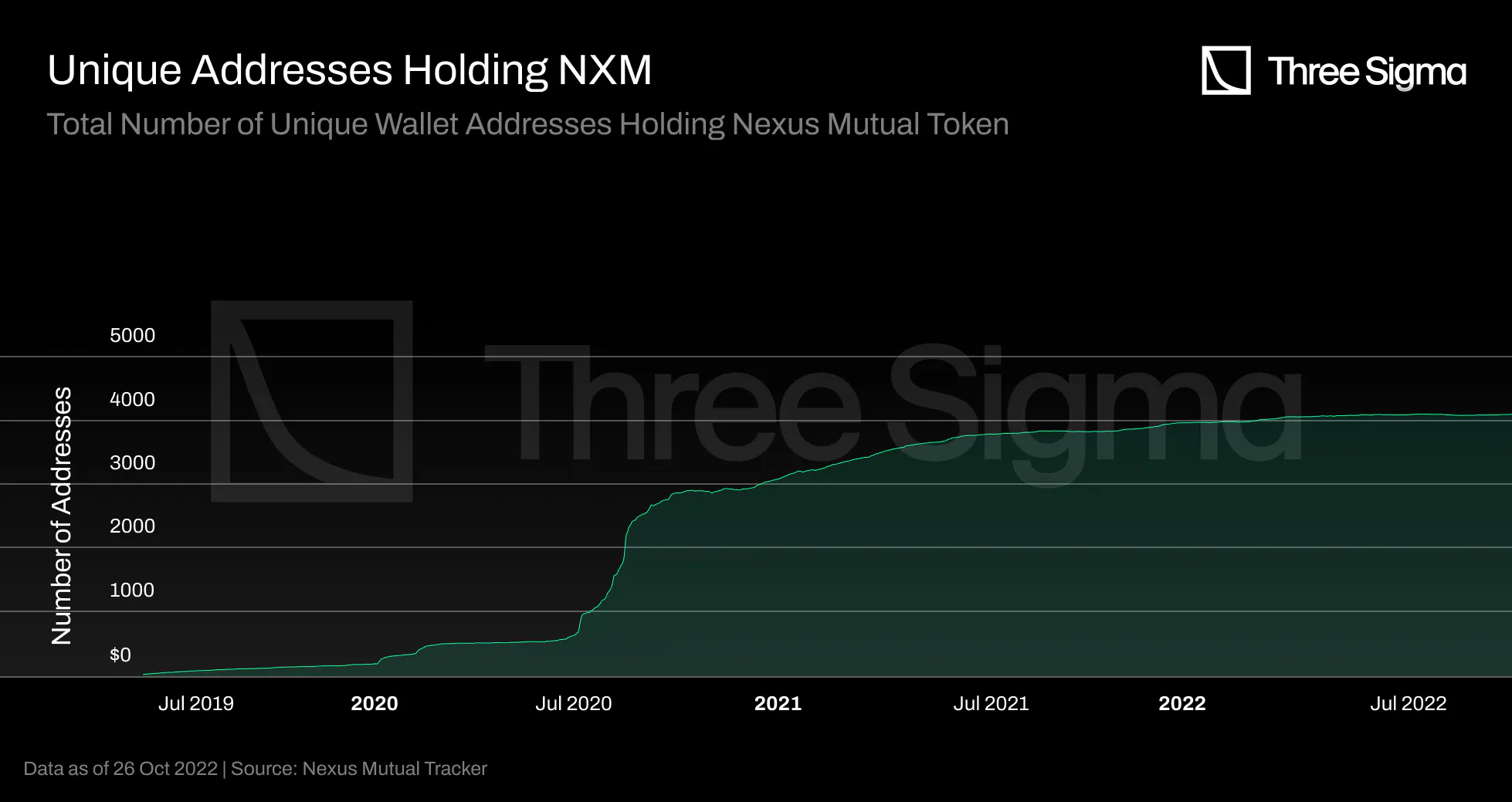

Tracking the growth and daily activity of Mutual members will be a key indicator of future economic

activity on Nexus, as they are the only users who can buy coverage and generate revenue outside of

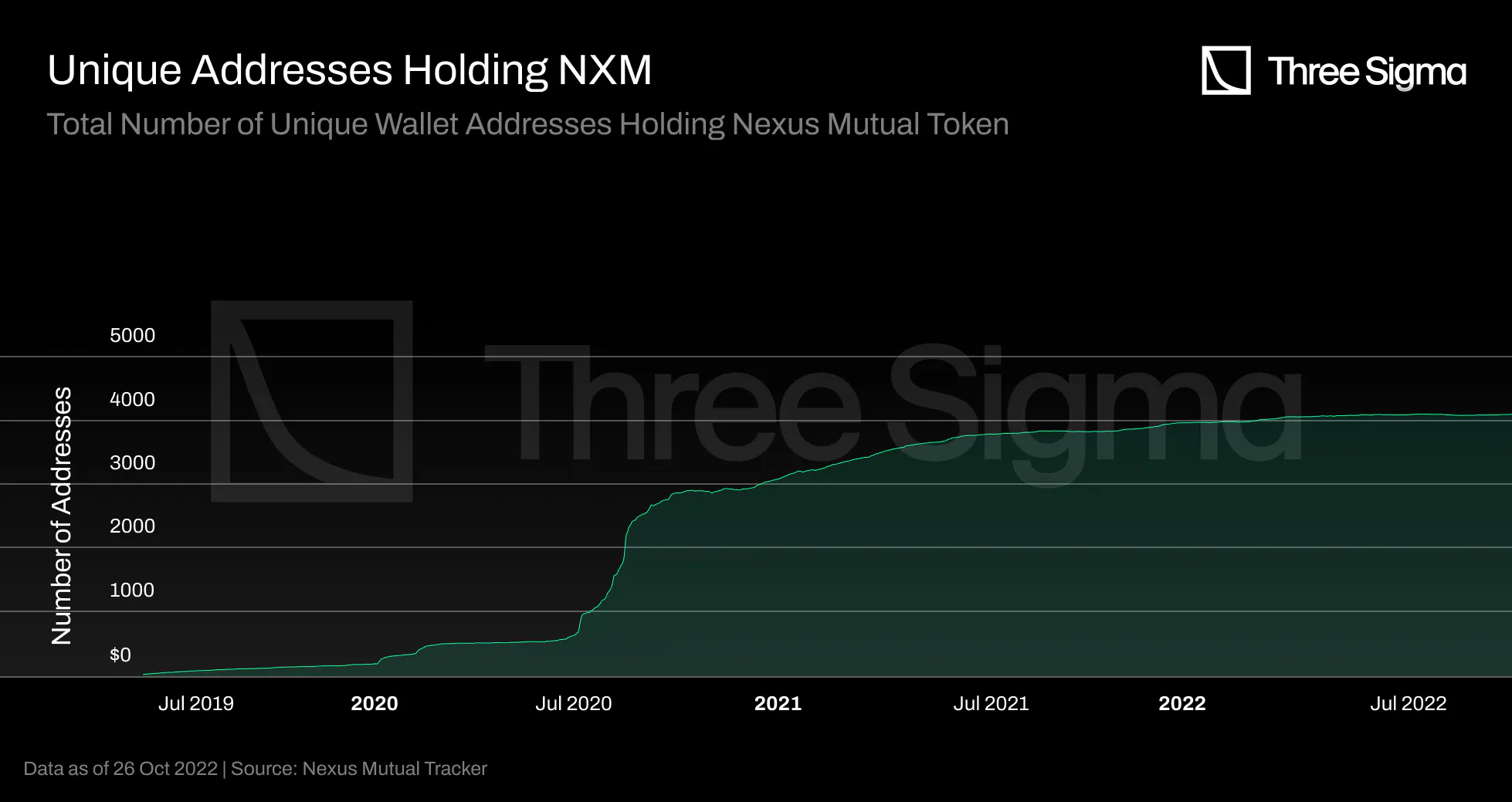

investment income. In 2022, the number of unique addresses is still increasing, but at a slower rate,

and this could be due to macroeconomic factors.

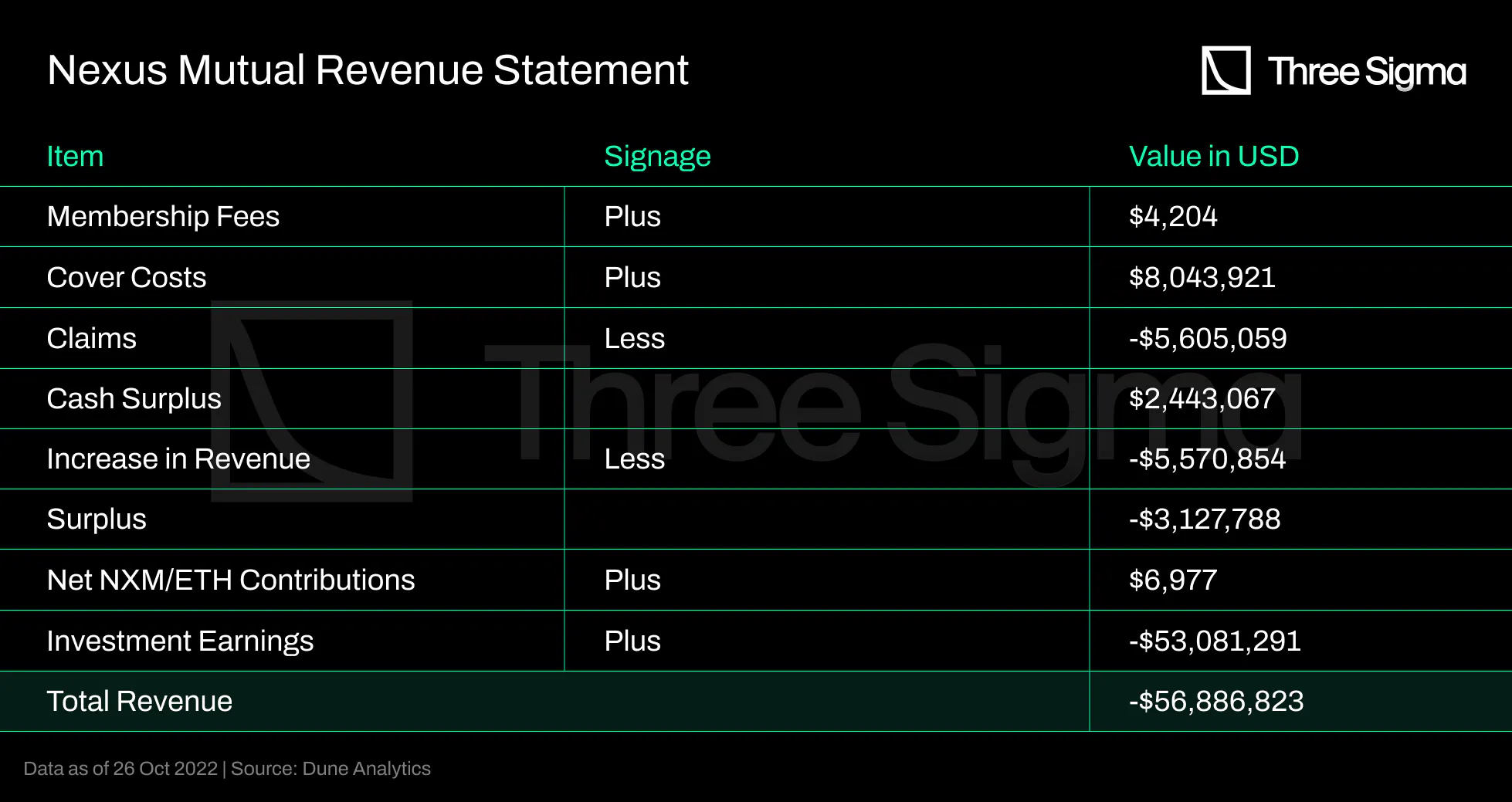

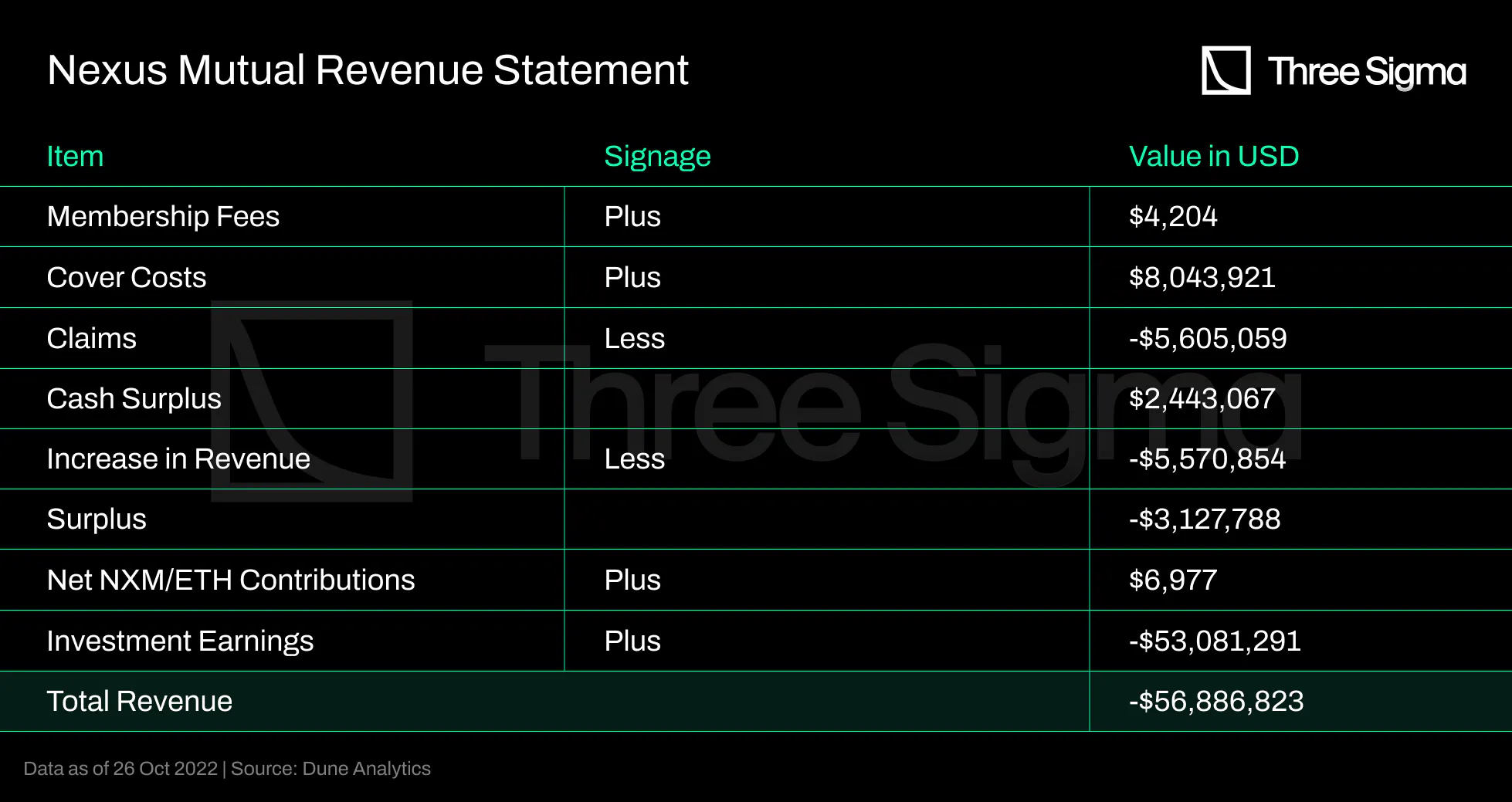

Membership fees and cover costs are the Mutual's primary revenue, offset by claim payments. Note that investment earnings can fluctuate depending on the time period used and on market sentiment. Current macro conditions are behind the large negative amount now appearing in the financials. Insurers are expected to generate more revenue as more insurance policies are sold.

Final Thoughts

Nexus Mutual pioneered the Staker-as-Underwriter model, the most common DeFi insurance business model. In this model, the underwriter (capital provider) controls the claims process, which creates a conflict of interest that allows legitimate claims to be denied. In addition, Nexus Mutual compels capital providers to speculate on risk instead of relying on data.

Token holders assume the inherent risk by providing capital to separate underwriting pools for covered protocols. This requires users to perform due diligence on each protocol, while most capital providers simply seek higher APYs, which can distort the risk cost.

The model performs well when no claims are submitted, but it begins to fall apart when cover providers want to withdraw their funds.

InsurAce

InsurAce was launched in November 2020 with "0" premium pricing (ultra-low premiums close to 0%, powered by its dynamic pricing model), no-KYC wallet-based access, cross-chain coverage, and a first-of-its-kind portfolio-based design that allows users to cover a basket of protocols. It launched on Ethereum mainnet in April 2021 and subsequently expanded to chains such as BNB Chain, Polygon, and Avalanche, giving users access to a multi-chain world.

InsurAce provides cover for risks including smart contract vulnerabilities, stablecoin de-pegs, IDO risk, and custodian risk, through its portfolio-based coverage and customized bundled covers.

At launch, InsurAce provided two services: an insurance module and an investment module. To achieve its "ultra-low premiums", the insurance module allows funds from the capital pool to be placed in the investment pool to earn a higher yield. The investment module's yield in turn helps lower insurance premiums and reduce coverage costs for users.

InsurAce Participants

There are three types of roles in InsurAce: the Investor, the Insurer and the Insured.

The investment arm is still under development. The Insurer stakes ETH, DAI, and other assets in an aggregated pool and earns investment income, cover premiums, and INSUR rewards. In V1, insurers are rewarded exclusively with INSUR tokens; the plan is to share cover premiums with them in V2.

The Insured purchases insurance products and earns INSUR rewards and claim rights.

Cover Pricing

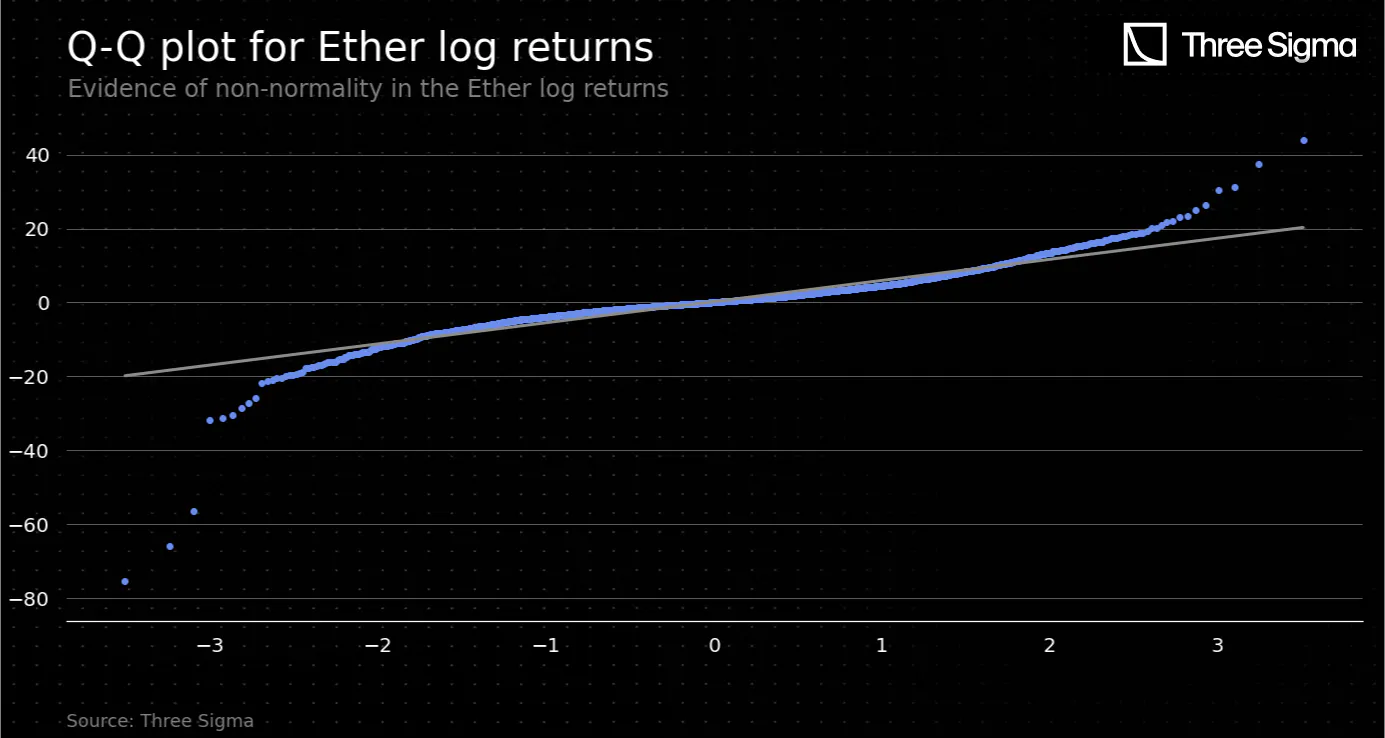

The InsurAce Protocol team argues that a staking-driven price structure, like the one Nexus Mutual uses, fails to properly assess a protocol's real risks, causing cover providers to overcharge for cover when few funds are staked. This led them to adopt a Dynamic Pricing model with a minimum and a maximum price: the minimum is a base premium, the maximum is three times this base premium, and the premium varies between the two. The more cover is sold, the higher the premium, and vice versa.

For each product, the premium for the first 65% of total capacity remains unchanged, equal to the base premium; beyond that point, the premium increases following the dynamic pricing model. The base premium is calculated from the aggregate loss distribution model and the protocol's risk factors. The aggregate loss distribution model is an actuarial model that combines frequency and severity (based on the number of claims and exposures for a protocol in a given time period), and it is used to calculate the expected loss at the portfolio level.
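The flat-then-rising shape can be sketched in a few lines of Python. The 65% threshold and the 3x cap come from the description above. The linear ramp between them, the function name, and the 2% base premium in the usage lines are assumptions for illustration, since InsurAce's exact curve above the threshold is not given here.

```python
def dynamic_premium(base_premium: float, utilization: float,
                    kink: float = 0.65, max_multiple: float = 3.0) -> float:
    """Annual premium rate for one product at a given capacity utilization.

    From the text: flat at the base premium up to 65% of capacity, capped at
    3x the base premium. ASSUMPTION: the ramp between the two is linear.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    if utilization <= kink:
        return base_premium
    # How far utilization has moved from the kink towards full capacity (0..1).
    progress = (utilization - kink) / (1.0 - kink)
    return base_premium * (1.0 + (max_multiple - 1.0) * progress)


# Hypothetical 2% base premium at three utilization levels.
for u in (0.50, 0.825, 1.00):
    print(f"utilization {u:.1%}: premium {dynamic_premium(0.02, u):.2%}")
```

Under these assumptions the premium stays at 2% up to 65% utilization, reaches 4% halfway to full capacity (82.5%), and hits the 6% cap at 100%.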

The main inputs are the number of claims and the exposures in a given time period. These are used to select and train two separate models: a frequency model and a severity model. Frequency modeling calibrates the probability of a given number of losses occurring during a specific period. Severity modeling produces the distribution of loss amounts and sets the deductible and the limit of the coverage amount. The two models are combined to determine the aggregate loss, which is incorporated into the protocol's risk factors, and from these the base price of each protocol is calculated.
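As an illustration of how a frequency model and a severity model combine into an aggregate loss distribution, here is a minimal Monte Carlo sketch. It assumes a Poisson claim count and lognormal claim sizes. These are common textbook choices, not InsurAce's published specification, and all parameter values are hypothetical. The mean of the simulated totals is the expected loss. A high quantile (99.5% here, echoing the confidence level of Solvency II's SCR) gives a rough sense of tail risk.

```python
import math
import random
import statistics


def sample_poisson(rng: random.Random, lam: float) -> int:
    """Knuth's multiplication method; adequate for small claim-count means."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def simulate_aggregate_loss(freq_mean, sev_mu, sev_sigma, deductible, limit,
                            n_sims=20_000, seed=0):
    """Monte Carlo draws of one period's aggregate loss for one protocol.

    Frequency ~ Poisson(freq_mean); severity ~ LogNormal(sev_mu, sev_sigma).
    Each claim pays its loss above the deductible, capped at the limit.
    """
    rng = random.Random(seed)
    totals = []
    for _ in range(n_sims):
        total = 0.0
        for _ in range(sample_poisson(rng, freq_mean)):
            loss = rng.lognormvariate(sev_mu, sev_sigma)
            total += min(max(loss - deductible, 0.0), limit)
        totals.append(total)
    return totals


def summarize(totals, level=0.995):
    """Expected aggregate loss and a simple empirical quantile of it."""
    ordered = sorted(totals)
    idx = min(int(level * len(ordered)), len(ordered) - 1)
    return statistics.fmean(ordered), ordered[idx]


# Hypothetical inputs: 0.3 claims per year, median claim of about 1M (e^13.8).
totals = simulate_aggregate_loss(0.3, 13.8, 1.2, deductible=10_000, limit=5_000_000)
expected, q995 = summarize(totals)
```

With only a handful of historical claims per protocol, estimates such as `expected` and `q995` are highly sensitive to the chosen distributions and parameters.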

The models' parameters are based on historical data, which can be difficult to find in DeFi. More often than not, an exploit or hack serious enough to trigger an insurance claim marks the end of that particular protocol, so the data retrieved is not directly useful in the future. Machine learning algorithms could make use of such data in aggregate once many data points are available, but doing so is potentially dangerous while the sample remains small.

Pricing is not on-chain. This is common among DeFi insurance protocols, but it is clearly an important area for improvement in the sector. While pricing remains off-chain, users cannot see why and when prices change, and they must trust the team, because price manipulation remains possible.

Capital Model

InsurAce's capital model follows EIOPA's Solvency II, the EU prudential regime for insurance undertakings, in line with Nexus Mutual. This regime defines two tiers of capital requirements: the Solvency Capital Requirement (SCR) and the Minimum Capital Requirement (MCR). The SCR is the capital required to ensure the fund can meet its obligations over the next 12 months with a probability of at least 99.5%. The MCR is less restrictive and refers to the capital required to meet the same obligations over the same period with a probability of at least 85%.

InsurAce uses the SCR, as opposed to the MCR used by Nexus Mutual, as the capital standard for calculating the minimum amount of funds to reserve for potential claims. The SCR takes into account all active covers, all outstanding claims, potential incurred-but-not-reported claims, market currency shock risk, non-life premium and reserve risk, lapse and catastrophe risks, and potential operational risk. It is calculated daily off-chain, and the team reviews it and updates the on-chain value when there is a noticeable difference.

The capital pool is made up of funds from the mining pools, cover payments, and the investment pool (all governed by INSUR token holders). Analogous to the MCR% used by Nexus Mutual, InsurAce uses the SCR%, the ratio of the capital it has available to its SCR. Also known as the Capital to Risk Assets Ratio, it is calculated as the capital pool size divided by the SCR. The lowest acceptable ratio is 100%, which occurs when there are exactly enough funds to cover the SCR.

The Capital Efficiency Ratio (CER%) measures short-term success in deploying capital and corresponds to the output per unit of capital deployed. InsurAce calculates it as the active cover amount divided by the capital pool size. InsurAce targets a CER% between 100% and 300%, a range it considers a sign of high productivity with moderate risk exposure.
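Both ratios are simple quotients, so a short sketch is enough to show how they are read together. The class and field names are hypothetical. The figures (in millions) are invented so that SCR% comes out near the 238% quoted for InsurAce; they are not InsurAce's actual balances.

```python
from dataclasses import dataclass


@dataclass
class CapitalSnapshot:
    capital_pool: float  # funds available to pay claims
    scr: float           # Solvency Capital Requirement (computed off-chain)
    active_cover: float  # total cover currently in force

    def scr_ratio(self) -> float:
        """SCR% = capital pool / SCR; 1.0 (100%) means exactly enough capital."""
        return self.capital_pool / self.scr

    def cer(self) -> float:
        """CER% = active cover / capital pool."""
        return self.active_cover / self.capital_pool

    def healthy(self) -> bool:
        """Solvent (SCR% >= 100%) with CER% inside the 100%-300% target band."""
        return self.scr_ratio() >= 1.0 and 1.0 <= self.cer() <= 3.0


# Hypothetical figures, in millions of USD.
snap = CapitalSnapshot(capital_pool=50.0, scr=21.0, active_cover=100.0)
print(f"SCR% = {snap.scr_ratio():.0%}, CER% = {snap.cer():.0%}, healthy = {snap.healthy()}")
```

Reading the two together matters: a pool can be comfortably solvent (high SCR%) yet idle (low CER%), or productive but thinly capitalized.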

At the time of writing, Nexus Mutual has an MCR% of 94%, while InsurAce has an SCR% of 238%. The SCR and MCR are very similar metrics, with the SCR being the stricter (i.e., safer) of the two, but the way they are calculated can differ. Both InsurAce and Nexus Mutual run these calculations off-chain, so it is difficult to check whether the same standards are upheld.

Tokenomics

INSUR tokens serve as voting rights in governance votes such as claim assessment, as mining incentives for capital providers in both the mining pool and investment products, as a way to earn fees generated by InsurAce.io through governance participation, and as other ecosystem incentives. More use cases are expected as the protocol develops.

Users who stake tokens on the platform earn INSUR token rewards, a process the InsurAce Protocol refers to as mining. Mining by staking in either InsurAce's Cover Arm or Investment Arm is governed by the following equation:

$$\mathrm{Speed}(\mathrm{Investment}) + \mathrm{Speed}(\mathrm{Cover}) = C$$

where $C$ is determined by the token economy over time, ensuring a balance between the Cover and Investment arms.

For the capital pools in the Cover Arm, mining speed is determined by the protocol's SCR%. When the pools are insufficient to meet the SCR, the Cover Arm's mining speed increases to attract more capital, helping InsurAce lower its prices and reduce insolvency risk. Within the Cover Arm, pools with less capital staked have their mining speed adjusted upward to attract more capital. Once the SCR is met, this reverts to normal and the Investment Arm's mining speed increases to attract more funds.
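The arm-level budget can be illustrated with a small sketch. It keeps Speed(Cover) + Speed(Investment) equal to $C$ while favoring the Cover Arm when SCR% is below 100%. The two-regime rule and the 50%/80% shares are hypothetical simplifications, not InsurAce's actual emission schedule, which also adjusts speeds pool by pool.

```python
from typing import Tuple


def split_emission(c_total: float, scr_ratio: float,
                   normal_cover_share: float = 0.5,
                   boosted_cover_share: float = 0.8) -> Tuple[float, float]:
    """Split a fixed INSUR emission budget C between the two arms.

    Returns (speed_cover, speed_investment); they always sum to c_total,
    matching Speed(Investment) + Speed(Cover) = C. The two share values are
    HYPOTHETICAL: the Cover Arm simply gets the boosted share while SCR% < 100%.
    """
    share = boosted_cover_share if scr_ratio < 1.0 else normal_cover_share
    speed_cover = c_total * share
    return speed_cover, c_total - speed_cover


print(split_emission(1_000.0, scr_ratio=0.9))   # under-capitalized: Cover boosted
print(split_emission(1_000.0, scr_ratio=1.4))   # SCR met: back to the normal split
```

Because the total is fixed, boosting one arm necessarily slows the other. This is the balancing role the constant $C$ plays.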

More formally, the speed for pool $i$ is determined as follows:

where $S_i$ is the number of tokens staked in a cover capital pool at time $t$, $S_{max}$ is the number of tokens staked in the largest pool at time $t-1$, whose mining speed is $S_{min}$, and $\lambda$ is the speed scale.

INSUR tokens can be bought on centralized and decentralized exchanges and bridged to and from any of the networks the protocol operates on.

Because INSUR is a governance token without direct utility, recipients of mining rewards have little reason to hold it, which creates sell pressure that can lower its value. This could change if more use cases are introduced.

Claim Assessment

The InsurAce claims process is similar to Nexus'. A user may submit a claim within 30 days, and no later than 15 days after the coverage has expired. Once a claim is submitted, the Advisory Board investigates it based on the proof of loss and other publicly available information. It then shares a Claim Report with its findings and conclusion with the community. A vote among claim assessors (INSUR stakeholders) follows, which requires a consensus of more than 75% to be valid. If the vote is invalid, the Advisory Board evaluates the situation and makes its own decision. The user may appeal a rejected claim for a fee of 1% of the rejected claim amount. The appeal, however, is handled by the Advisory Board, which has sole authority to make the final determination.
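The claim flow above reduces to a small decision rule, sketched below. It assumes votes are weighted by INSUR stake and that the 75% threshold is measured against the stake that actually voted. The enum and function names are hypothetical, and the appeal itself (decided by the Advisory Board) is not modeled.

```python
from enum import Enum

CONSENSUS = 0.75        # a vote needs MORE than 75% agreement to be valid
APPEAL_FEE_RATE = 0.01  # an appeal costs 1% of the rejected claim amount


class Outcome(Enum):
    ACCEPTED = "accepted"
    REJECTED = "rejected"
    ADVISORY_BOARD = "advisory board decides"


def vote_outcome(stake_for: float, stake_against: float) -> Outcome:
    """Resolve a claim vote. ASSUMPTION: votes are stake-weighted and the 75%
    threshold is measured as a share of the stake that actually voted."""
    total = stake_for + stake_against
    if total == 0:
        return Outcome.ADVISORY_BOARD
    if stake_for / total > CONSENSUS:
        return Outcome.ACCEPTED
    if stake_against / total > CONSENSUS:
        return Outcome.REJECTED
    # No supermajority either way: the vote is invalid.
    return Outcome.ADVISORY_BOARD


def appeal_fee(rejected_amount: float) -> float:
    return rejected_amount * APPEAL_FEE_RATE


print(vote_outcome(80.0, 20.0), vote_outcome(75.0, 25.0), appeal_fee(10_000.0))
```

Note that an exact 75/25 split is not "more than 75%", so under this reading it falls through to the Advisory Board. That fallback is where the centralization concern below comes from.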

This raises the same issues noted in the Nexus analysis. The Advisory Board has too much power, is concentrated in a small number of individuals, and can influence claim assessors through its report. There is also a clear conflict of interest, because stakers decide whether to pay out a claim even though they are the ones penalized by the payment.

Adoption and TVL

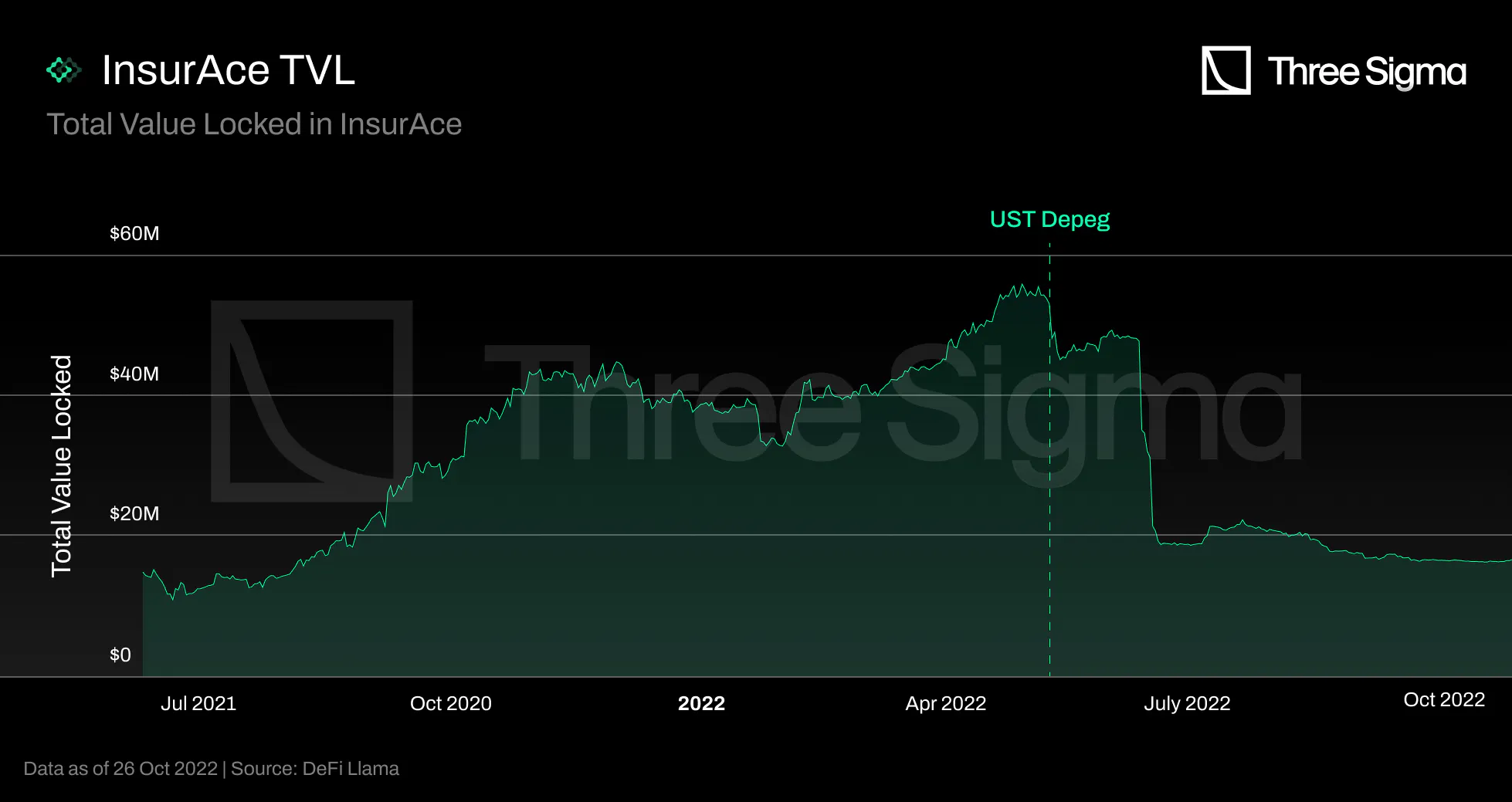

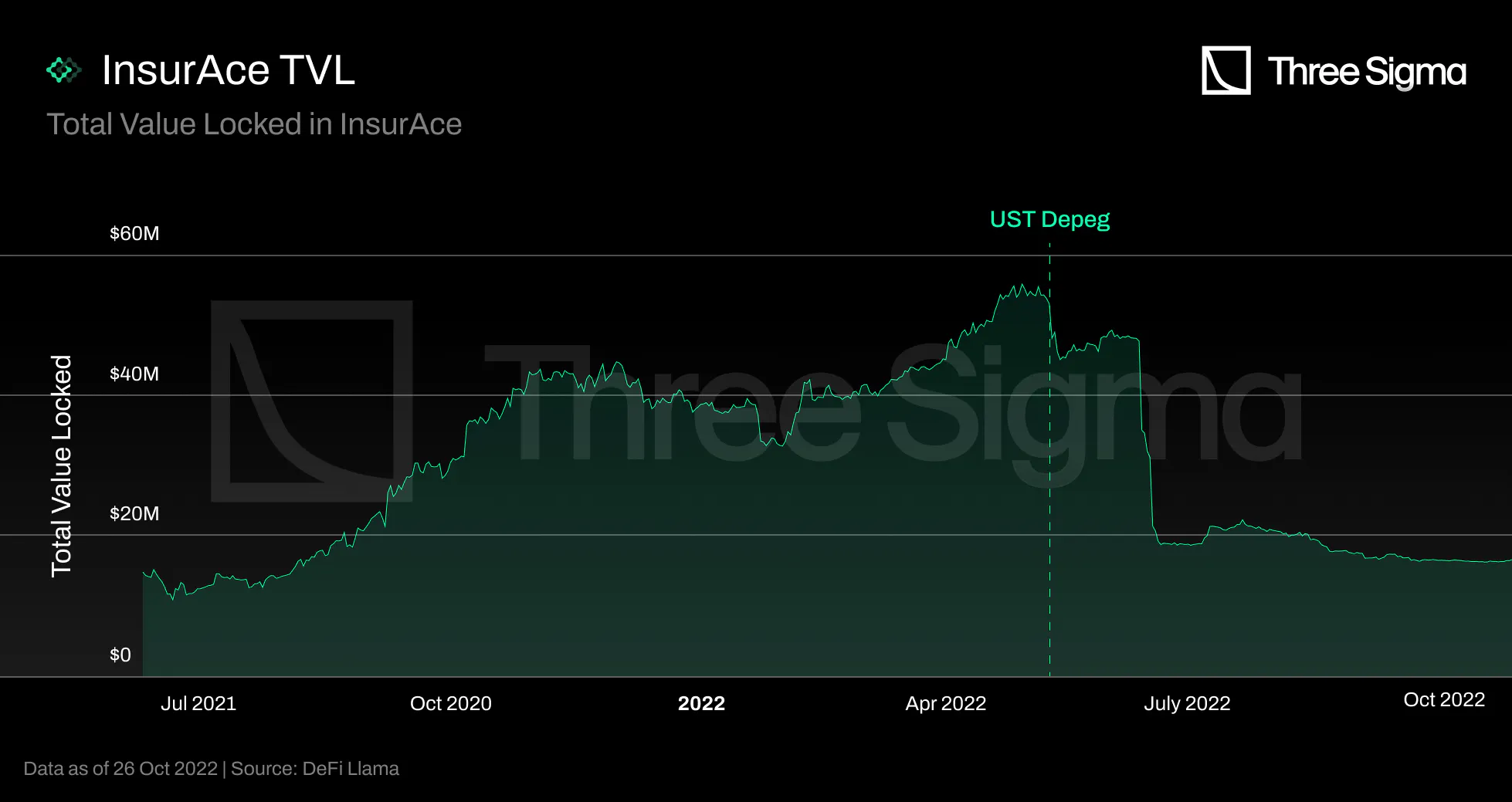

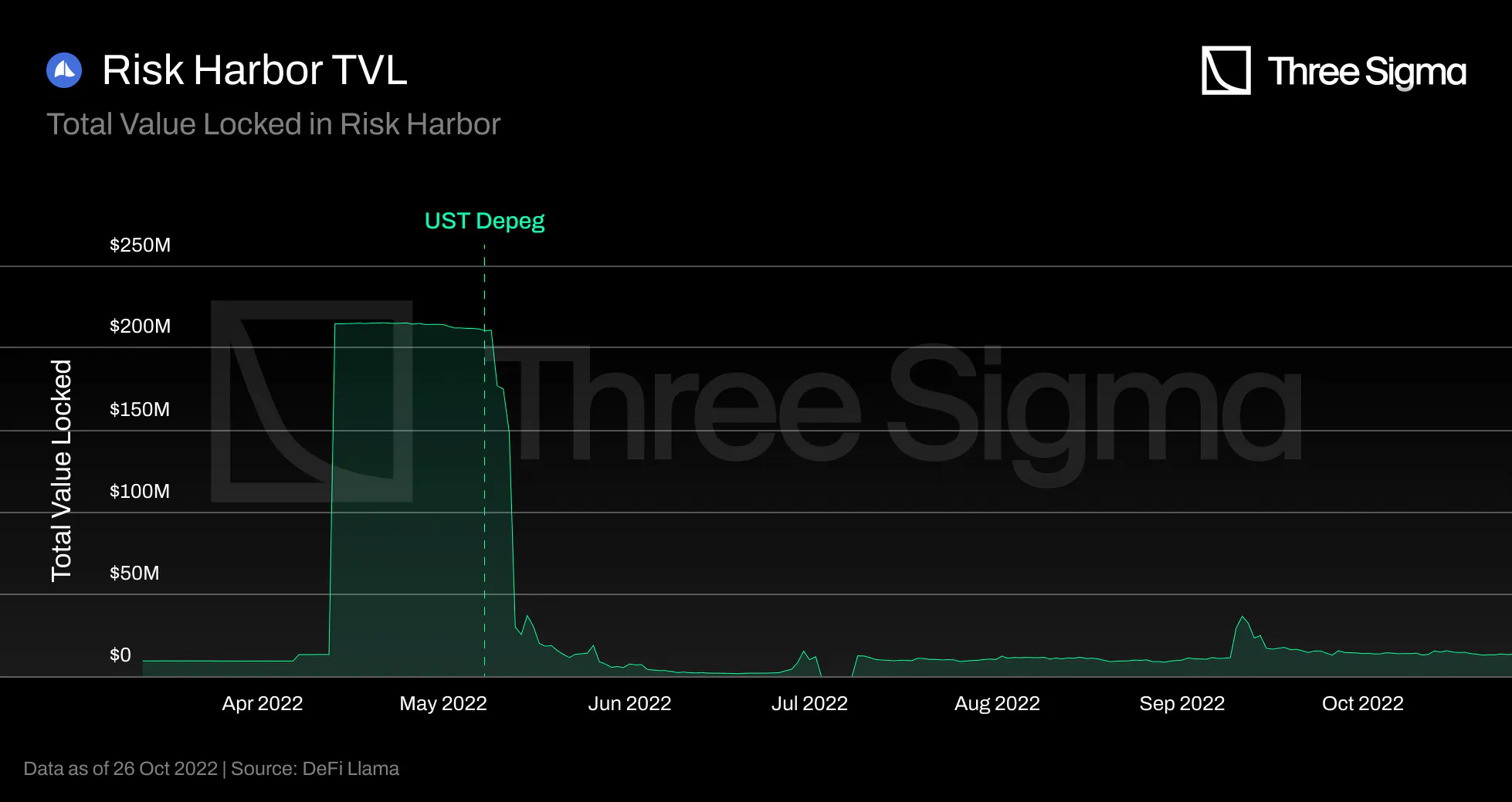

The Total Value Locked of InsurAce has grown from \$14 million in June 2021 to a peak of \$55.8 million

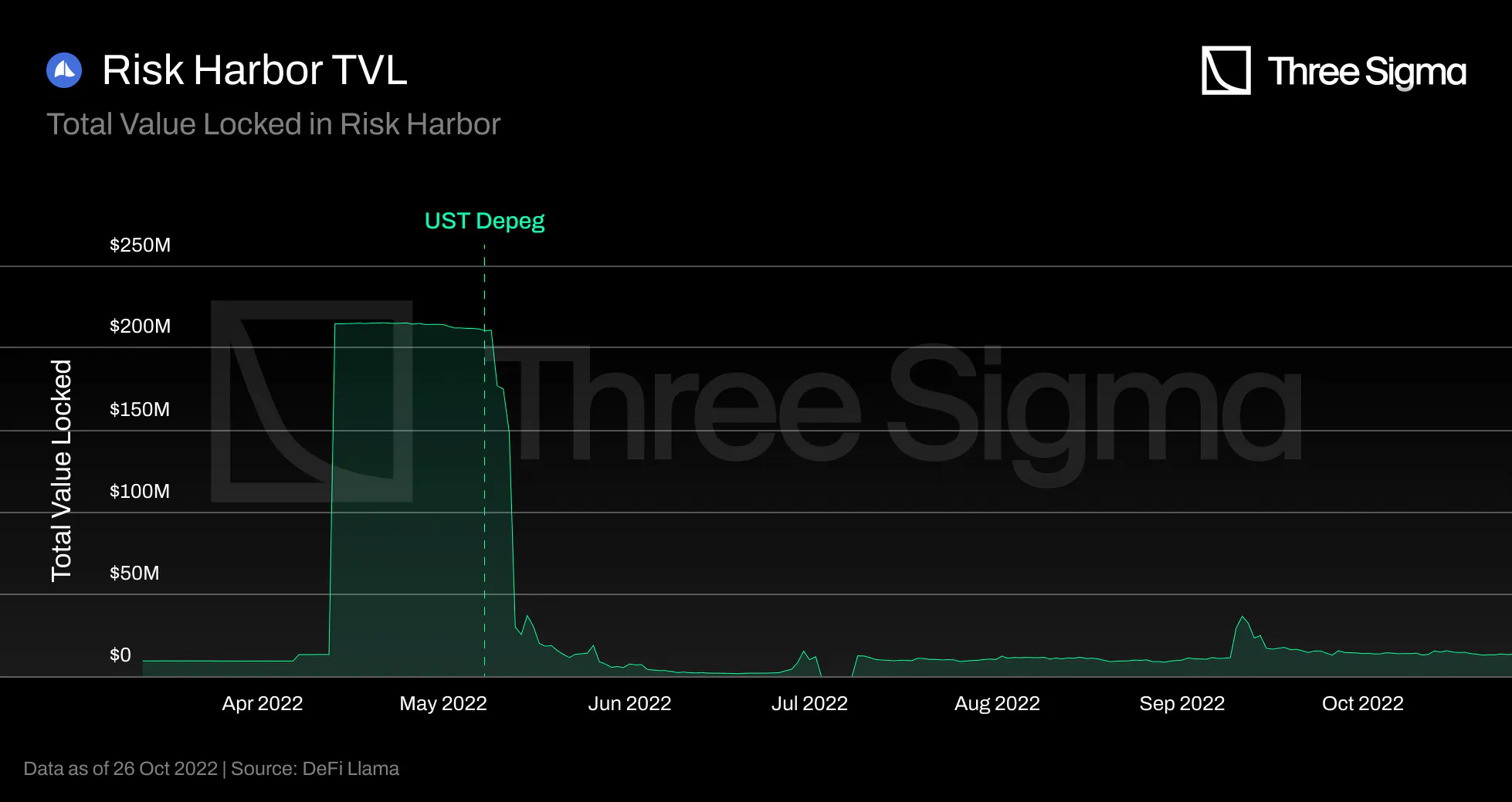

on April 29 2022, an increase of almost 4x. The TVL could have decreased between 7-13 May due to losses

in UST or Anchor, but the InsurAce investment arm is still in development, and the team did not make any

investments. Since the Terra collapse occurred in early May, this likely occurred due to the capital

providers' fear of the impact on claim payouts. In May, the claims were submitted and approved, but the

payments were only processed on June 11. Hence, LPs withdrew their funds to avoid being slashed by these

payouts. However, they were then subject to a 15-day unlocking period, which exacerbated the negative

impact on the TVL after June 11. The InsurAce TVL began a steep decline after that, falling from \$48

million to \$20 million within a week, and has been on a slow decline ever since.

Stakers were unable to withdraw funds from the pools while the InsurAce team assessed the value of accepted claims to determine whether the pools held sufficient capital. InsurAce tried to persuade LPs to keep their funds in the pools by announcing a compensation plan for stakers who remained after all claim payouts were settled. However, after funds had been locked for an undisclosed period, this incentive was insufficient to keep capital in the pools.

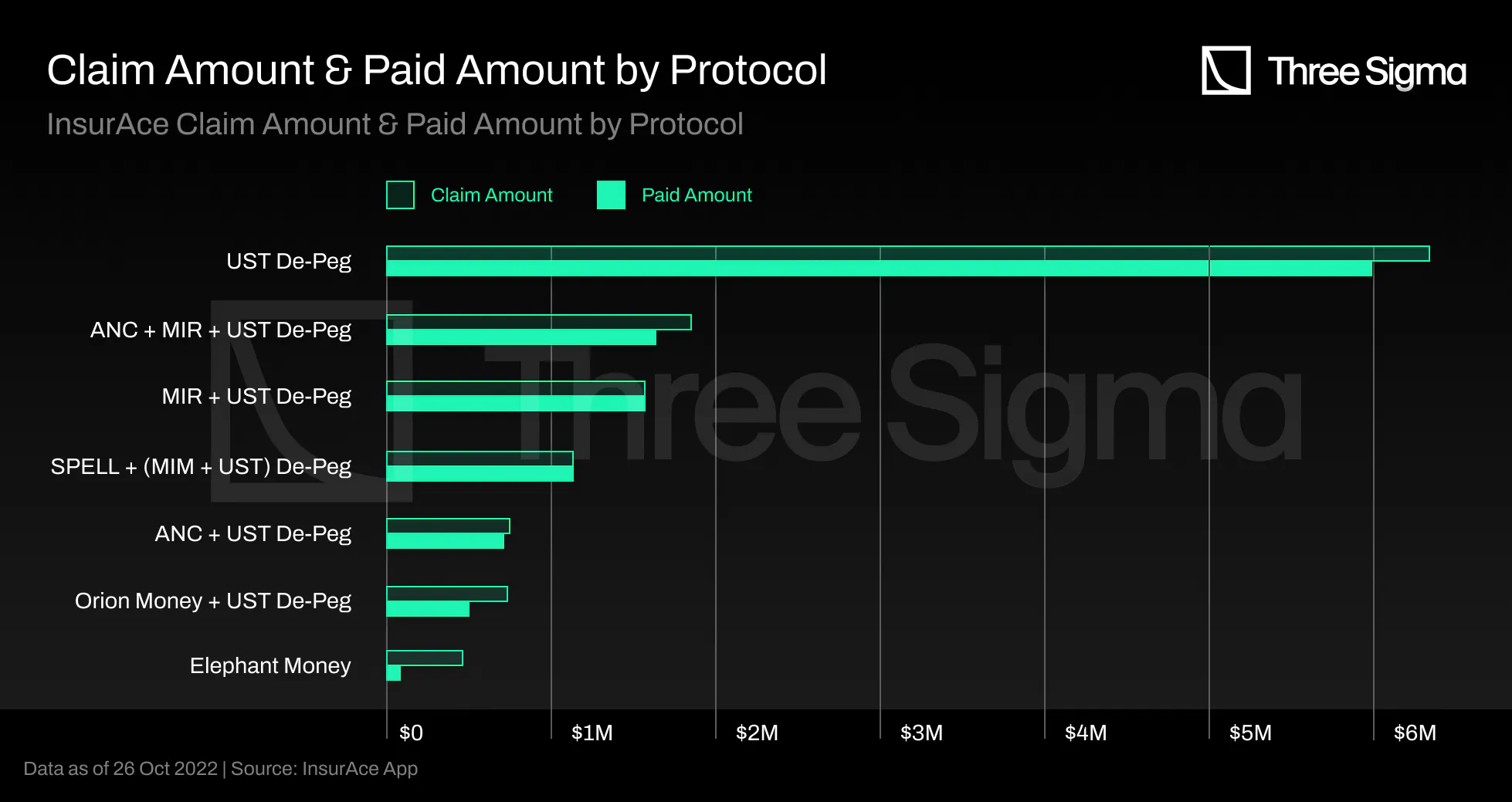

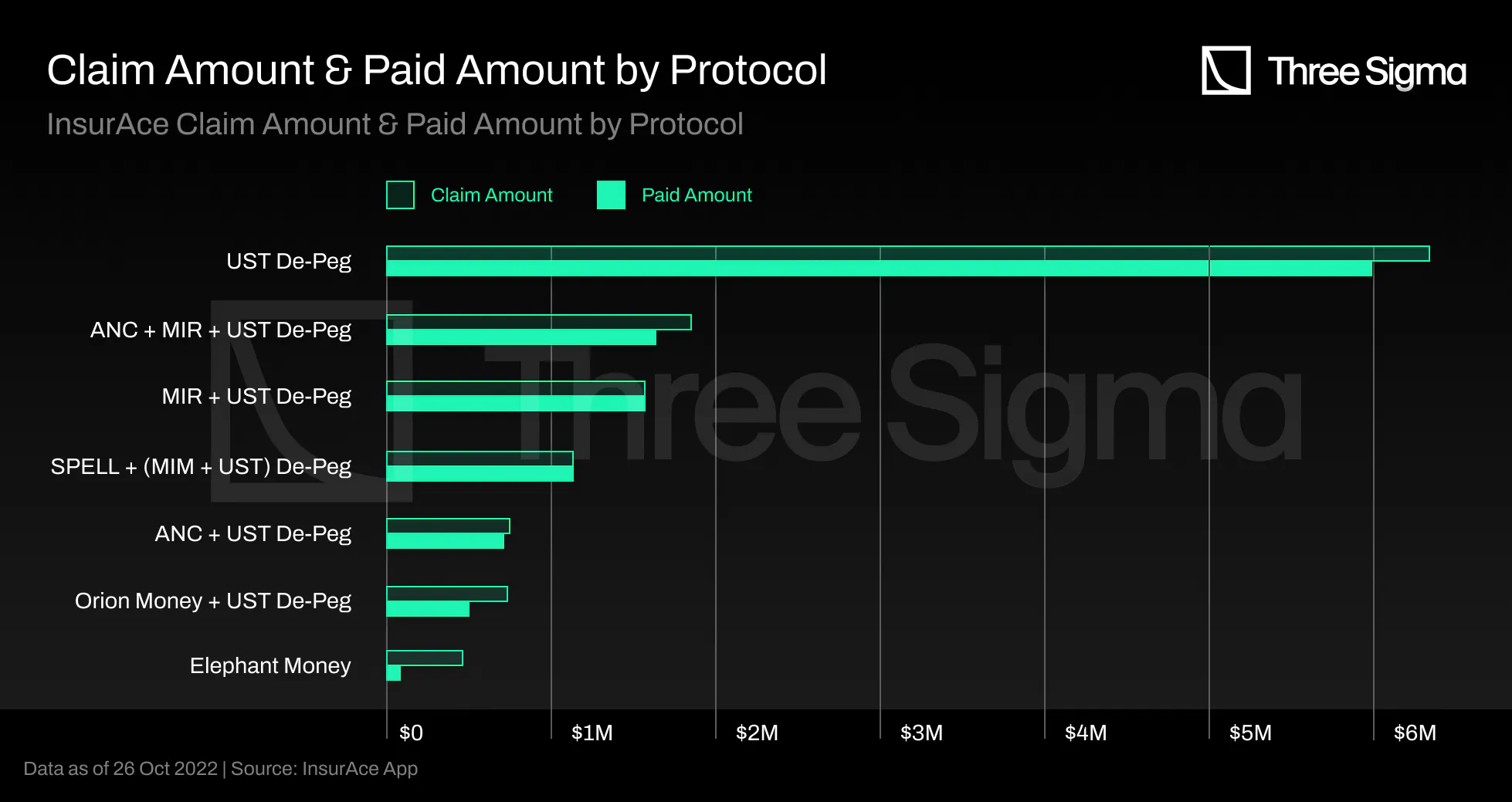

InsurAce covers 140 protocols and has already paid out \$11.6 million in claims. Of 215 claim requests in total, 161 were approved; May alone accounted for 177 requests and 154 approvals. The UST depeg event then caused a significant decline in InsurAce's TVL in June. Almost all of the payout value was paid in June: \$11.5M of the \$11.6M total. The chart shows these payments in May because the payout date is currently recorded as the claim date, even though the actual payment date was June 11. The team is working on a fix for this input error.

Furthermore, the vast majority of these payouts were due to UST De-Peg cover or UST-depeg-related bundle covers, as shown in the chart above.

TVC

InsurAce currently covers \$15.6M in assets and has covered a total of \$348M since its launch.

The largest amount is currently covered on BNB Chain, while Ethereum is surprisingly in last place, and Polygon's share demonstrates the demand for L2 solutions. The protocol with the highest cover amount is GMX, with \$1.8M, followed by BENQI with \$1M; most protocols have less than \$0.2M each. Ethereum having the smallest covered amount may indicate that InsurAce is less attractive where more insurance alternatives exist, as most other insurance protocols operate only on Ethereum.

InsurAce and UST Depeg

The InsurAce.io UST De-Peg cover was officially triggered on May 13, 2022, after UST's 10-day Time Weighted Average Price (TWAP) fell below \$0.88, as specified in InsurAce's UST De-Peg Cover Wording. The cover amount was paid to users who held UST, or any representation of UST supplied directly as liquidity, in their wallets or in accounts with any custodian. They had to hold it both at the time of the cover purchase and on May 13, 2022, and also hold an active UST De-Peg Cover on May 13, 2022. InsurAce was overexposed to UST, with roughly \$21M of exposure. This event had a huge impact on the capital pool, as InsurAce paid out to over 155 UST-related investors.
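To make the trigger condition concrete, the sketch below checks a 10-day TWAP against \$0.88. It assumes one price sample per day, in which case time weighting reduces to a simple mean. The actual cover wording may specify a different price source or sampling interval, and the price series is made up, not real UST data.

```python
from statistics import fmean
from typing import Sequence

TRIGGER_PRICE = 0.88  # USD, from the cover wording
WINDOW_DAYS = 10


def ten_day_twap(daily_prices: Sequence[float]) -> float:
    """TWAP over the last 10 days, ASSUMING one price sample per day.

    With equally spaced samples, time weighting reduces to a plain mean.
    """
    if len(daily_prices) < WINDOW_DAYS:
        raise ValueError("need at least 10 daily prices")
    return fmean(daily_prices[-WINDOW_DAYS:])


def depeg_triggered(daily_prices: Sequence[float]) -> bool:
    """True once a full 10-day window exists and its TWAP is below the trigger."""
    return len(daily_prices) >= WINDOW_DAYS and ten_day_twap(daily_prices) < TRIGGER_PRICE


# Hypothetical collapse: a few days near the peg, then a slide.
prices = [1.00, 0.99, 0.98, 0.92, 0.80, 0.60, 0.45, 0.30, 0.20, 0.15]
print(f"TWAP = {ten_day_twap(prices):.3f}, triggered = {depeg_triggered(prices)}")
```

A TWAP trigger deliberately lags the spot price. A brief dip below \$0.88 does not pay out, but a sustained depeg does.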

On June 11, \$11.5M of a total of \$12.2M in claim requests was paid. The Terra collapse had a significant impact on InsurAce's TVL and, consequently, on its SCR%, but the team has been working on its risk modeling and capital efficiency models to recover from it. A lower SCR% means less capacity for cover, and the team has also severely constrained capacity compared to before the UST payouts.

Revenue

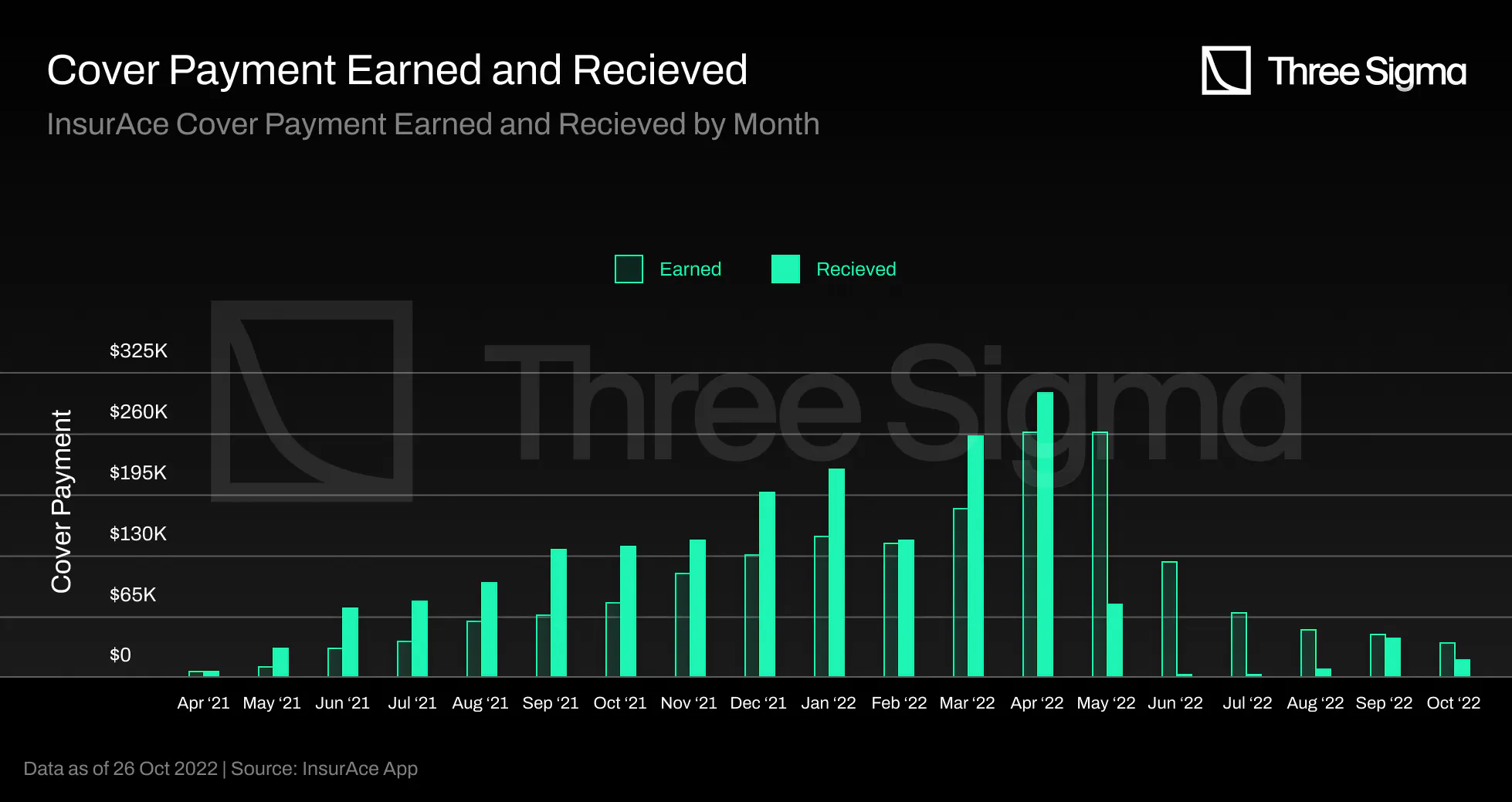

InsurAce aims to generate revenue from insurance premiums and from carry on investment returns. Since the investment arm is still in development, insurance premiums are currently the primary source of revenue.

Revenue is intended to fund operation and development costs, token buybacks, community incentives, ecosystem collaborations, and more.

Unlike some of the protocols analyzed below, InsurAce coverage cannot be sold or modified once purchased.

The premium is paid in advance, but is only counted as "Earned" on a monthly basis, as some policies may

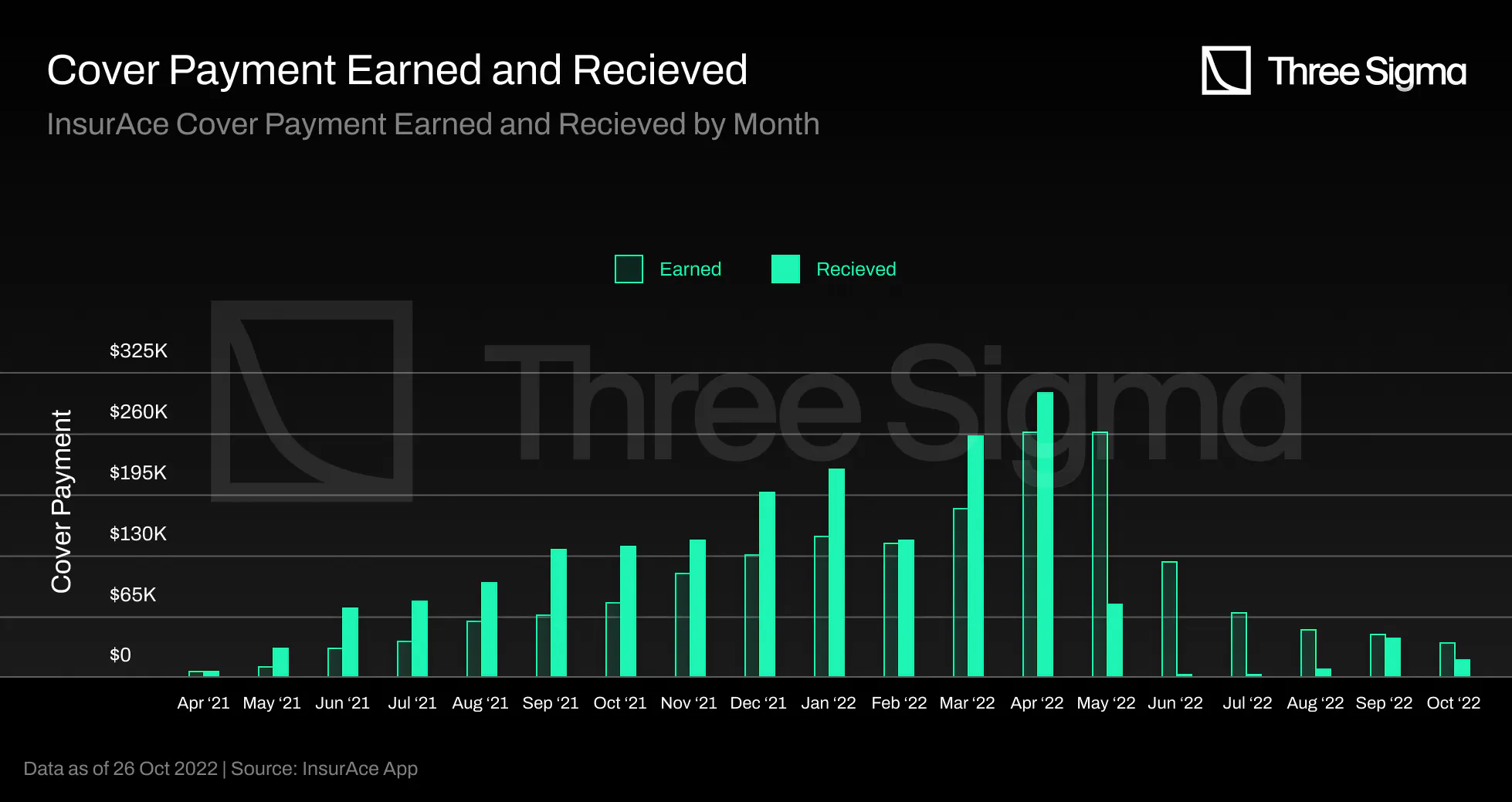

be canceled prior to the expiration date, in which case the protocol will refund the remaining value to

the user. The values referred to as "Earned" represent premiums, and the values referred to as

"Received" represent revenues distributed over the duration of the policy, not taking into account

cancellations of policies but counting the additional revenues from other sources, such as grants from

chains. The revenue value was steadily increasing until the collapse of Terra forced InsurAce to pay

nearly $12 million in claims.
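The gap between premiums paid upfront and premiums recognized as "Earned" can be sketched with straight-line monthly recognition. That schedule, the refund of exactly the unearned remainder, and the 1,200-unit policy are assumptions for illustration. The text does not specify InsurAce's exact accounting.

```python
def earned_premium(total_premium: float, policy_months: int, months_elapsed: int) -> float:
    """Premium recognized as "Earned": straight-line, one month at a time."""
    months = min(max(months_elapsed, 0), policy_months)
    return total_premium * months / policy_months


def cancellation_refund(total_premium: float, policy_months: int, months_elapsed: int) -> float:
    """Unearned remainder returned to the user if the policy is canceled early."""
    return total_premium - earned_premium(total_premium, policy_months, months_elapsed)


# Hypothetical 12-month policy paid upfront for 1,200 units, canceled after 4 months.
received = 1_200.0
print(earned_premium(received, 12, 4), cancellation_refund(received, 12, 4))
```

Under this reading, the full 1,200 shows up as "Received" at purchase, while only 400 is ever "Earned" if the policy is canceled after four months.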

Prior to the UST depeg event, the "Received" amount was increasing as a result of new policy sales, and the "Earned" amount was also increasing as a result of new monthly payments.

After the event, the "Earned" amount fell because the monthly payments from covers protecting UST holders ceased; nevertheless, the protocol continues to earn premiums from long-term covers. The "Received" amount was also affected, because people generally stopped purchasing cover on InsurAce after the incident and were unable to do so anyway due to the low SCR%.

Notably, the chart does not include operational costs, which include the amount spent on INSUR rewards for capital providers. The team reserved 45% of the total supply for mining rewards from the beginning, and this allocation will likely last about two more years. The team intends to share revenue and profit from the investment arm with capital providers in the future, but the percentage has not yet been made public.

Final Thoughts

InsurAce's underwriting model is inspired by the liquidity incentives of DeFi summer. To accelerate underwriting, InsurAce issued mining incentives, which offer insurance providers an APY paid in INSUR tokens. InsurAce APYs follow supply and demand, incentivizing capital providers to rebalance across pools. This maintains an even distribution of underwriting capital, with sufficient funds for modeled payouts. The model is a simple way to bootstrap liquidity quickly, but LPs chasing higher APYs will leave the pool as soon as they find a protocol offering more.

Regarding cover pricing, it is interesting that InsurAce uses machine learning models to estimate parameters typically used in traditional insurance. However, the data available in the DeFi space still seems far from sufficient for these models.

The UST depeg event proved that DeFi insurance serves its purpose: in InsurAce's case, claimants were indeed reimbursed. This is a great step towards adoption, although it took a heavy toll on the SCR% and the protocol seems to be struggling to recover. Mechanisms that allow a quick recovery from such situations, or protect against them in the first place, are therefore very important.

Armor.Fi/Ease.org

Armor was introduced in January 2021 to address fragmented liquidity and limited coverage capacity across most protocols. It extended the Nexus Mutual insurance model while removing the Know Your Customer (KYC) requirement through the arNXM vault. Despite successfully making Nexus' coverage products DeFi-compatible, by 2022 the core team felt that the premium model was not optimal for DeFi. Armor introduced the Uninsurance (Reciprocally-Covered Assets, RCA) coverage model and changed its name to Ease.org in May 2022.

The arNXM vault allows users to provide collateral to Nexus Mutual without a KYC check by acting as a custodian on their behalf. In addition, the Armor team actively monitors yield and risk factors and designs staking strategies accordingly. Because arNXM is yield-bearing, all rewards generated by underwriting Nexus protocols are passed directly to arNXM holders. This vault currently provides over 30% of all underwriting funds to Nexus Mutual, and has provided as much as 45% in the past.
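The yield-bearing mechanism can be illustrated with the generic share-accounting pattern used by many DeFi vaults, the same idea behind ERC-4626. Rewards increase the vault's assets without minting new shares, so every existing share becomes worth more. This is a hypothetical sketch, not arNXM's contract, and the class and method names are invented.

```python
class YieldBearingVault:
    """Generic share-based vault: rewards raise the value of each share."""

    def __init__(self) -> None:
        self.total_assets = 0.0  # underlying tokens held (e.g. staked NXM)
        self.total_shares = 0.0  # vault tokens in circulation
        self.shares = {}         # holder -> vault tokens

    def deposit(self, holder: str, amount: float) -> float:
        # Mint shares at the current exchange rate (1:1 for the first deposit).
        if self.total_shares == 0:
            minted = amount
        else:
            minted = amount * self.total_shares / self.total_assets
        self.total_assets += amount
        self.total_shares += minted
        self.shares[holder] = self.shares.get(holder, 0.0) + minted
        return minted

    def add_rewards(self, amount: float) -> None:
        # Underwriting rewards enter the vault without minting new shares,
        # so they accrue to all existing holders pro rata.
        self.total_assets += amount

    def value_of(self, holder: str) -> float:
        return self.shares.get(holder, 0.0) * self.total_assets / self.total_shares


vault = YieldBearingVault()
vault.deposit("alice", 100.0)
vault.deposit("bob", 100.0)
vault.add_rewards(20.0)  # each holder's 100 is now worth 110
```

Holders never claim rewards explicitly. Their gains are realized when they redeem or sell the vault token.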

Armor also introduced a new product to the DeFi space, arCore, based on a pay-as-you-go (PAYG) model with customizable duration and coverage limits. arCore charged for the insurance policy per block and offered customized duration by letting users purchase coverage from a pool of staked arNFTs, without locking funds into a fixed contract. Although arCore was an innovative insurance product, gas costs on the Ethereum mainnet directly undermined the flexibility it sought to provide, as users with smaller wallets were charged block-level fees that were unaffordable. The product was discontinued at midnight on May 31, 2022 (UTC), as part of the rebranding strategy explained in greater detail later.
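A back-of-the-envelope sketch shows why per-block charging hurt small users: the premium scales with the cover amount, while gas per transaction does not. The 13-second block time approximates pre-Merge Ethereum. The 2.6% annual rate, two transactions, and 30 USD gas cost are hypothetical figures, not arCore's actual parameters.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600
BLOCK_TIME_S = 13.0  # ASSUMPTION: rough pre-Merge Ethereum block time


def premium_per_block(cover_amount: float, annual_rate: float) -> float:
    """Premium charged each block for a cover priced at annual_rate per year."""
    blocks_per_year = SECONDS_PER_YEAR / BLOCK_TIME_S
    return cover_amount * annual_rate / blocks_per_year


def gas_share_of_cost(cover_amount: float, annual_rate: float, blocks: int,
                      tx_count: int, gas_cost_per_tx: float) -> float:
    """Fraction of a user's total spend that goes to gas rather than premium."""
    premium = premium_per_block(cover_amount, annual_rate) * blocks
    gas = tx_count * gas_cost_per_tx
    return gas / (premium + gas)


# Hypothetical: one month of cover (~200k blocks) at 2.6% a year, two
# transactions (fund and withdraw) costing 30 USD of gas each.
month = 200_000
for cover in (1_000.0, 1_000_000.0):
    share = gas_share_of_cost(cover, 0.026, month, tx_count=2, gas_cost_per_tx=30.0)
    print(f"cover {cover:>12,.0f}: gas is {share:.0%} of total cost")
```

With these numbers, gas makes up roughly 97% of the cost of a 1,000-unit cover but under 3% of a 1,000,000-unit cover.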

The arNFTs are another product created by the Armor team, offering users a new way to interact with Nexus Mutual and its coverage policies. Users can mint arNFTs for any protocol for which Nexus Mutual coverage is available and receive an ERC-721 token that they can hold, sell, transfer, or stake to receive fees in ETH and rewards in \$ARMOR. The Ease team will continue to develop arNFTs, with new features on the horizon, but they can no longer be staked in the discontinued arCore product.

To meet increased demand for coverage, Armor developed a second product, arShield. It streamlined and aggregated coverage via Shield Vaults, where users could deposit assets and receive passive coverage for as long as they remained in the vault. The premium was deducted from the asset yield, eliminating the need for upfront payments and lowering the premium cost. This concept gave rise to the shared-risk ecosystem Ease is known for today. Now that Ease is live, the arShield vaults have been discontinued.

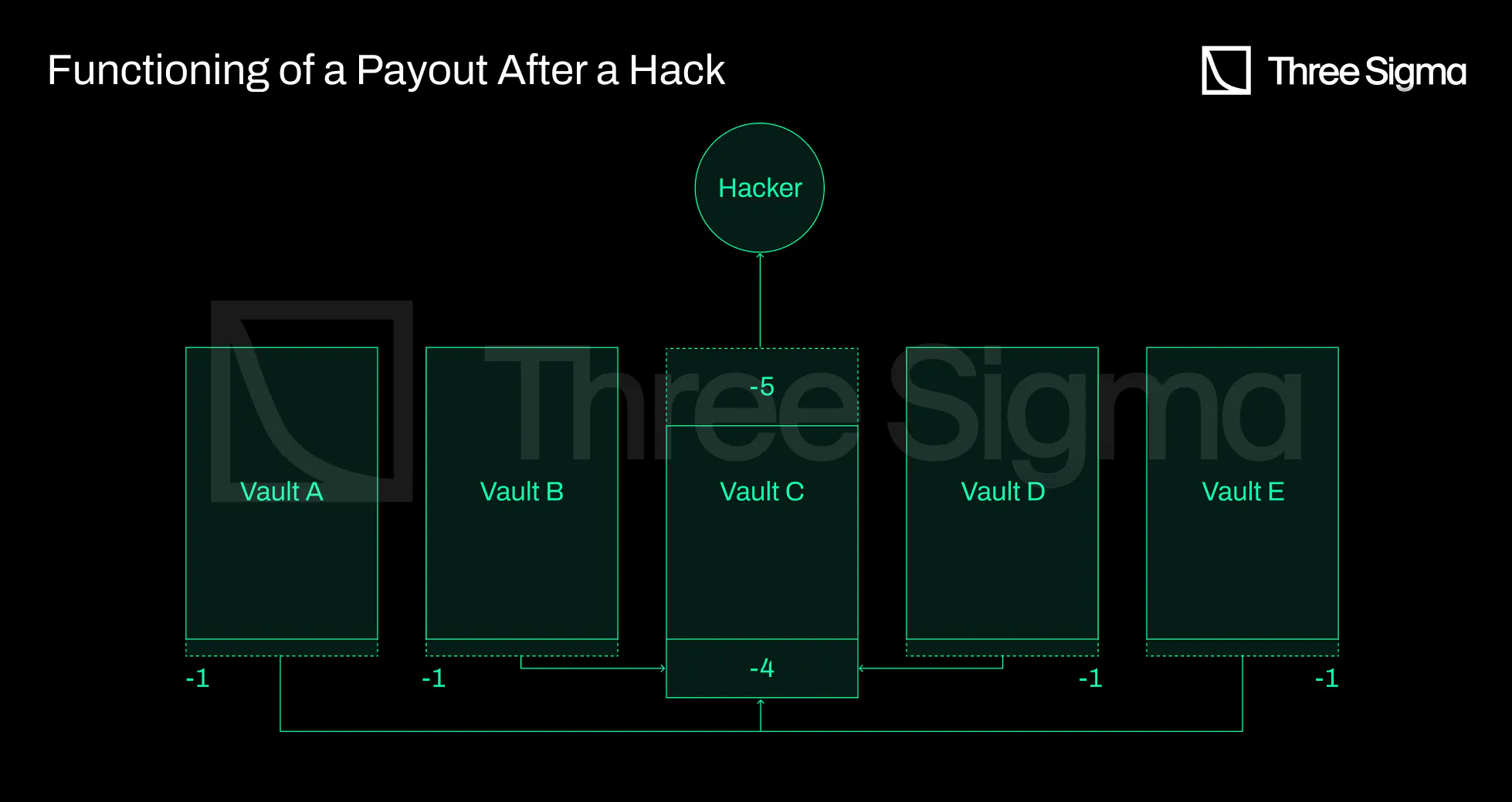

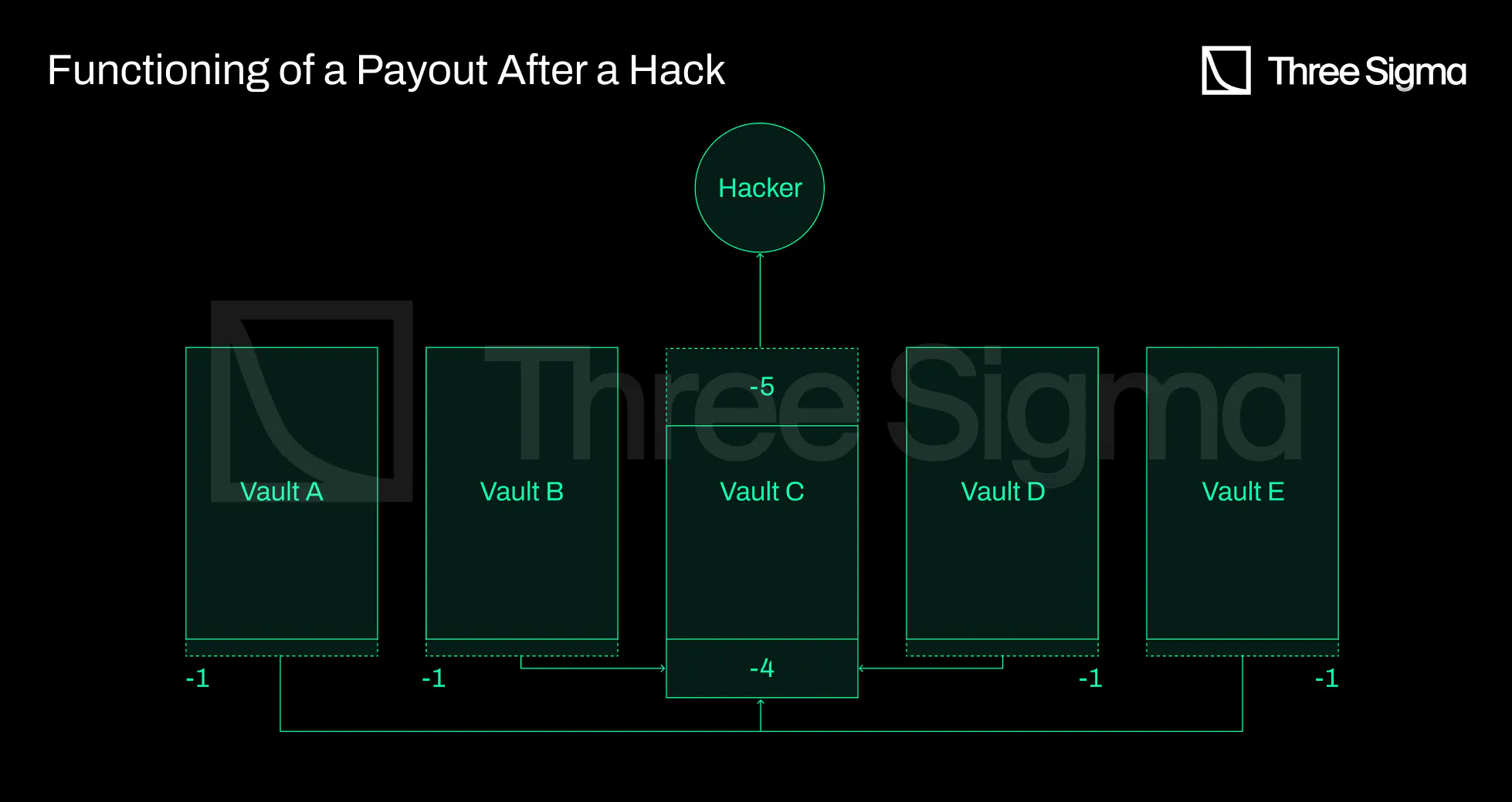

Reciprocally-Covered Assets (RCAs), first introduced by the Ease team, are a DeFi-native coverage method in which covered assets simultaneously underwrite the other assets in the ecosystem. This model lets users store tokens in Uninsurance vaults that charge a one-time, vault-wide fee only in the event of a hack. These premium-free Uninsurance vaults are possible because RCAs collect underwriting capital directly from capital already deployed in DeFi yield strategies and deduct premiums directly from the generated yield. If one of the strategies is exploited, Ease liquidates a proportional amount of funds from all vaults to compensate investors. Future premium payments then replenish the capital liquidated for the payout. Since the cost is only incurred in the event of a hack and is spread across all participants, more participants means a lower individual fee.

The benefit of this system is that risk is distributed across the entire ecosystem rather than carried by a single vault or protocol, and that users do not pay premiums unless there is an exploit. Since risk is distributed proportionally among users, a larger hack results in larger payouts but never in complete insolvency, which makes the coverage model much more resilient. On the other hand, users' funds are never fully covered, as vault capacity is restricted to maintain solvency. If 25% of the RCA ecosystem is hacked simultaneously, hacked vaults are reimbursed only 75% of the stolen amount. This is because the loss is spread across all vaults, including the affected ones, so every vault ends up with 75% of its original value. If the hacked value approaches the total RCA value, nothing is left to reimburse with and the system fails (imagine a hack that affects many DeFi protocols at the same time). The Ease team attempts to prevent this by not adding protocols to the ecosystem indiscriminately: it audits protocols and performs due diligence on those it intends to add. This type of system becomes more secure as protocol diversity increases.
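The 25% example follows from spreading the total loss pro rata over the whole ecosystem. This sketch assumes every vault, hacked or not, is cut to the same fraction of its pre-hack value, ignoring the safety-based weighting Ease applies. The names and figures are hypothetical.

```python
from typing import Dict


def socialize_loss(vault_values: Dict[str, float],
                   stolen: Dict[str, float]) -> Dict[str, float]:
    """Post-payout value of every vault when a hack's loss is shared pro rata.

    vault_values holds pre-hack values; stolen holds the amount taken from each
    hacked vault. Every vault, hacked or not, ends at (1 - f) of its pre-hack
    value, where f is the fraction of the whole ecosystem that was stolen.
    A hacked vault's reimbursement is its final value minus what the attacker left.
    """
    total = sum(vault_values.values())
    loss = sum(stolen.values())
    if loss >= total:
        raise ValueError("hack wipes out the ecosystem: nothing left to reimburse with")
    f = loss / total
    return {name: value * (1.0 - f) for name, value in vault_values.items()}


# Hypothetical ecosystem of 100 units where vault "a" (25 units) is fully drained.
after = socialize_loss({"a": 25.0, "b": 40.0, "c": 35.0}, {"a": 25.0})
recovered = after["a"] / 25.0  # share of its stolen funds that "a" gets back
```

A fully drained vault holding a quarter of the ecosystem recovers 75% of its stolen funds. The total left across all vaults equals the pre-hack total minus the loss.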

Claim Assessment

Armor's claim assessment is identical to Nexus', except that Armor governance replaces the Nexus Claim Assessors in the first instance of a claim. In Ease, the DAO has the final say over what each vault's coverage includes. In the RCA system, all losses are incurred directly by the vault of assets rather than by individuals, eliminating the need for proof of loss and claim procedures. The DAO votes on the amounts to be returned to each affected vault and authorizes the liquidation of tokens from other vaults to complete the payout. Claim payouts are made by sending ETH or a stablecoin to the affected vaults, after which users can withdraw payouts proportional to their vault holdings.

When an exploit occurs, the protocols deemed safer are slashed less, while the least secure protocols are slashed the most. The safety of each protocol is determined by the broader community itself through EASE token delegation.
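The safety-based slashing can be sketched by weighting each vault's share of the loss by a risk weight. How Ease actually turns EASE delegation into slashing weights is not described here, so the weights, the function name, and the figures below are hypothetical. The sketch only shows the direction of the effect.

```python
from typing import Dict


def weighted_haircuts(vault_values: Dict[str, float], loss: float,
                      risk_weights: Dict[str, float]) -> Dict[str, float]:
    """Split a loss across vaults in proportion to value x risk weight.

    risk_weights is HYPOTHETICAL: a stand-in for the community's delegated view
    of each protocol's safety (higher weight = deemed riskier = slashed more).
    """
    exposure = {name: value * risk_weights[name] for name, value in vault_values.items()}
    total_exposure = sum(exposure.values())
    cuts = {name: loss * e / total_exposure for name, e in exposure.items()}
    for name, cut in cuts.items():
        if cut > vault_values[name]:
            raise ValueError(f"vault {name} cannot absorb its share of the loss")
    return cuts


# Two equal hypothetical vaults; "risky" is weighted 3x "safe".
cuts = weighted_haircuts({"safe": 50.0, "risky": 50.0}, loss=20.0,
                         risk_weights={"safe": 1.0, "risky": 3.0})
```

With equal vault sizes and a 3x weight, the riskier vault absorbs 15 of the 20 units of loss and the safer one absorbs 5. Whoever controls the weights therefore controls who pays, which is the incentive problem discussed next.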

Conflict of interest is the primary issue with this stakeholders-as-insurers approach. The DAO votes on the amounts to be returned to each affected vault and authorizes the liquidation of tokens from other vaults to complete the payout, so participants have an incentive to accumulate votes to avoid getting slashed. Protocols with higher TVL in the vaults will hold more EASE, so the DAO will tend to vote to return more funds to larger protocols. In effect, smaller protocols insure larger ones; it is not all-to-all insurance.

Risk Assessment

Technically, reciprocally-covered assets do not require a detailed risk assessment to function. Since no premiums are charged for coverage, Ease can cover protocols without a specific risk assessment. Instead, the Armor DAO's initial approval or denial of a protocol, following a rigorous investigation by the entire community, serves as a figurative risk assessment.

Ultimately, this relies on the same premise as Nexus: the community is responsible for performing due diligence on projects and assessing their risk. Since most members of DeFi communities are average users rather than security experts, it would be imprudent to base the entire Ease protocol on the community's diligence.

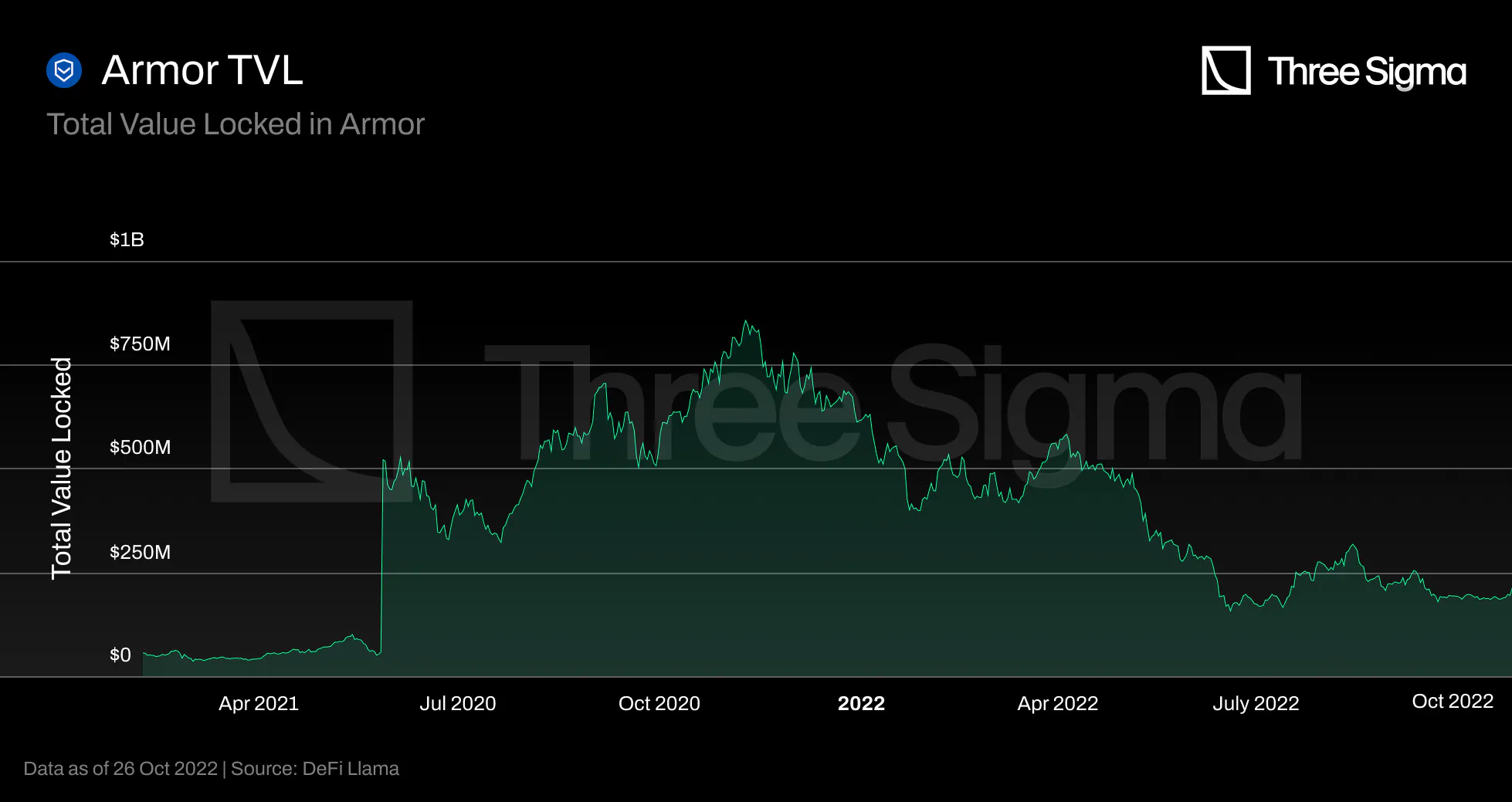

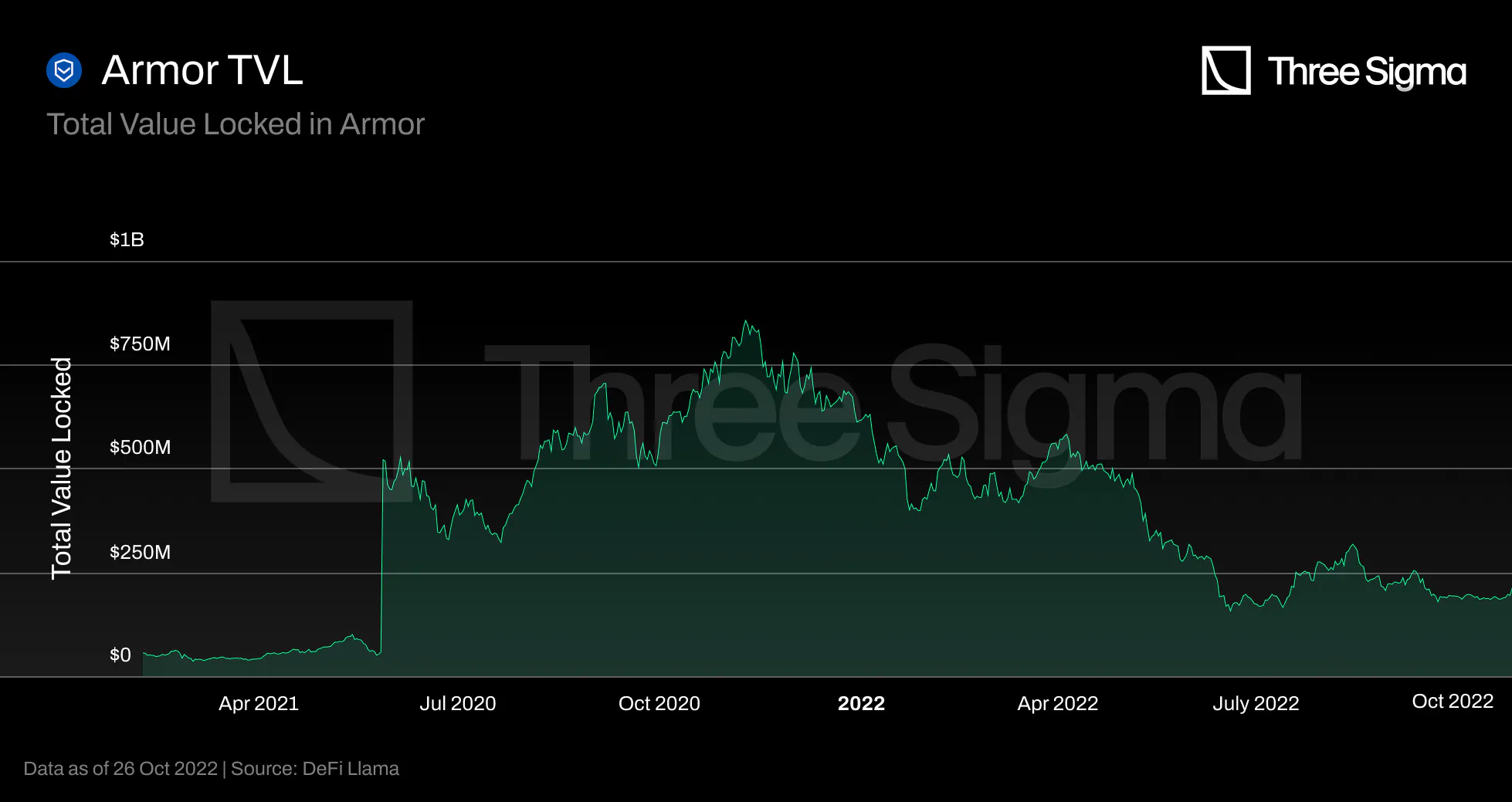

Adoption and TVL

DeFi Llama's Armor and Ease metrics are ambiguous: DeFi Llama included Nexus in its TVL for Armor. The team discovered this and contacted DeFi Llama immediately, but DeFi Llama said it was unsure why this was happening, and the issue was never fixed.

Since the Ease launch in May, the only viable way to track Armor metrics is through the Armor Dune Analytics dashboard. Based on the chart above, there are no longer any active covers in the protocol, since it was discontinued.

DeFi Llama's Ease TVL figures are also invalid because they exclude the legacy arNXM product, which DeFi Llama incorrectly counts toward Armor's TVL, at nearly \$10 million.

Because Ease launched before DeFi Llama had a metrics page for it, its TVL has displayed 491k since day one. Ease does not offer any official or community data dashboards. The Ease team faced the challenging task of launching a new and unique product during a bear market, which may explain why it is having some trouble attracting liquidity.

TVC

In Ease, users deposit tokens in vaults both to be covered and to provide coverage to other users. Ease's TVL can therefore be considered equivalent to its TVC, because all deposited funds are protected by other vaults. The issue with this strategy is that if all protocols, or even just the vaults holding most of the value, are compromised simultaneously, the remaining vaults will not have sufficient funds to cover the defaulted ones. This relies on the same assumption Sherlock uses, namely that multiple high-payout events are very unlikely to occur within a short time span. It would be interesting to see a deeper analysis of this assumption and of the conditions under which it breaks down. DeFi's composability, with protocols built from intertwined lego pieces, could undermine this assumption, since an exploit in one protocol can cause losses in others.
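The role of correlation in this assumption can be quantified with a toy model. It compares the chance that two or more of $n$ protocols are exploited in the same window when exploits are independent against the case where a shared dependency can fail and hit several protocols at once. The common-shock model and all probabilities are hypothetical.

```python
def p_two_or_more_independent(n: int, p: float) -> float:
    """P(at least two of n protocols exploited in one window), independent exploits."""
    p_none = (1 - p) ** n
    p_one = n * p * (1 - p) ** (n - 1)
    return 1 - p_none - p_one


def p_two_or_more_common_shock(n: int, p: float, q: float) -> float:
    """Adds a shared dependency (e.g. a common oracle or bridge) that fails with
    probability q and, when it does, hits at least two protocols at once.
    Otherwise protocols are exploited independently with probability p each."""
    return q + (1 - q) * p_two_or_more_independent(n, p)


independent = p_two_or_more_independent(20, 0.01)
correlated = p_two_or_more_common_shock(20, 0.01, 0.02)
print(f"independent: {independent:.1%}, with common shock: {correlated:.1%}")
```

With 20 protocols, a 1% per-window exploit probability, and a 2% chance of a shared failure, the probability of two or more simultaneous exploits roughly doubles, from about 1.7% to about 3.7%.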

Revenue

RCA products are currently not generating any revenue. Revenue from prior Armor products such as arNFT and arNXM is currently enough to cover expenses. Ease.org does not currently charge any fees, but the DAO will be able to impose a maintenance fee set as a percentage of the yield users generate. This feature is not yet available. Ease is also working on a Zapper integration, which will let users zap assets such as ETH and USDC into Ease's vaults rather than having to provide the exact underlying asset. This feature will soon leave testing and will carry a small fee.

Final Thoughts

Ease's value proposition rests on the assumption that, on average, hacking losses are significantly lower than the premiums that would otherwise be paid. This hypothesis can only be confirmed once the project has been tested by actual exploits.

With this RCA business model, if a hack occurs in one vault, a small portion of each of the other vaults is liquidated to cover the loss instead of users paying a contract premium, distributing the loss proportionally throughout the ecosystem. The largest, most secure, and most robust protocols, and the users of these protocols, have no incentive to join such a system. With this shared-vault architecture, they are more likely to pay for hacks in other protocols than to be hacked and receive funds from other vaults. Even if the safest protocols are slashed less, they will still be slashed multiple times as other protocols are hacked. This risk diversification seems very beneficial for the system as a whole, as a large hack will never result in insolvency. However, proper risk diversification only happens if many different protocols and participants are covered. One variation that could mitigate this would be to create groups of vaults with different risk categories. Riskier protocols could be grouped to share the same risk, or individual users could be better rewarded for providing the equivalent of their covered amount as cover for a riskier protocol.

Also, relying on community decisions assumes that token holders can conduct extensive due diligence at the smart contract level, which is beyond the knowledge of regular users. The safety of each protocol is determined by the community through EASE token delegation. This could in turn be a point of failure if incentives are misaligned, i.e., if a large share of voting power is gathered by a protocol or user that would benefit significantly from deeming a protocol safer than it truly is.

Finally, the assets in the ecosystem serve as its collateral, meaning that available coverage increases as the ecosystem expands. Since risk is shared by all users and all vaults, users are not genuinely insured in the conventional sense. Rather, in the event of an exploit they lose only a portion of their capital instead of all of it.

Unslashed

Unslashed was launched on January 6, 2021, offering cover against smart contract hacks, CeFi exchange hacks, stablecoin depegs, and oracle failures. It allows users to create Capital Pools identical to those of the previously described protocols, in which capital providers deposit ETH and their risk exposure is limited to a single insurance policy. Capital Buckets, structured insurance products that spread risk across numerous insurance policies, are also available.

Anyone may become a capital provider and offer risk coverage by allocating funds, which generates a return and provides insurance coverage for the ecosystem as a whole. The return comprises three streams: policy premiums, interest generated via Enzyme Finance, and the USF Capital Mining Program. The Capital Mining Program lets the protocol reward early adopters and users of Unslashed with its governance token via the USF/ETH Uniswap pool.

Enzyme Finance is an asset management protocol that lets the supplied capital earn yield efficiently. It can help increase the available Bucket capital, thereby increasing the amount of coverage that can be provided.

Capital suppliers receive premiums live, as premiums are streamed directly to them. They are not locked into a specific policy for any amount of time: they can leave a pool or bucket whenever they wish and have access to liquidity to close their position.

Both capital providers and coverage seekers can trade their positions on external platforms, as both positions are tokenized as ERC-20 tokens, which improves their composability with other DeFi protocols.

Capital Buckets

A Capital Bucket is a collection of carefully designed, analyzed, priced, and assembled insurance policies for insurers to underwrite, diversifying their risk exposure.

The Spartan Bucket was the first structured Capital Bucket available on Unslashed. It protects users

against losses on six centralized exchanges (loss of funds policy), two wallets, eight DApps (Smart

Contract Protection Policy), Chainlink oracles (oracle failure policy), Lido Finance (slashing protection

policy), three custodians, and four peg-loss scenarios. The DAO can increase the default maximum exposure

by 5% per policy’s insurance capacity.

Cover Pricing

Unslashed uses a pay-as-you-go model: users can stop their policy at any time, and payments are

calculated in real time. Pricing depends on several factors. Besides a fair pricing methodology applied

to each policy or policy type, Unslashed considers the correlations between policies that belong to the

same Capital Bucket. The pricing also takes into account loss distributions, as is done in traditional

actuarial pricing. The most recent policies include a supply and demand curve, allowing the premium to

vary with the utilization ratio.
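Because Unslashed does not publish its pricing model, the following is only a minimal sketch of the two mechanics just described: a premium rate that rises with the utilization ratio (active cover divided by available capital), and a pay-as-you-go charge that accrues only while the policy is open. The curve shape and every parameter (`base_rate`, `slope`, `kink`, `jump_slope`) are hypothetical. They borrow the kinked-rate shape common in DeFi lending markets and are not Unslashed's values.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def utilization(active_cover: float, capital: float) -> float:
    """Share of the pool's capital already committed to active cover."""
    if capital <= 0:
        raise ValueError("capital must be positive")
    return active_cover / capital


def annual_rate(u: float, base_rate: float = 0.02, slope: float = 0.05,
                kink: float = 0.8, jump_slope: float = 0.5) -> float:
    """Hypothetical kinked curve: gentle slope up to `kink`, steep above it."""
    if u <= kink:
        return base_rate + slope * u
    return base_rate + slope * kink + jump_slope * (u - kink)


def accrued_premium(cover_amount: float, rate: float, seconds_open: int) -> float:
    """Pay-as-you-go charge for the time the policy was open.

    Assumes the rate stayed constant over that time; a live system would
    re-price whenever utilization changes.
    """
    return cover_amount * rate * seconds_open / SECONDS_PER_YEAR


# 10 ETH of cover held for 30 days in a pool that is 60% utilized.
rate = annual_rate(utilization(active_cover=600, capital=1000))  # about 0.05 per year
cost = accrued_premium(10, rate, 30 * 24 * 60 * 60)              # about 0.041 ETH
```

The steep segment above the kink is one way to make scarce capacity expensive. This matches the stated idea of a premium that varies with utilization, but the real curve may look quite different.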

The team states that it has onboarded quants from traditional finance and built and calibrated models

that allow Unslashed to price risk fairly and structure insurance products. However, none of these

models are public, so they carry an inherent risk: trust in the team is required.

Apart from the factors considered in calculating premiums, there is no information on how the

calculation is done, how weights are assigned to each factor, or whether this is a closed-door process

run by the team or one that accepts input from governance. Given that Unslashed uses a pay-as-you-go

model, the calculation most likely runs off-chain. Another insurance protocol, Armor, implemented an

on-chain pay-as-you-go policy but had to discontinue it because Ethereum fees made it unsustainable.

Risk Cost

The minimum capital required corresponds to the maximum available cover, which is calculated by a

predefined formula that is not publicly disclosed. The design of the Capital Pools prevents withdrawing

capital or buying more cover if doing so would result in the maximum payout exceeding the maximum

available cover. Because deposited premiums flow into the Capital Pool gradually over time, the Maximum

Available Cover does not change, but capacity can free up to either withdraw some of the supplied capital

or purchase additional coverage.
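The capacity rule above can be illustrated with a small in-memory model. The actual formula that links capital to maximum cover is undisclosed, so this sketch assumes the simplest case consistent with the text: maximum available cover equals the capital in the pool (a hypothetical 1:1 ratio). It also assumes that capacity frees up when cover is cancelled or expires. `CapitalPool` and its methods are illustrative names and do not correspond to Unslashed contracts.

```python
from dataclasses import dataclass


@dataclass
class CapitalPool:
    """Hypothetical pool model; max cover is assumed to equal capital (1:1)."""
    capital: float = 0.0
    active_cover: float = 0.0

    def max_cover(self) -> float:
        # Assumption: the undisclosed formula is replaced by a 1:1 ratio.
        return self.capital

    def deposit(self, amount: float) -> None:
        self.capital += amount

    def can_withdraw(self, amount: float) -> bool:
        # A withdrawal must not leave active cover above the remaining capacity.
        return amount <= self.capital and self.active_cover <= self.capital - amount

    def can_buy_cover(self, amount: float) -> bool:
        return self.active_cover + amount <= self.max_cover()

    def withdraw(self, amount: float) -> None:
        if not self.can_withdraw(amount):
            raise ValueError("withdrawal would leave active cover uncollateralized")
        self.capital -= amount

    def buy_cover(self, amount: float) -> None:
        if not self.can_buy_cover(amount):
            raise ValueError("insufficient capacity for this cover")
        self.active_cover += amount

    def release_cover(self, amount: float) -> None:
        # Assumption: capacity frees up when cover is cancelled or expires.
        self.active_cover = max(0.0, self.active_cover - amount)
```

For example, a pool holding 100 ETH with 80 ETH of active cover would let suppliers withdraw at most 20 ETH and sell at most 20 ETH of additional cover under this assumed 1:1 rule.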

Unslashed considers diversification across multiple smart contracts insufficient, as similar design

patterns may lead to similar attack vectors. For this reason, the team chose to diversify the risk borne

by underwriters (capital suppliers) across as many verticals as possible (smart contract risk, validator

slashing, exchange hacks, etc.).
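The argument for diversifying across verticals, rather than only across smart contracts, follows from a standard portfolio result. Take n equally weighted exposures, each with loss variance σ², and a common pairwise correlation ρ. The variance of the average loss is then σ²(1/n + (1 − 1/n)ρ). As n grows, this tends to ρσ². Correlated exposures, such as contracts that share a design pattern, therefore leave a floor of risk that adding more of them cannot remove. The snippet below only evaluates that formula, and its numbers are illustrative rather than Unslashed data.

```python
def avg_loss_variance(n: int, sigma: float, rho: float) -> float:
    """Variance of the mean of n equally weighted, equicorrelated losses."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return sigma ** 2 * (1 / n + (1 - 1 / n) * rho)


for rho in (0.0, 0.5):
    row = [round(avg_loss_variance(n, sigma=1.0, rho=rho), 3) for n in (1, 10, 100)]
    print(f"rho={rho}: {row}")
# rho=0.0: [1.0, 0.1, 0.01]
# rho=0.5: [1.0, 0.55, 0.505]
```

With uncorrelated exposures the variance keeps shrinking as exposures are added. With ρ = 0.5 it stalls near 0.5, which is why low-correlation verticals matter more than sheer policy count.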

No more information could be found on the determination of the minimum capital required, nor on the risk

vectors integrated in cover pricing.

Tokenomics

USF is a governance token. Holders can vote on decisions regarding the direction of the protocol and

updates to its parameters. The team will initially manage the protocol parameters and gradually

transfer this control to the Unslashed DAO.

Capital suppliers supply assets (e.g. ETH) to Individual Capital Pools and receive yield from the paid

premiums, which Cover Buyers pay in the same asset (ETH). Those who instead deposit into Capital Buckets

earn more types of yield: premiums, asset management yield, and USF capital mining rewards. Since USF is

distributed as a reward for supplying capital and has no further utility, recipients tend to sell it,

creating sell pressure that results in a steady decline in the token's value.

Claiming Assessment

DAO-based claim assessment creates a conflict of interest: the DAO needs to preserve the mutual's

capital, yet it is also obliged to spend that same capital to pay valid claims. Unslashed was one of the

first decentralized insurance protocols to identify this issue and adopt Kleros to arbitrate claims in a

fair, transparent, and efficient manner.

In the case of a claimable incident, a user may submit a claim for reimbursement under the terms of the

policy. The claim request is followed by a period during which any user can contest the claim if they

believe it violates the policy terms. If no one contests the claim, it is approved and the payment is

made. If there is a dispute, a case is opened in the Kleros decentralized court, and Kleros jurors

determine whether the claim is valid. A claim can only be contested once, although the ruling can be

appealed several times.
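The claim flow above can be summarized as a small state machine. This is an illustrative model only. The `ClaimStatus` enum and the `Claim` class are hypothetical names, the length of the contest window is left out, and Kleros rulings are passed in as plain booleans rather than read from the real Kleros arbitrator contracts.

```python
from enum import Enum, auto


class ClaimStatus(Enum):
    SUBMITTED = auto()   # inside the contest window
    DISPUTED = auto()    # a Kleros case is open
    APPROVED = auto()    # payout can be made
    REJECTED = auto()


class Claim:
    def __init__(self) -> None:
        self.status = ClaimStatus.SUBMITTED
        self.contested = False
        self.rulings: list[bool] = []   # Kleros rulings in order, True = valid

    def contest(self) -> None:
        # Any user may contest, but a claim can only be contested once.
        if self.status is not ClaimStatus.SUBMITTED or self.contested:
            raise RuntimeError("claim can no longer be contested")
        self.contested = True
        self.status = ClaimStatus.DISPUTED

    def close_contest_window(self) -> None:
        # Uncontested claims are approved once the window ends.
        if self.status is ClaimStatus.SUBMITTED:
            self.status = ClaimStatus.APPROVED

    def record_ruling(self, valid: bool) -> None:
        # First ruling or an appeal round; the latest ruling decides the outcome.
        if not self.contested:
            raise RuntimeError("no dispute is open for this claim")
        self.rulings.append(valid)
        self.status = ClaimStatus.APPROVED if valid else ClaimStatus.REJECTED
```

Modelling appeals as further calls to `record_ruling` keeps the "contest once, appeal many times" rule explicit: `contest` is guarded by a flag, while rulings can be appended repeatedly.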

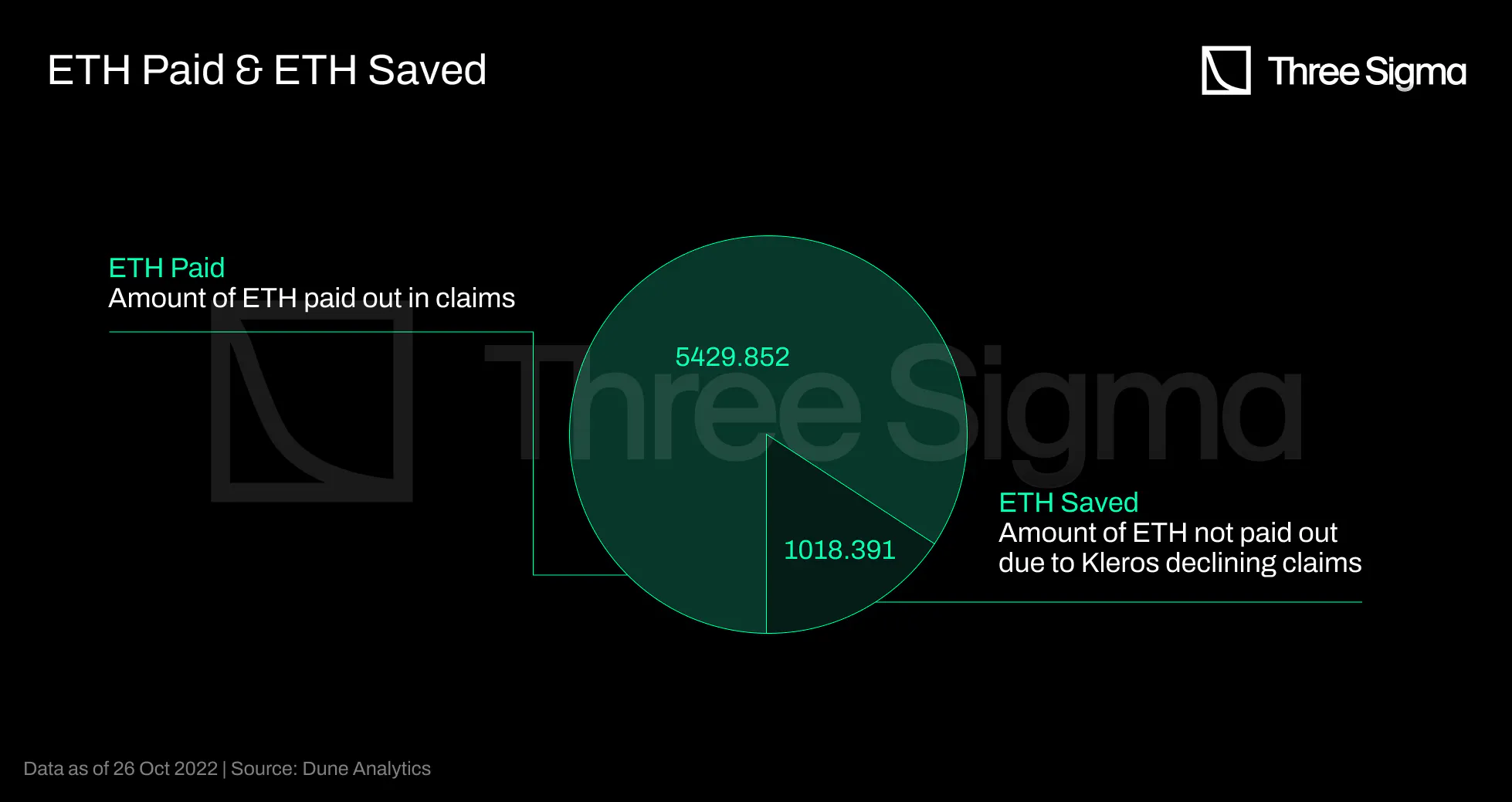

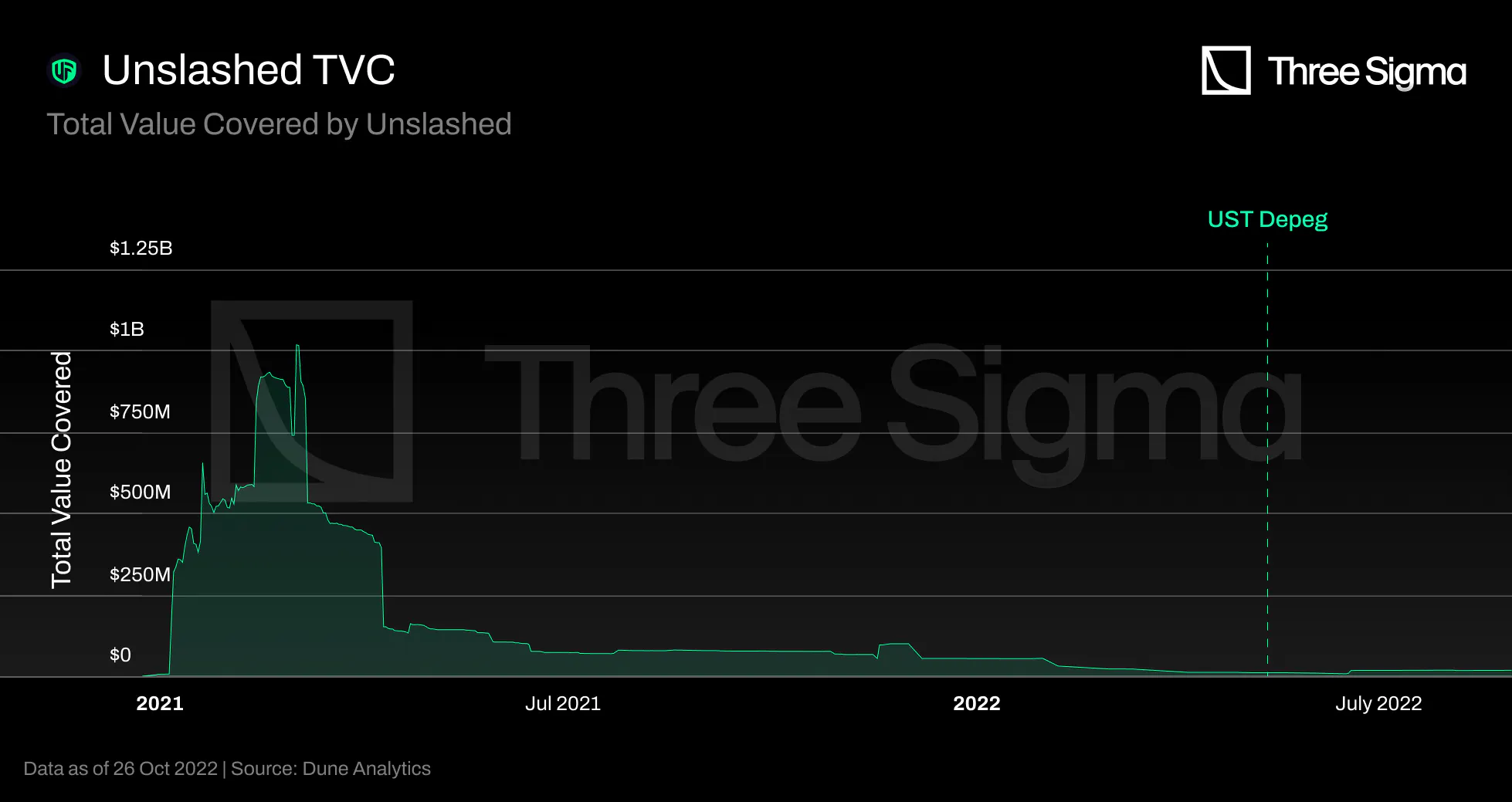

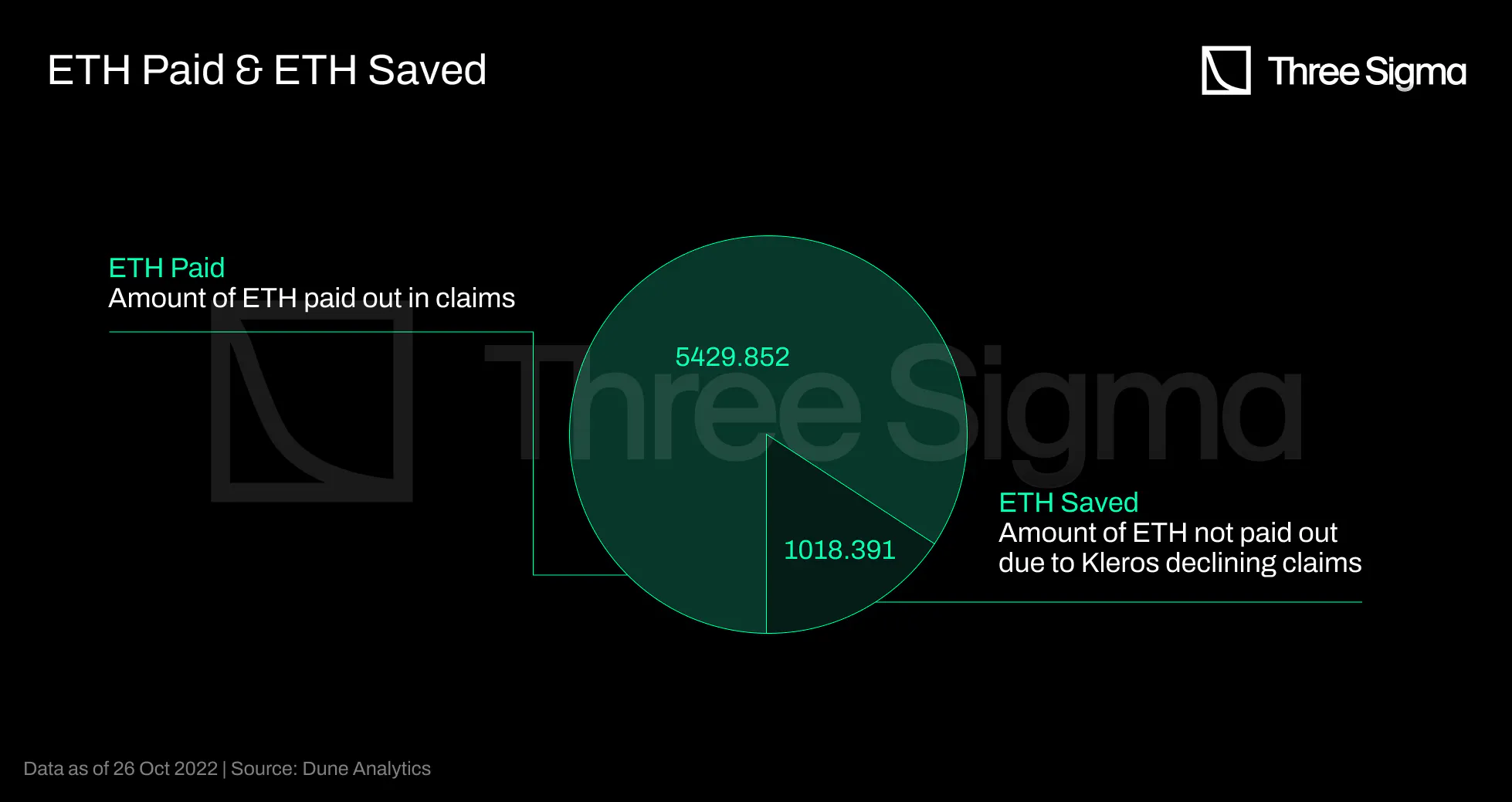

Before the UST depeg, only two claim requests had been submitted to Unslashed; after the event, more

than eighty claims were filed. Unslashed's largest claim to date, a 742 ETH loss caused by the UST depeg,

was rejected multiple times by the Kleros court arbitrating the case due to a 51% attack.

Adoption and TVL

Unslashed's Total Value Locked (TVL) began at \$130 million on 14 March 2021 and peaked at approximately

\$169 million on 12 May 2021. Since then, Unslashed's TVL has been declining, reaching just under \$23

million at the beginning of October.

At the time of Terra's collapse, Unslashed provided Stablecoin Depeg cover for UST users. As specified in

their UST De-Peg Cover Wording, claims under the Unslashed UST De-Peg coverage became possible once the

14-day time-weighted average price (TWAP) of UST fell below \$0.87. Unslashed paid more than 1000 ETH in

June in multiple batches; therefore, the chart does not depict a sudden decline in value, but rather a

gradual decline throughout June.
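The depeg trigger can be checked with a time-weighted average over a 14-day window. The sketch below illustrates the idea under its own assumptions and does not reproduce the cover wording's exact definition, which may specify a particular price source or sampling scheme. It takes a list of hypothetical `(timestamp, price)` observations. Each price is treated as in effect until the next observation or the end of the window, and the resulting average is compared with the \$0.87 threshold.

```python
WINDOW_SECONDS = 14 * 24 * 60 * 60   # 14-day TWAP window
THRESHOLD = 0.87                      # trigger price in USD


def twap(observations: list[tuple[int, float]], window_end: int,
         window: int = WINDOW_SECONDS) -> float:
    """Time-weighted average price over [window_end - window, window_end].

    Prices form a step function: each one holds until the next observation.
    Time before the first observation in the window is not counted.
    """
    start = window_end - window
    obs = sorted(observations)
    weighted, covered = 0.0, 0
    for i, (ts, price) in enumerate(obs):
        nxt = obs[i + 1][0] if i + 1 < len(obs) else window_end
        seg_start, seg_end = max(ts, start), min(nxt, window_end)
        if seg_end > seg_start:
            weighted += price * (seg_end - seg_start)
            covered += seg_end - seg_start
    if covered == 0:
        raise ValueError("no observations inside the window")
    return weighted / covered


def depeg_triggered(observations: list[tuple[int, float]], window_end: int) -> bool:
    return twap(observations, window_end) < THRESHOLD
```

A TWAP rather than a spot price means a brief dip cannot trigger payouts on its own. In the hypothetical case of seven days at \$1.00 followed by seven days at \$0.50, the TWAP is \$0.75 and the trigger fires.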

A total of 102 claims have been submitted. Seven claim requests, all linked to the UST depeg, were

disputed on Kleros and refused, saving a total of 1018.391 ETH.

TVC

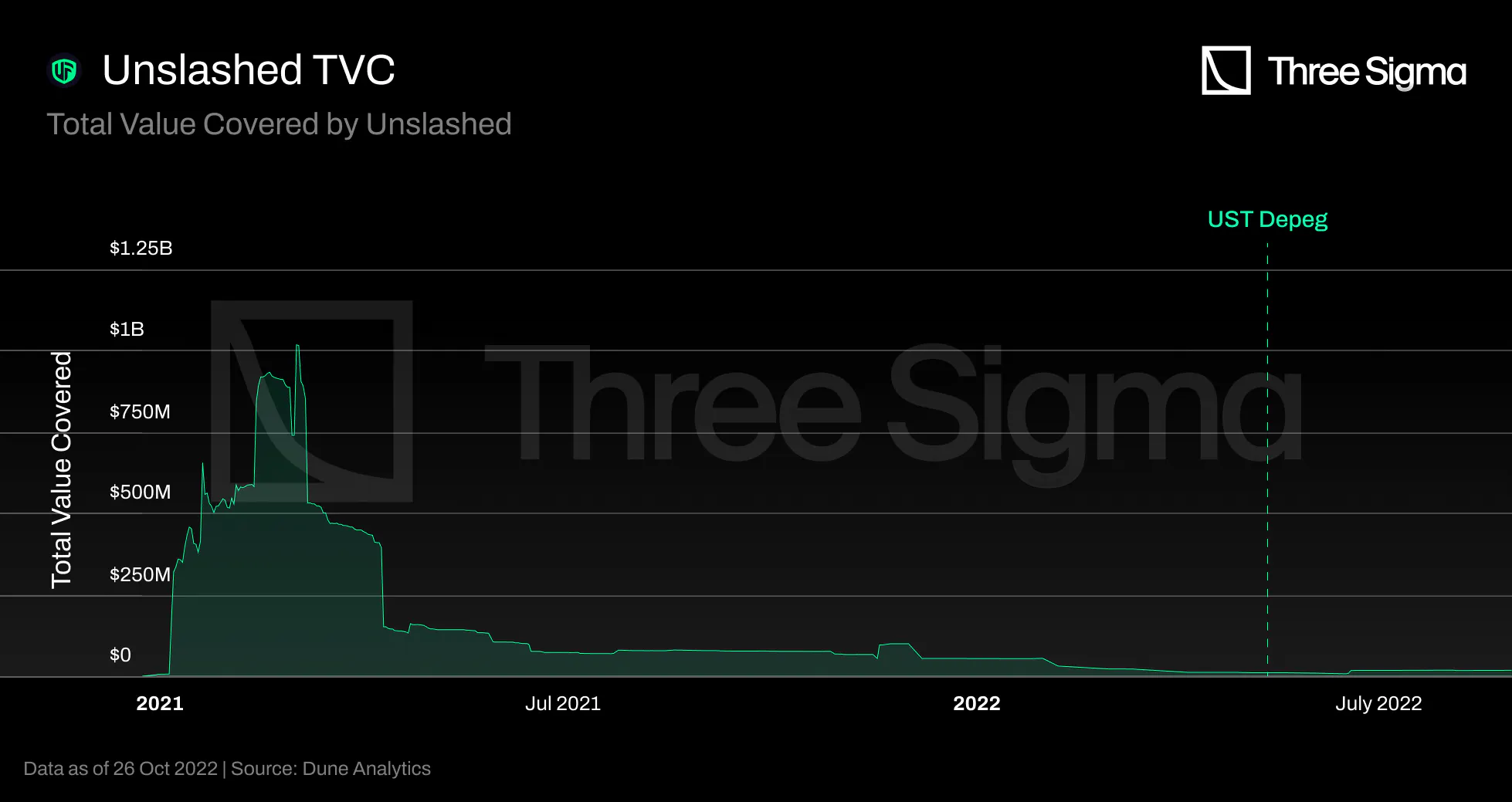

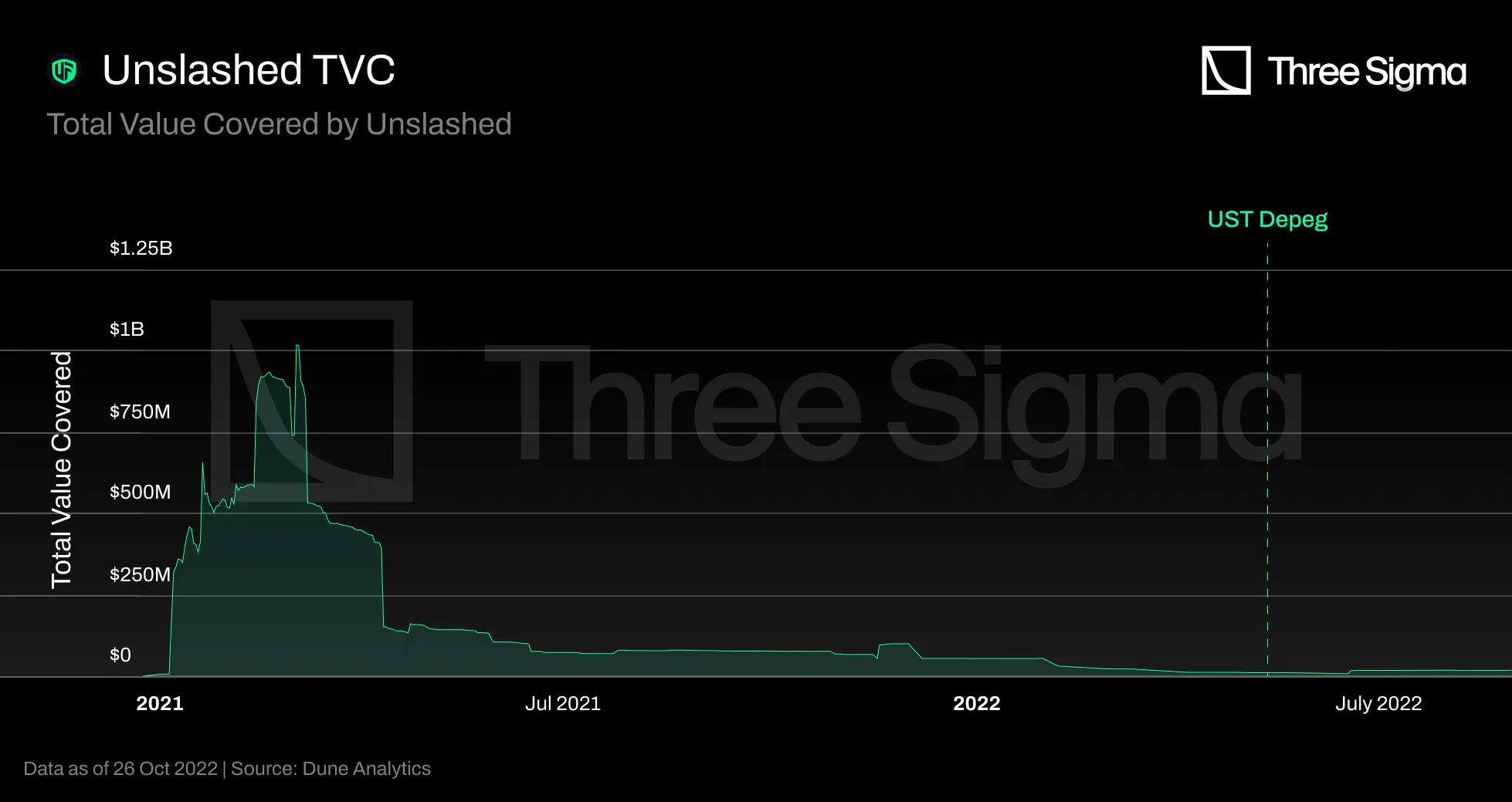

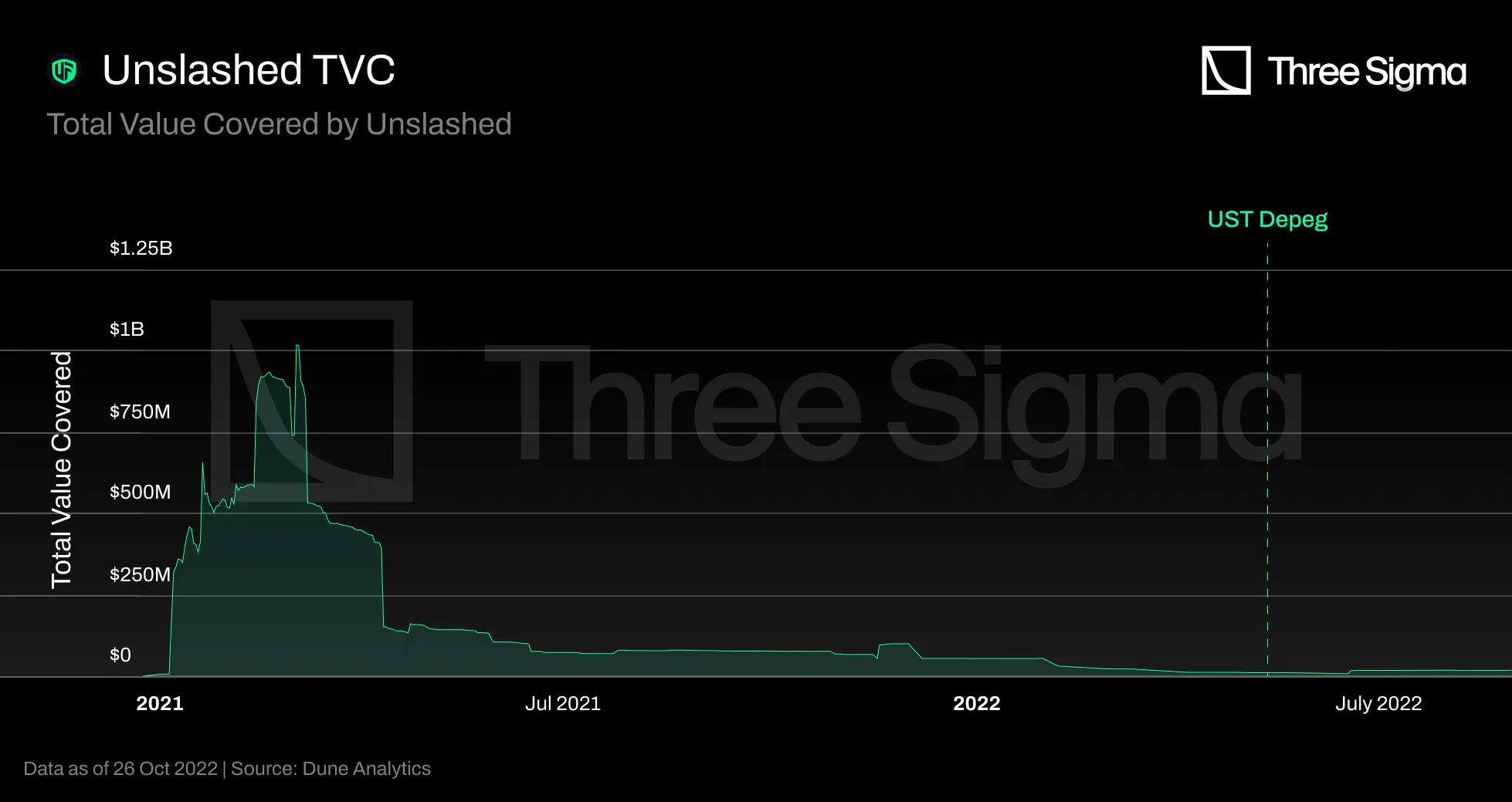

Unslashed launched its product during a bull market fueled by DeFi summer, which attracted a significant

amount of capital and cover purchases, since customers were able to pay extra to protect their assets.

Close to 100 claims were submitted as a result of the UST depeg, and once paid or denied, the

corresponding policies expired, which explains the subsequent drop in active coverage. Like other

insurance protocols, Unslashed has struggled to return to its glory days following this catastrophe.

Revenue

There is currently no publicly available information regarding the Unslashed protocol's revenue stream or

similar statistics, so no conclusion can be drawn.

Final Thoughts

Unslashed seems to prioritize partnering with DeFi protocols and protecting them against some of their

risks, rather than targeting individual users. Giving both capital providers and coverage seekers ERC-20

tokens that represent their positions allows other protocols to build on top of Unslashed and potentially

create added value. Another protocol could, for instance, issue risk-free tokens that combine a position

and the corresponding insurance. Users can also speculate, for example by selling their premium tokens at

a higher price when there is not enough capital to offer more insurance.

NSure

After a Polygon beta, NSure launched on Ethereum in April 2021. NSure is conceptually similar to Nexus

Mutual in that it has a capital pool of multiple accepted assets and a surplus pool that accrues capital

through paid premiums. Unlike Nexus Mutual, however, it uses a Dynamic Price Model to determine

premiums, which vary across products in the marketplace based on real-time supply and demand. This

pricing model includes a Risk Parameter based on the rating assigned to each project by NSure. Their

current business model does not necessarily require KYC.

NSure Participants

Cover Providers can stake NSure tokens against protocols or custodians to underwrite insurance and earn

50% of premiums. Another 40% of premiums go to the surplus pool, and 10% is kept locked until the end of

the coverage to incentivise users to participate in the voting process if there is a claim request. The

rewards are proportional to the amount of capital the cover provider has locked into the pool.
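The premium split can be made concrete with a short sketch. It assumes the 50/40/10 percentages stated above, with hypothetical provider names and stake amounts. Each premium payment is divided into the cover providers' share, the surplus pool and the locked claim-voting reserve, and the providers' share is then distributed in proportion to each provider's stake. The function name `split_premium` is illustrative.

```python
PROVIDER_SHARE = 0.50   # to cover providers (stakers)
SURPLUS_SHARE = 0.40    # to the surplus pool
LOCKED_SHARE = 0.10     # locked until coverage ends (claim-voting incentive)


def split_premium(premium: float, stakes: dict[str, float]) -> dict[str, object]:
    """Divide one premium payment according to the 50/40/10 rule."""
    total_stake = sum(stakes.values())
    if total_stake <= 0:
        raise ValueError("at least one provider must have a positive stake")
    provider_pot = premium * PROVIDER_SHARE
    return {
        # Each provider's reward is proportional to their share of the stake.
        "providers": {name: provider_pot * s / total_stake for name, s in stakes.items()},
        "surplus_pool": premium * SURPLUS_SHARE,
        "locked_for_voting": premium * LOCKED_SHARE,
    }
```

For a 100-token premium and two hypothetical providers staking 300 and 100, the providers would receive 37.5 and 12.5, the surplus pool 40, and 10 would stay locked until the cover ends.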

On the other hand, Claim Assessors are members who stake NSure tokens to evaluate claims submitted by

other members and receive rewards for voting in conformity with the consensus.

Cover Pricing

Nsure employs a dynamic pricing model based on supply and demand to determine policy premiums.

The model uses the 95th percentile of a beta distribution, Beta(α, β), whose shape parameters are driven

by capital demand (α) and capital supply (β). The premium is also influenced by a risk factor that

accounts for the project's level of security and a cost loading that accounts for claim settlement costs

and other internal expenses.

$$\text{annual premium} = \max\left[\text{95th percentile of Beta}(\alpha, \beta),\ \text{min prem factor}\right] \times \text{risk factor}$$

$$\alpha = \text{outstanding policy limit (in USD)} \times \text{demand scale factor}$$

$$\beta = \text{staking pool (in Nsure tokens)} \times \text{staking scale factor}$$

$$\text{policy premium} = \frac{\text{policy duration}}{365} \times \text{annual premium} \times \left[1 + \left(\frac{365}{\text{policy duration}} - 1\right) \times \text{avg. claim cost \%}\right]$$
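The pricing formulas above can be evaluated with the Python standard library alone. The sketch below finds the 95th percentile of Beta(α, β) by bisection on the regularized incomplete beta function, which it evaluates with the classic continued-fraction expansion. It then applies the annual and policy premium formulas. The scale factors, minimum premium factor, risk factor and average claim cost in the example are hypothetical placeholders, since Nsure's calibrated values are not given here. Premiums are treated as a fraction of the cover amount, which is also an assumption.

```python
import math


def _betacf(a: float, b: float, x: float, max_iter: int = 10_000, eps: float = 3e-14) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),          # even term
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):  # odd term
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def beta_cdf(x: float, a: float, b: float) -> float:
    """Regularized incomplete beta I_x(a, b), i.e. the Beta(a, b) CDF."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def beta_ppf(q: float, a: float, b: float, iters: int = 100) -> float:
    """Quantile of Beta(a, b) by bisection (the CDF is increasing in x)."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2.0
        if beta_cdf(mid, a, b) < q:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def shape_params(policy_limit_usd: float, staked_tokens: float,
                 demand_scale: float, staking_scale: float) -> tuple[float, float]:
    """alpha from cover demand, beta from staked capital (scale factors hypothetical)."""
    return policy_limit_usd * demand_scale, staked_tokens * staking_scale


def annual_premium(alpha: float, beta: float, min_prem_factor: float, risk_factor: float) -> float:
    return max(beta_ppf(0.95, alpha, beta), min_prem_factor) * risk_factor


def policy_premium(duration_days: float, annual: float, avg_claim_cost: float) -> float:
    """Pro-rated annual premium with a cost loading that weighs more on short policies."""
    return (duration_days / 365) * annual * (1 + (365 / duration_days - 1) * avg_claim_cost)


# Hypothetical example: 2M USD of outstanding cover, 40M staked tokens.
alpha, beta = shape_params(2_000_000, 40_000_000, demand_scale=1e-6, staking_scale=1e-6)
annual = annual_premium(alpha, beta, min_prem_factor=0.02, risk_factor=1.2)
print(f"annual rate {annual:.4f}, 90-day rate {policy_premium(90, annual, 0.05):.4f}")
```

If SciPy is available, `scipy.stats.beta.ppf(0.95, alpha, beta)` computes the same percentile. Raising α (more demand) pushes the percentile up, and raising β (more staked supply) pushes it down, which is the supply and demand behaviour the model relies on.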

The team recognizes that, due to the lack of historical data on smart contract exploits, it is difficult

to apply traditional actuarial pricing to Nsure products. They argue that, for the sake of transparency,

it is beneficial to use a supply and demand model that is easily verifiable.

Under a dynamic pricing model based on supply and demand, a high capital supply lowers the premium rate,

while high demand for cover raises it. Because premiums respond to these forces, the smaller the supply

and demand (i.e., the thinner the market), the more volatile the premiums. Conversely, the more insured

value there is in DeFi, and in Nsure in particular, the less sensitive premium pricing will be to

changes in demand and supply, which makes the insurance landscape more robust. However, in the case of

Nsure, the less the price is driven by supply and demand, the more it is influenced by a risk cost that

is currently determined by the team in a non-transparent way, which could be problematic.

The risk factor should account for the riskiness embedded in each project. Without this factor the

premium rate of two projects would be the same if their capital demand and supply were the same, which

is not ideal. However, finding a decentralized way to assess this risk factor would be an improvement.

Risk Cost

Nsure developed the Nsure Smart Contract Overall Security Score (N-SCOSS), a 0 to 100 rating system for

determining the risk cost for every project.

N-SCOSS is based on five major characteristics that, according to Nsure, determine how likely a protocol

is to suffer an exploit or a bug in its code: History and Team, Exposure (i.e. TVL and industry

segment), Audit, Code Quality, and Developer Community. The team assigns a weight to each category and

performs due diligence on each project by rating each category.

The formula used to calculate the N-SCOSS is as follows:

$$\text{N-SCOSS} = \sum_{i=1}^{5} w_i \cdot N_i$$

$$N_i = \sum_{j=1}^{k_i} w_{i,j} \cdot N_{i,j}, \qquad 0 \le N_{i,j} \le 100$$

where $N_i$ ($i = 1, \dots, 5$) are the five pillars of N-SCOSS and $w_i$ is the weight attributed to

each. Each pillar is further subdivided into $k_i$ separately analyzed rating factors, denoted $N_{i,j}$,

with weights $w_{i,j}$. Weights are assigned to each pillar and each rating factor to quantify its

relevance to the code's security.

To develop this system, factor groups that logically affect code security were selected. Then, data on

historical hack events were mapped to the selected rating factors, and the team analyzed whether they

were correlated. The significantly correlated factors were included in the final calculation of

N-SCOSS.
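The factor-selection step can be sketched as a correlation screen. The text does not say which statistic Nsure used, so this illustration makes several assumptions. It computes a Pearson correlation between each candidate factor's scores and a 0/1 flag for whether each project was later exploited (a point-biserial correlation). It keeps factors whose t-statistic reaches a hypothetical critical value of 2.0, and it runs on toy data rather than Nsure's dataset.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation; returns 0.0 when either series is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def t_stat(r: float, n: int) -> float:
    """t-statistic for testing r != 0 with n observations (requires |r| < 1)."""
    return r * math.sqrt((n - 2) / (1 - r * r))


def select_factors(factors: dict[str, list[float]], hacked: list[int],
                   t_crit: float = 2.0) -> list[str]:
    """Keep factors whose correlation with past hacks looks significant."""
    n = len(hacked)
    flags = [float(h) for h in hacked]
    kept = []
    for name, scores in factors.items():
        r = pearson(scores, flags)
        # |r| == 1 is trivially significant and would divide by zero in t_stat.
        if abs(r) >= 1 or abs(t_stat(r, n)) >= t_crit:
            kept.append(name)
    return kept


# Toy data: 8 projects, the first three were exploited.
hacked = [1, 1, 1, 0, 0, 0, 0, 0]
candidates = {
    "audit_score": [10, 20, 15, 80, 90, 85, 70, 95],
    "noise": [1, 2, 3, 1, 2, 3, 1, 2],
}
print(select_factors(candidates, hacked))
```

A real analysis would use far more hack events and would correct for testing many factors at once. The sketch only shows the shape of the screening step.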

The pillar of History & Team considers the following sub-factors: project age, past exploits (if any),

team anonymity and team experience in programming. The Exposure factor entails: total value locked,

industry segment and infrastructure. The Audit factor is measured by audit transparency and scope, audit

findings, audit firm trust score and other credits. Code Quality is assessed through documentation and

testing. Finally, the Developer Community factor takes into account bug bounty programs and issues

raised on GitHub.
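The two-level weighted sum can be computed directly. The pillar and sub-factor names below follow the text, but every weight and score is a hypothetical placeholder, because Nsure's actual weights are not given here. The sketch also assumes that the weights at each level sum to 1, which keeps N-SCOSS on the same 0 to 100 scale as the individual rating factors.

```python
import math

# Hypothetical (weight, score) pairs; scores N_ij are on a 0-100 scale.
PILLARS = {
    "History & Team": (0.25, {"project age": (0.3, 70), "past exploits": (0.3, 100),
                              "team anonymity": (0.2, 50), "team experience": (0.2, 80)}),
    "Exposure": (0.20, {"TVL": (0.5, 60), "industry segment": (0.3, 50),
                        "infrastructure": (0.2, 70)}),
    "Audit": (0.30, {"transparency & scope": (0.3, 90), "findings": (0.3, 75),
                     "audit firm trust score": (0.3, 85), "other credits": (0.1, 40)}),
    "Code Quality": (0.15, {"documentation": (0.5, 80), "testing": (0.5, 60)}),
    "Developer Community": (0.10, {"bug bounty": (0.6, 100), "GitHub issues": (0.4, 50)}),
}


def weighted_sum(items: dict[str, tuple[float, float]]) -> float:
    """Sum of weight * value; the weights are assumed (hypothetically) to sum to 1."""
    if not math.isclose(sum(w for w, _ in items.values()), 1.0):
        raise ValueError("weights are assumed to sum to 1")
    return sum(w * v for w, v in items.values())


def pillar_score(factors: dict[str, tuple[float, float]]) -> float:
    """N_i = sum_j w_ij * N_ij, with each N_ij in [0, 100]."""
    if any(not 0 <= score <= 100 for _, score in factors.values()):
        raise ValueError("rating factor scores must lie in [0, 100]")
    return weighted_sum(factors)


def n_scoss(pillars: dict) -> float:
    """N-SCOSS = sum_i w_i * N_i over the five pillars."""
    return weighted_sum({name: (w, pillar_score(f)) for name, (w, f) in pillars.items()})


print(n_scoss(PILLARS))
```

With these placeholder numbers the pillars score 77, 59, 79, 70 and 80, which combine to an N-SCOSS of 73.25. Changing a single weight shows how strongly the team's weighting choices drive the final rating.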

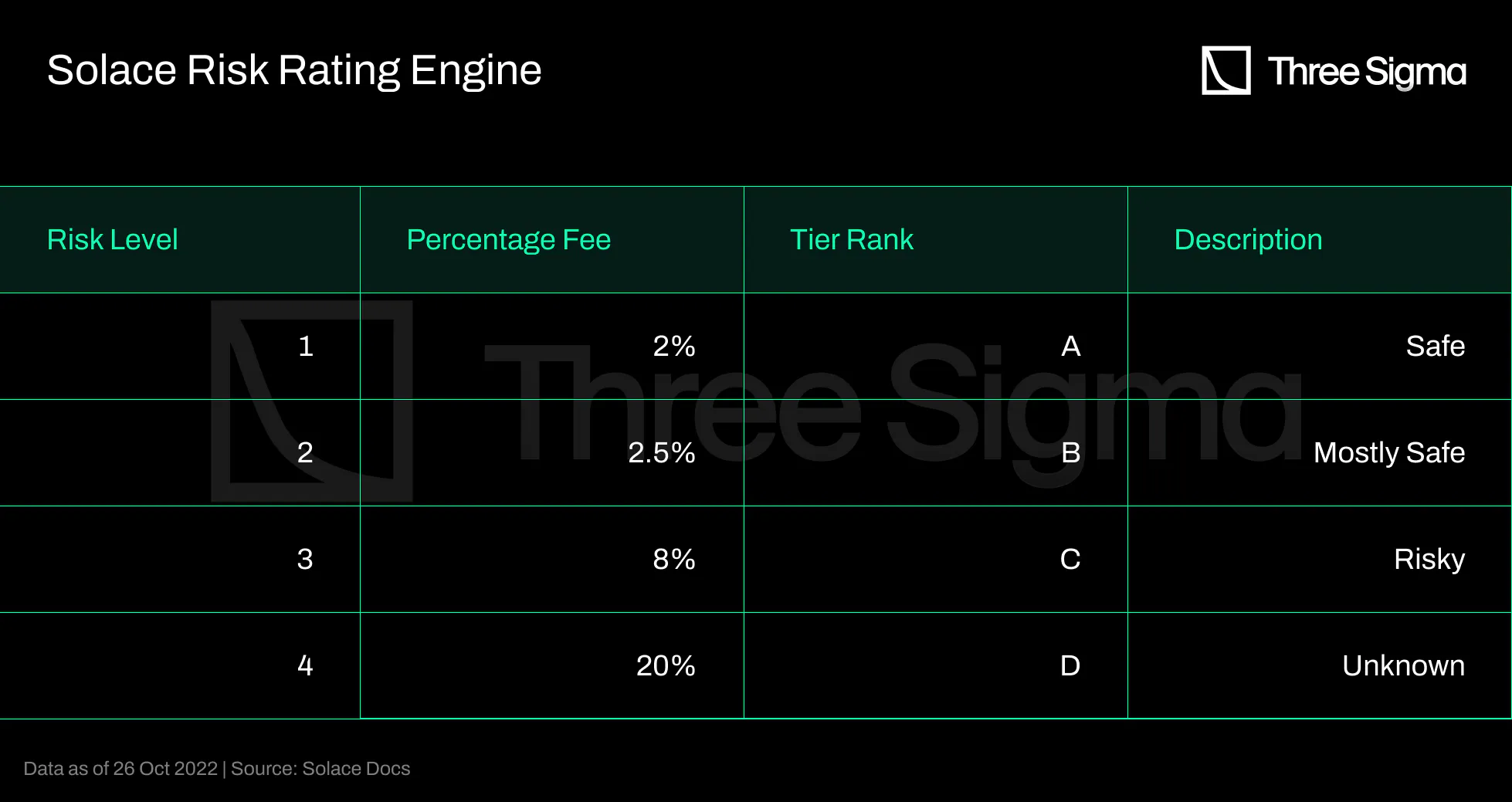

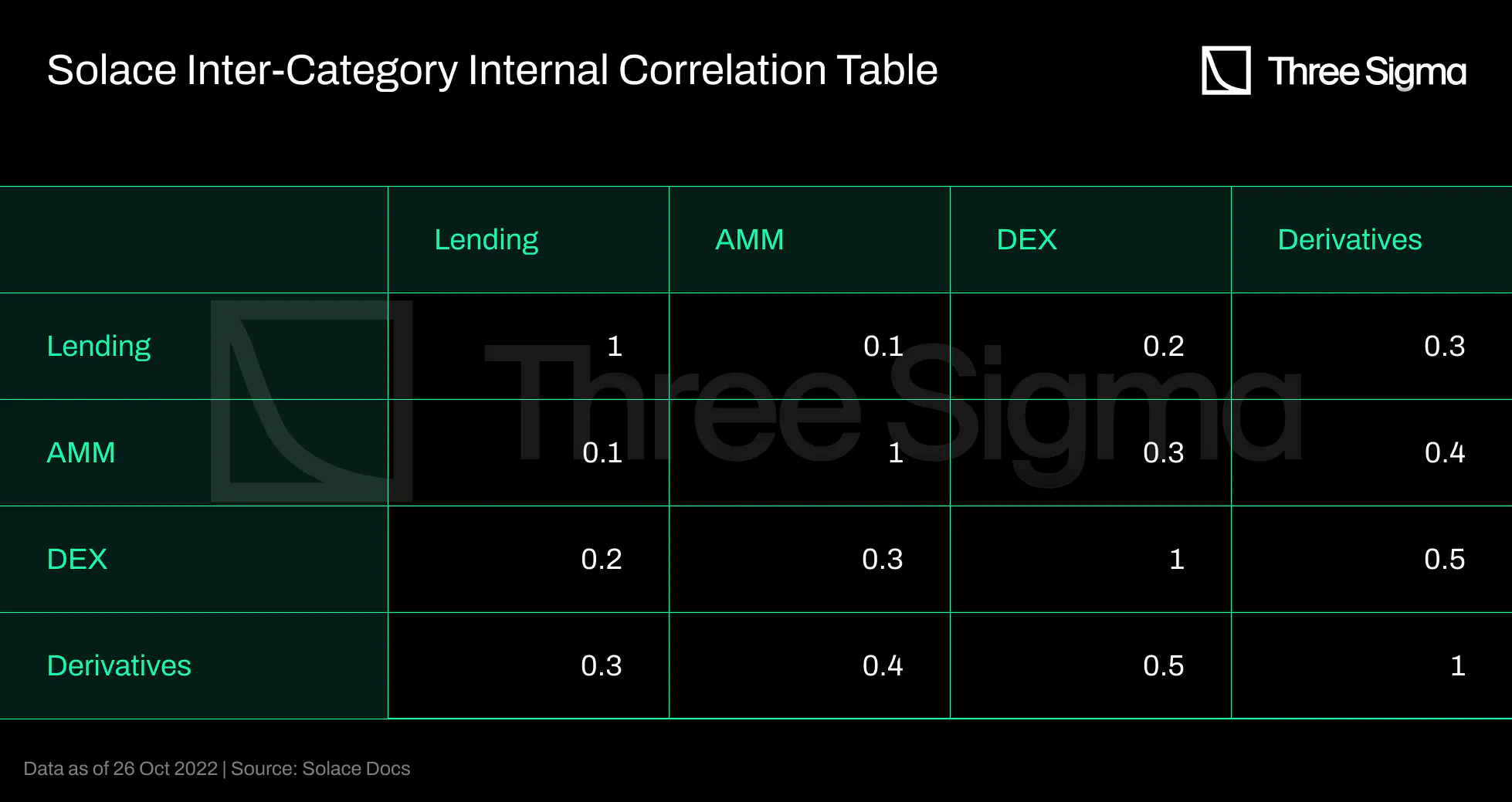

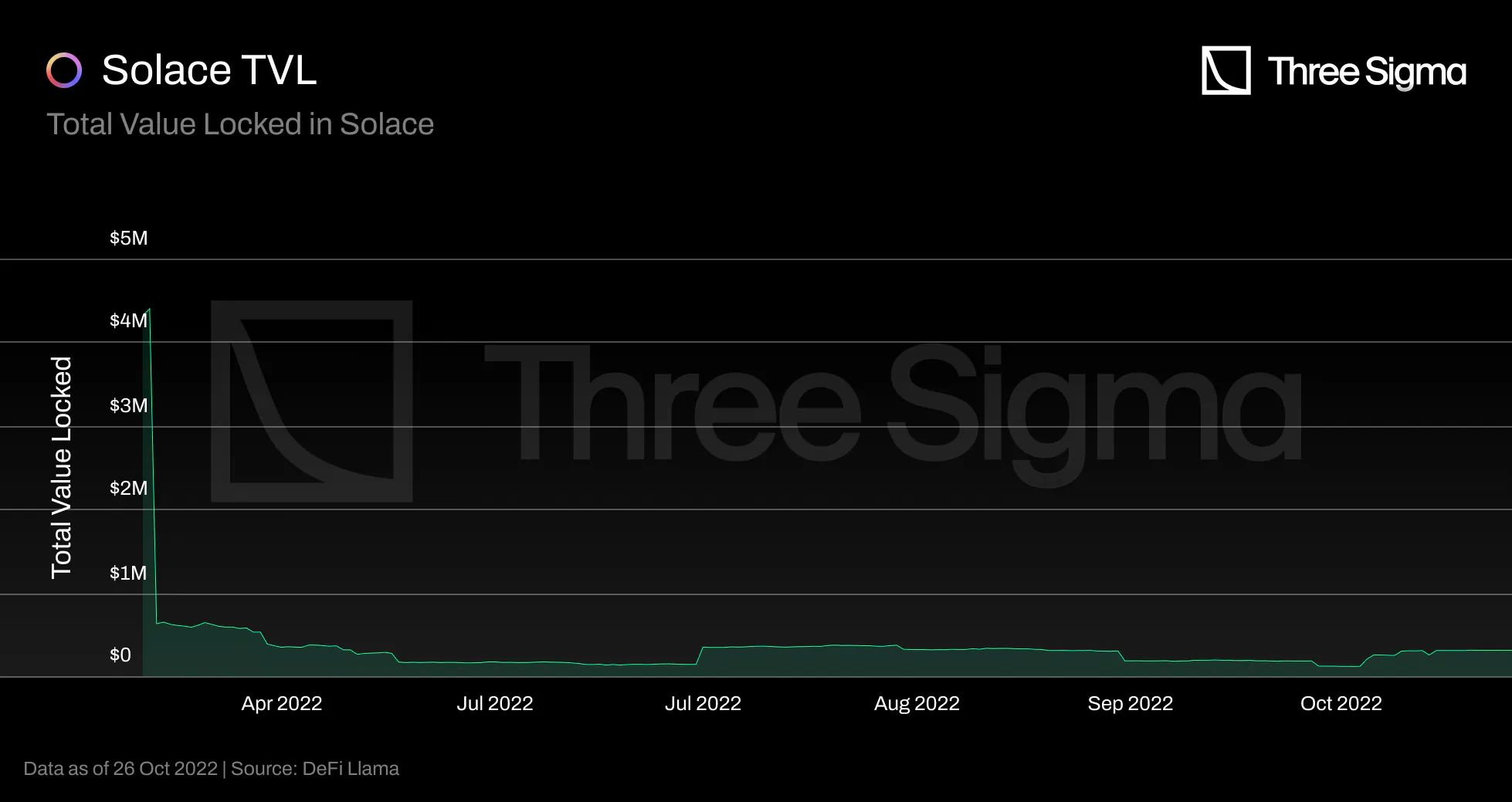

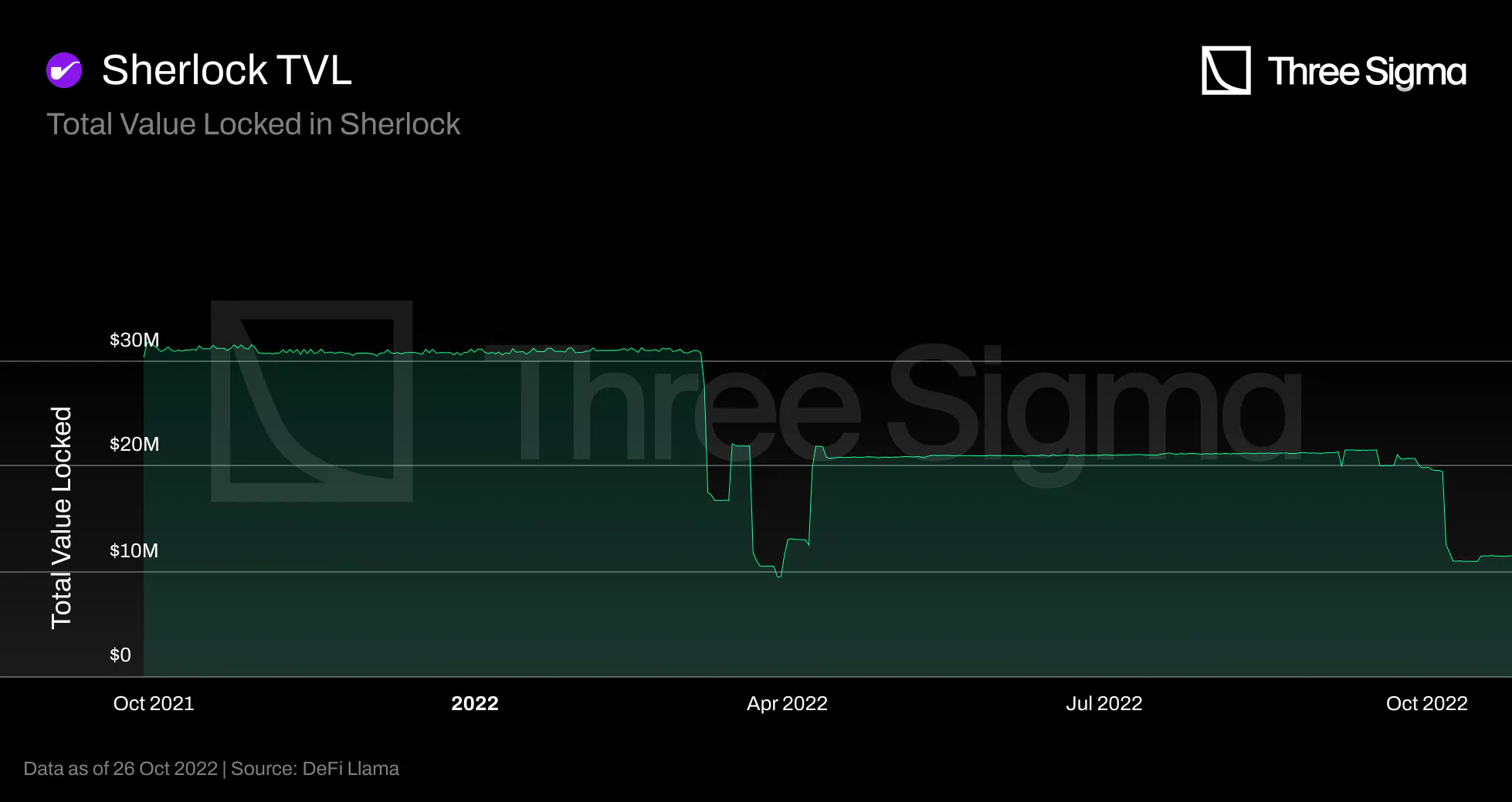

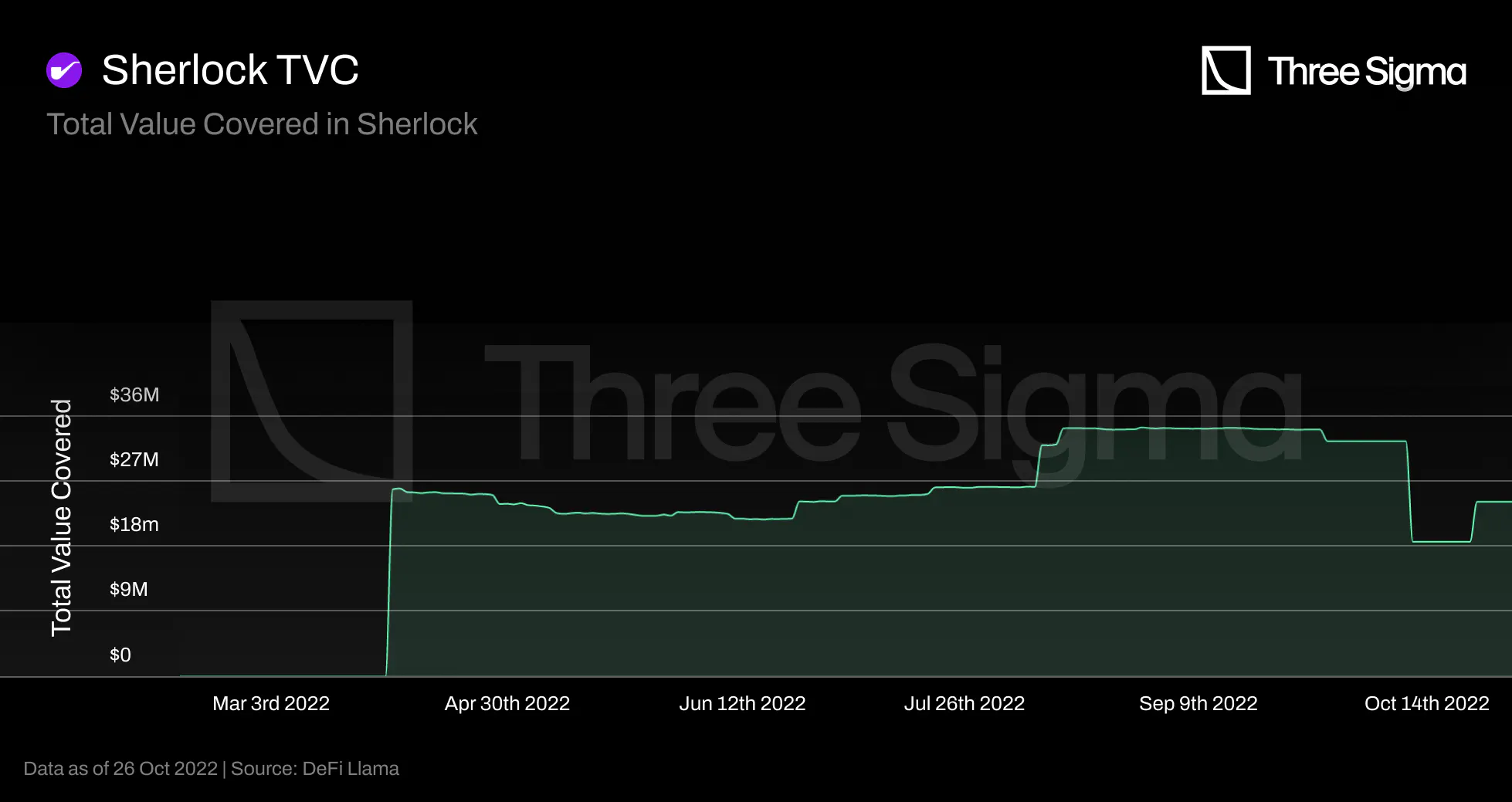

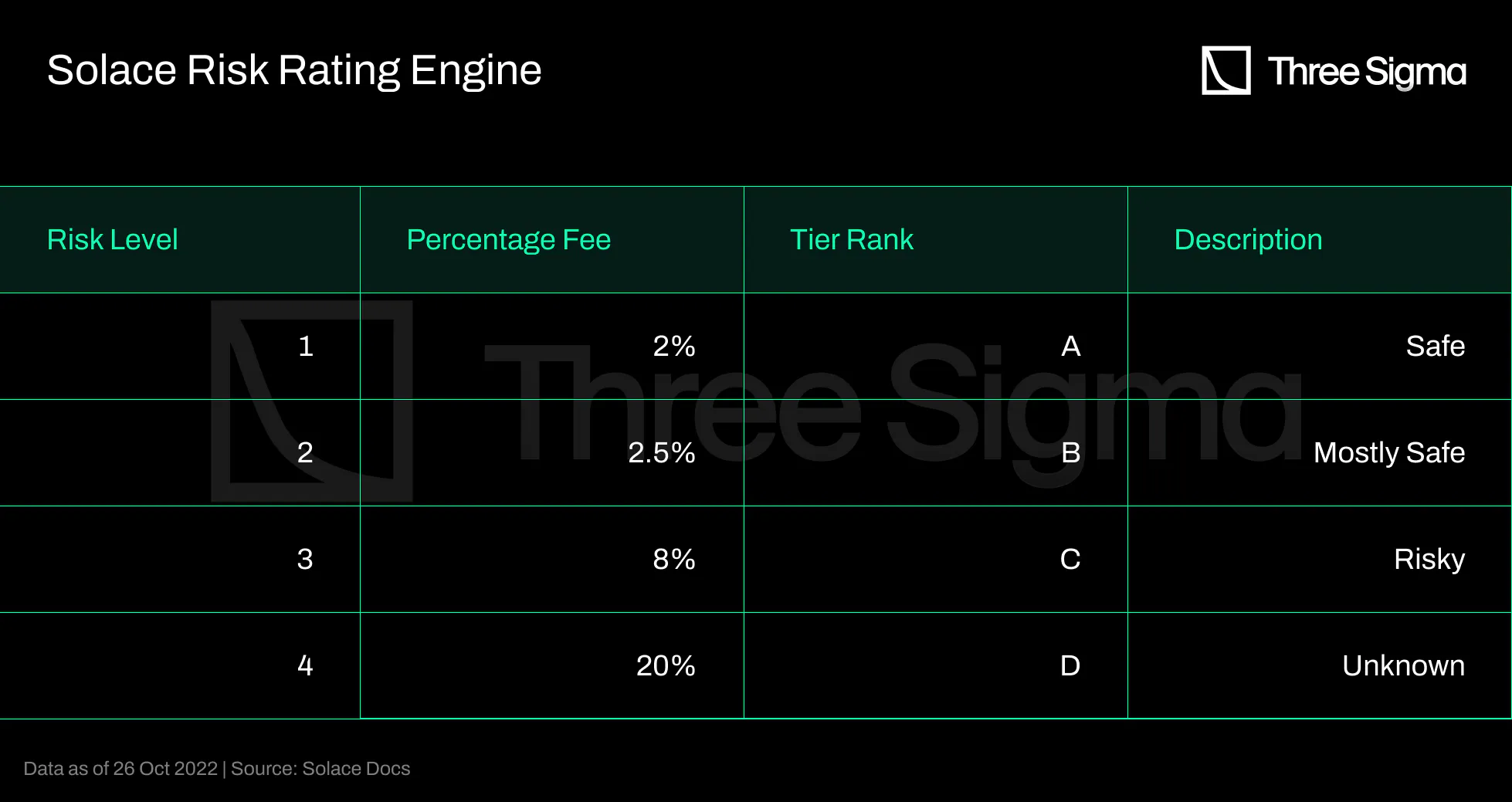

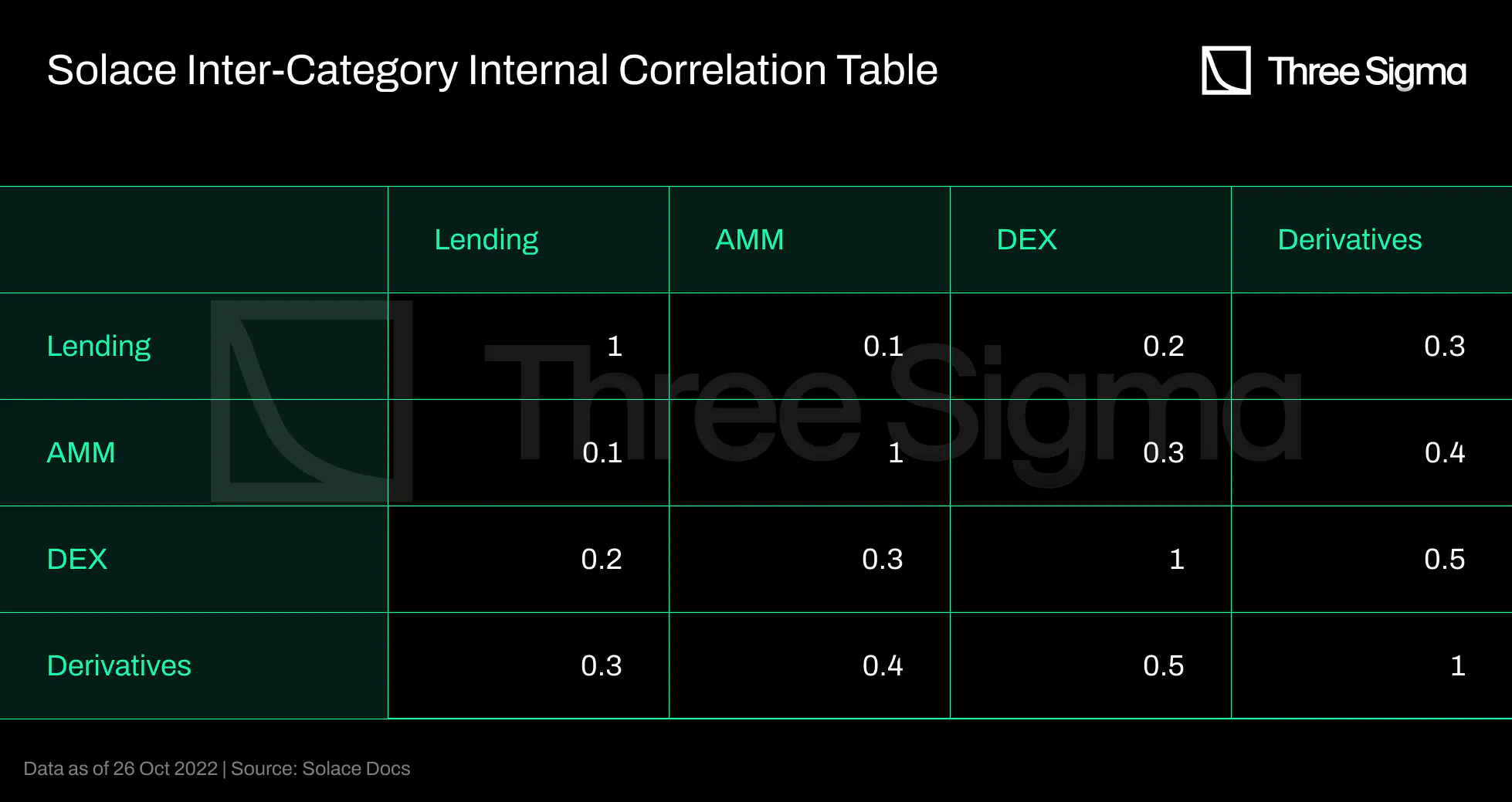

The team points to some improvements that could be made to the system, such as introducing an adjustment